Enabling FlexRAN 20.11 on OCP4: PF mode, option 7.2

This article describes how to port Intel's O-RAN solution, FlexRAN (version 20.11), to the OpenShift platform.

The environment runs on OpenShift 4.9.5. The hardware includes an Intel E810 network card and an ACC100 accelerator card. Due to software limitations, the network card runs in VF mode, while the ACC100 does not enable VFs and is used in PF mode. The PTP component is not the PTP operator that ships with OpenShift but an upgraded custom build. The following diagram shows how the running containers relate to the operators and the hardware:

The overall network architecture of this experiment:

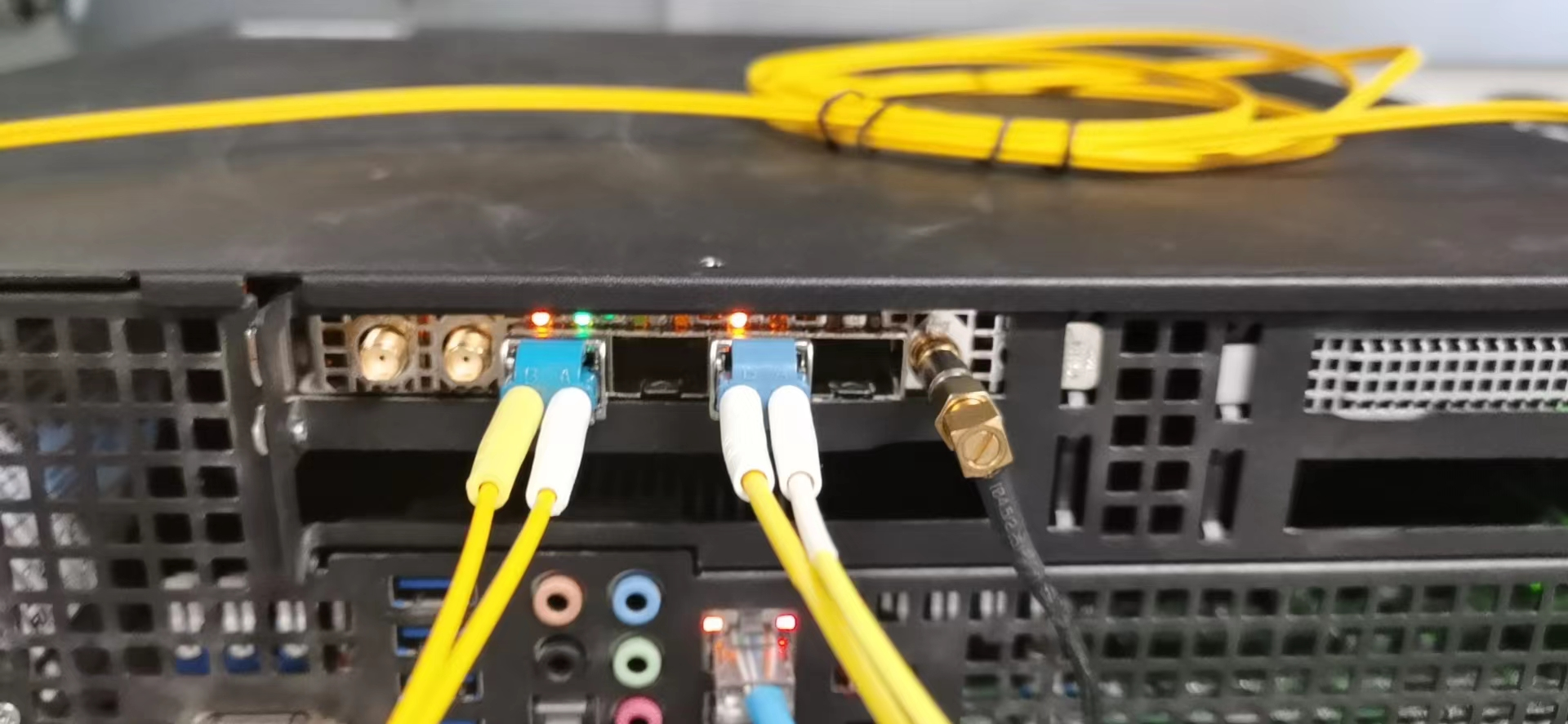

The Intel E810 NIC looks like this:

The Intel ACC100 looks like this:

The RU used in the experiment looks like this:

Video walkthrough

For how to build the base images, see the environment development documentation.

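Building the application image

We have already built a base image, quay.io/nepdemo/flexran_vdu:flexran-20.11-dpdk-19.11-ocp4.9.5-ubi-8.4-core-conf. The image is large (>5 GB), so an image registry at the project site is strongly recommended. On site we also need to adjust the BBU application parameters; this is done by injecting a script through a config map.

Core configuration

The BBU application is a large DPDK application, and CPU pinning is critical for DPDK applications. A bad configuration can make the application core dump or even hang the physical host. We provide a template for a 16-core configuration that uses cores 1-16; in our tests its stability was acceptable.

In the demo BBU application, the physical layer uses 8 cores and L2/L3 use the remaining 8. If these core assignments conflict with each other, the physical layer core dumps.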
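A quick way to catch such a conflict before starting the BBU is to compare the two core lists. The sketch below is not part of the FlexRAN tooling: the function name `overlapping_cores` and the example lists are assumptions made for illustration, and it only handles plain comma-separated lists without spaces or ranges.

```shell
#!/bin/bash
# overlapping_cores LIST_A LIST_B
# Print, as a comma-separated list, every core ID from LIST_A that also
# appears in LIST_B. Prints an empty line when the lists are disjoint.
overlapping_cores() {
    result=""
    for core in $(echo "$1" | tr ',' ' '); do
        case ",$2," in
            *",$core,"*) result="${result:+$result,}$core" ;;
        esac
    done
    echo "$result"
}

# Illustrative lists only, not the values used by the real configuration.
phy_cores='9,10,11,12,13,14,15,16'
l2l3_cores='1,2,3,4,5,6,7,8'
conflict=$(overlapping_cores "$phy_cores" "$l2l3_cores")
if [ -n "$conflict" ]; then
    echo "core conflict: $conflict"
else
    echo "no core conflict"
fi
```

Running the check as part of the startup script would turn a silent misconfiguration into an explicit message before any DPDK thread is pinned.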

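Building the image

The upstream image is a ubi-init image that includes systemd. It contains a set_ip.sh script and a matching systemd service. In practice, however, we found that the BBU and related applications exited unexpectedly when started through systemd. We therefore keep the ubi-init image but bypass the default init at startup and run a script directly.

Since we run our own script, we use it to initialize the environment and to apply the BBU core-binding parameters. Finally, the BBU application is started from the Kubernetes configuration.

The detailed image build steps are quite tedious; see this document.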

deploy on ocp 4.9.5

With the images ready, we start the deployment test on OpenShift 4.

set security for temp image registry

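We created a temporary image registry, so we need to add its configuration to the cluster, mainly so that the OCP cluster does not verify the new registry's certificate.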

oc patch schedulers.config.openshift.io/cluster --type merge -p '{"spec":{"mastersSchedulable":false}}'

install /data/ocp4/clients/butane-amd64 /usr/local/bin/butane

cat << EOF > /data/sno/tmp.images.bu

variant: openshift

version: 4.9.0

metadata:

labels:

machineconfiguration.openshift.io/role: worker

name: 99-zzz-worker-temp-images

storage:

files:

- path: /etc/containers/registries.conf.d/temp.registries.conf

overwrite: true

contents:

inline: |

[[registry]]

location = "tmp-registry.ocp4.redhat.ren:5443"

insecure = true

blocked = false

mirror-by-digest-only = false

prefix = ""

EOF

butane /data/sno/tmp.images.bu > /data/sno/99-zzz-worker-temp-images.yaml

oc create -f /data/sno/99-zzz-worker-temp-images.yaml

worker-2 node, rt-kernel setting

The BBU application needs a real-time operating system, so we use OpenShift's Performance Addon Operator (PAO). PAO can activate the rt-kernel and also set kernel parameters. We need to configure hugepages and CPU isolation, and blacklist the in-box e810 driver.

cat << EOF > /data/install/performance-2.yaml

---

apiVersion: performance.openshift.io/v2

kind: PerformanceProfile

metadata:

name: wzh-performanceprofile-2

spec:

additionalKernelArgs:

- nmi_watchdog=0

- isolcpus=1-18

- nohz_full=1-18

- rcu_nocbs=1-18

- kthread_cpus=0,19

- irqaffinity=0,19

- iommu=pt

- intel_iommu=on

- intel_pstate=disable

# try to upgrade e810 driver

- ice.blacklist=1

- rd.driver.blacklist=ice

# profile creator

- audit=0

- mce=off

- nmi_watchdog=0

globallyDisableIrqLoadBalancing: true

cpu:

isolated: "1-18"

reserved: "0,19"

hugepages:

defaultHugepagesSize: "1G"

pages:

- size: "1G"

count: 24

realTimeKernel:

enabled: true

numa:

topologyPolicy: "single-numa-node"

nodeSelector:

node-role.kubernetes.io/worker-rt-2: ""

machineConfigPoolSelector:

machineconfiguration.openshift.io/role: worker-rt-2

EOF

oc create --save-config -f /data/install/performance-2.yaml

# oc apply -f /data/install/performance-2.yaml

# oc delete -f /data/install/performance-2.yaml

oc label node worker-2.ocp4.redhat.ren node-role.kubernetes.io/worker-rt-2=""
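After the node has rebooted into the new profile, it is worth confirming that the kernel arguments actually reached the kernel command line. The helper below is a minimal sketch under the assumption that exact string matching against `/proc/cmdline` is good enough; the function name `missing_kernel_args` is made up for this example.

```shell
#!/bin/bash
# missing_kernel_args CMDLINE ARG...
# Print each expected ARG that does not appear as a whole word in CMDLINE.
missing_kernel_args() {
    cmdline=" $1 "
    shift
    for arg in "$@"; do
        case "$cmdline" in
            *" $arg "*) ;;          # present, nothing to report
            *) echo "$arg" ;;       # absent, report it
        esac
    done
}

# Usage on the node itself, for example inside
#   oc debug node/worker-2.ocp4.redhat.ren
# after "chroot /host":
#   missing_kernel_args "$(cat /proc/cmdline)" \
#       isolcpus=1-18 nohz_full=1-18 rcu_nocbs=1-18 iommu=pt intel_iommu=on
```

An empty result means every listed argument is present; any printed line names an argument that did not make it onto the command line.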

intel e810 driver

The e810 driver ice.ko shipped with the CoreOS release for OpenShift 4.9.5 is too old to support PTP, so we need to upgrade it. Upgrading a driver on CoreOS is cumbersome: we create a systemd service that starts before kubelet, and inside this service use podman to start a privileged container that runs insmod ice.ko. All of this assumes that automatic loading of ice has been blocked through the kernel parameters.

cat << EOF > /data/sno/static-pod.bu

variant: openshift

version: 4.9.0

metadata:

labels:

machineconfiguration.openshift.io/role: worker-rt-2

name: 99-zzz-e810-dpdk-driver-static-worker-rt-2

storage:

files:

- path: /etc/modprobe.d/blacklist-ice.conf

mode: 0644

overwrite: true

contents:

inline: |

blacklist ice

systemd:

units:

- name: driver.ice.service

enabled: true

contents: |

[Unit]

Description=driver.ice service

Wants=network-online.target

After=network-online.target

[Service]

Type=oneshot

RemainAfterExit=yes

User=root

WorkingDirectory=/root/

ExecStart=podman run --privileged --rm -it quay.io/nepdemo/intel-driver:8.4-rt-1.9.7 /bin/sh -c " rmmod ice; rmmod auxiliary ; insmod /diy/auxiliary.ko; insmod /diy/ice.ko ; "

[Install]

WantedBy=multi-user.target

- name: kubelet.service

dropins:

- name: 99-after-ice.conf

contents: |

[Unit]

Requires=driver.ice.service

After=driver.ice.service

EOF

butane -d /data/install /data/sno/static-pod.bu > /data/install/99-zzz-e810-dpdk-driver-static-worker-rt-2.yaml

oc create --save-config -f /data/install/99-zzz-e810-dpdk-driver-static-worker-rt-2.yaml

# oc apply -f /data/install/99-zzz-e810-dpdk-driver-static-worker-rt-2.yaml

# oc delete -f /data/install/99-zzz-e810-dpdk-driver-static-worker-rt-2.yaml
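To check that the replacement driver is the one actually loaded after boot, the reported driver version can be compared against a minimum. This is only a sketch: the helper name `version_ge` is invented here, the minimum version in the usage comment is a placeholder rather than a documented requirement, and it relies on the `-V` option of GNU `sort`.

```shell
#!/bin/bash
# version_ge A B
# Succeed when version string A is greater than or equal to version string B.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage on the node (interface name taken from this article):
#   loaded=$(ethtool -i ens2f0 | awk '/^version:/ { print $2 }')
#   version_ge "$loaded" "1.0.0" && echo "ice driver $loaded is new enough"
```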

linuxptp 3.1.1

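vRAN applications, especially option 7.2 deployments, need PTP clock support. Physically, either a GPS master is connected to the switch and time is distributed through the switch, or the GPS master is connected directly to the NIC. On the server side, the NIC must support network time synchronization, the host must run the PTP services, and NTP must be turned off.

ptp4l takes time from the network and disciplines the NIC clock, and phc2sys copies the NIC clock to the system clock. ts2phc synchronizes the NIC's PTP hardware clock from an external timestamp signal, such as the 1PPS output of a GNSS module.

The PTP operator that ships with OpenShift is a relatively old version, so we need an upgrade: we build our own image and run our own service.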

build linuxptp container image

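On a machine with internet access, we build the image from linuxptp 3.1.1 and make it accept injected parameters, which makes on-site adjustment easier.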

# http://linuxptp.sourceforge.net/

# download linuxptp-3.1.1

mkdir -p /data/ptp

cd /data/ptp

wget https://nchc.dl.sourceforge.net/project/linuxptp/v3.1/linuxptp-3.1.1.tgz

tar zvxf linuxptp-3.1.1.tgz

cd linuxptp-3.1.1

make

cat << 'EOF' > ptp4l.sh

#!/bin/bash

if [ -z "$DEMO_ENV_PRIO" ]; then

/usr/local/sbin/ptp4l -f /etc/ptp4l.conf -m $DEMO_ENV_PTP4L_ARG

else

/usr/bin/chrt -f $DEMO_ENV_PRIO /usr/local/sbin/ptp4l -f /etc/ptp4l.conf -m $DEMO_ENV_PTP4L_ARG

fi

EOF

cat << 'EOF' > phc2sys.sh

#!/bin/bash

if [ -z "$DEMO_ENV_PRIO" ]; then

/usr/local/sbin/phc2sys -m -z /var/run/ptp4l -t '[phc2sys]' $DEMO_ENV_PHC2SYS_ARG

else

/usr/bin/chrt -f "$DEMO_ENV_PRIO" /usr/local/sbin/phc2sys -m -z /var/run/ptp4l -t '[phc2sys]' $DEMO_ENV_PHC2SYS_ARG

fi

EOF

cat << 'EOF' > ts2phc.sh

#!/bin/bash

if [ -z "$DEMO_ENV_PRIO" ]; then

/usr/local/sbin/ts2phc -f /etc/ts2phc.cfg -m $DEMO_ENV_TS2PHC_ARG

else

/usr/bin/chrt -f $DEMO_ENV_PRIO /usr/local/sbin/ts2phc -f /etc/ts2phc.cfg -m $DEMO_ENV_TS2PHC_ARG

fi

EOF

cat << EOF > ./ptp.dockerfile

FROM registry.access.redhat.com/ubi8/ubi:8.4

COPY hwstamp_ctl nsm phc2sys phc_ctl pmc ptp4l timemaster ts2phc incdefs.sh version.sh ptp4l.sh phc2sys.sh ts2phc.sh /usr/local/sbin/

RUN cd /usr/local/sbin/ && chmod +x hwstamp_ctl nsm phc2sys phc_ctl pmc ptp4l timemaster ts2phc incdefs.sh version.sh ptp4l.sh phc2sys.sh ts2phc.sh

EOF

podman build --squash -t quay.io/nepdemo/linuxptp:3.1.1-ubi-8.4-v06 -f ptp.dockerfile ./

podman push quay.io/nepdemo/linuxptp:3.1.1-ubi-8.4-v06

deploy linux ptp

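With the image ready, we create a deployment to start PTP. Note that it contains 3 containers, plus config maps that inject the configuration files. On site, remember to adjust the configuration parameters.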

oc new-project vbbu-demo

oc project vbbu-demo

export REG_TMP='tmp-registry.ocp4.redhat.ren:5443'

# kernel driver deployment

oc create serviceaccount svcacct-driver -n vbbu-demo

oc adm policy add-scc-to-user privileged -z svcacct-driver -n vbbu-demo

# oc adm policy add-scc-to-user anyuid -z mysvcacct -n vbbu-demo

# !!! remember to disable chronyd on dest host !!!

# we do not use ptp operator, so we need to do it manually

# TODO

# https://docs.openshift.com/container-platform/4.10/scalability_and_performance/ztp-configuring-single-node-cluster-deployment-during-installation.html#sno-du-disabling-ntp_sno-du-deploying-distributed-units-manually-on-single-node-openshift

cat << 'EOF' > /data/install/ptp.chrony.conf

apiVersion: machineconfiguration.openshift.io/v1

kind: MachineConfig

metadata:

labels:

machineconfiguration.openshift.io/role: worker-rt-2

name: disable-chronyd

spec:

config:

systemd:

units:

- contents: |

[Unit]

Description=NTP client/server

Documentation=man:chronyd(8) man:chrony.conf(5)

After=ntpdate.service sntp.service ntpd.service

Conflicts=ntpd.service systemd-timesyncd.service

ConditionCapability=CAP_SYS_TIME

[Service]

Type=forking

PIDFile=/run/chrony/chronyd.pid

EnvironmentFile=-/etc/sysconfig/chronyd

ExecStart=/usr/sbin/chronyd $OPTIONS

ExecStartPost=/usr/libexec/chrony-helper update-daemon

PrivateTmp=yes

ProtectHome=yes

ProtectSystem=full

[Install]

WantedBy=multi-user.target

enabled: false

name: chronyd.service

ignition:

version: 2.2.0

EOF

oc create -f /data/install/ptp.chrony.conf

cat << EOF > /data/install/ptp4l.conf

[global]

#

# Default Data Set

#

twoStepFlag 1

slaveOnly 0

priority1 128

priority2 128

domainNumber 24

clockClass 248

clockAccuracy 0xFE

offsetScaledLogVariance 0xFFFF

free_running 0

freq_est_interval 0

#

# Port Data Set

# 16 TS a second use logSyncInterval -4

#

#logAnnounceInterval 4

logAnnounceInterval 1

logSyncInterval -4

logMinDelayReqInterval 0

logMinPdelayReqInterval 0

announceReceiptTimeout 3

syncReceiptTimeout 0

delayAsymmetry 0

fault_reset_interval 4

neighborPropDelayThresh 20000000

#

# Run time options

#

assume_two_step 0

logging_level 6

path_trace_enabled 0

follow_up_info 0

tx_timestamp_timeout 200

use_syslog 1

verbose 0

summary_interval 0

kernel_leap 1

check_fup_sync 0

#

# Servo Options

#

pi_proportional_const 0.0

pi_integral_const 0.0

pi_proportional_scale 0.0

pi_proportional_exponent -0.3

pi_proportional_norm_max 0.7

pi_integral_scale 0.0

pi_integral_exponent 0.4

pi_integral_norm_max 0.3

step_threshold 0.00000002

first_step_threshold 0.00002

max_frequency 900000000

clock_servo nullf

sanity_freq_limit 200000000

ntpshm_segment 0

#

# Transport options

#

transportSpecific 0x0

ptp_dst_mac 01:1B:19:00:00:00

p2p_dst_mac 01:80:C2:00:00:0E

udp6_scope 0x0E

uds_address /var/run/ptp4l

#

# Default interface options

#

network_transport UDPv4

#network_transport L2

delay_mechanism E2E

time_stamping hardware

delay_filter moving_median

delay_filter_length 10

egressLatency 0

ingressLatency 0

boundary_clock_jbod 0

#

# Clock description

#

productDescription ;;

revisionData ;;

manufacturerIdentity 00:00:00

userDescription ;

timeSource 0xA0

EOF

cat << EOF > /data/install/ts2phc.cfg

[global]

use_syslog 0

verbose 1

logging_level 7

ts2phc.pulsewidth 100000000

# For GNSS module

ts2phc.nmea_serialport /dev/ttyGNSS_6500_0

[ens2f0]

ts2phc.extts_polarity rising

EOF

oc delete configmap ptp-config -n vbbu-demo

oc create configmap ptp-config -n vbbu-demo --from-file=/data/install/ptp4l.conf --from-file=/data/install/ts2phc.cfg --save-config=true

# 06 for fifo

# 07 for nice

export VAR_IMAGE='quay.io/nepdemo/linuxptp:3.1.1-ubi-8.4-v06'

cat << EOF > /data/install/ptp.demo.yaml

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nepdemo-linuxptp-daemon

labels:

app: nepdemo-linuxptp-daemon

spec:

replicas: 1

selector:

matchLabels:

app: nepdemo-linuxptp-daemon

template:

metadata:

annotations:

labels:

app: nepdemo-linuxptp-daemon

name: nepdemo-linuxptp-daemon

# namespace: openshift-ptp

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchFields:

- key: metadata.name

operator: In

values:

- worker-2.ocp4.redhat.ren

tolerations:

- key: "vbbu"

operator: "Exists"

effect: "NoSchedule"

containers:

- name: ptp4l

image: $VAR_IMAGE

command: ["/bin/sh", "-c", "--"]

args: [" /usr/local/sbin/ptp4l.sh ;"]

env:

- name: DEMO_ENV_PTP4L_ARG

value: " -i ens2f0 -2 "

- name: DEMO_ENV_PRIO

value: "65"

securityContext:

privileged: true

runAsUser: 0

volumeMounts:

- mountPath: /etc/ptp4l.conf

subPath: ptp4l.conf

name: config-volume

- mountPath: /var/run

name: socket-dir

- name: phc2sys

image: $VAR_IMAGE

imagePullPolicy: IfNotPresent

command: ["/bin/sh", "-c", "--"]

args: [" /usr/local/sbin/phc2sys.sh ;"]

env:

- name: DEMO_ENV_PHC2SYS_ARG

# value: " -s ens2f0 -O 0 -R 8 "

value: " -s ens2f0 -r -u 1 -O 0 -R 8 "

- name: DEMO_ENV_PRIO

value: "65"

securityContext:

privileged: true

runAsUser: 0

volumeMounts:

- mountPath: /etc/ptp4l.conf

subPath: ptp4l.conf

name: config-volume

- mountPath: /var/run

name: socket-dir

- name: ts2phc

image: $VAR_IMAGE

imagePullPolicy: IfNotPresent

command: ["/bin/sh", "-c", "--"]

args: [" /usr/local/sbin/ts2phc.sh ;"]

env:

- name: DEMO_ENV_TS2PHC_ARG

value: " -s generic -c ens2f0 "

- name: DEMO_ENV_PRIO

value: "65"

securityContext:

privileged: true

runAsUser: 0

volumeMounts:

- mountPath: /etc/ts2phc.cfg

subPath: ts2phc.cfg

name: config-volume

- mountPath: /var/run

name: socket-dir

- name: dev

mountPath: /dev

hostNetwork: true

# hostPID: true

serviceAccountName: svcacct-driver

volumes:

- configMap:

defaultMode: 420

name: ptp-config

name: config-volume

- name: socket-dir

emptyDir: {}

- name: dev

hostPath:

path: "/dev"

EOF

oc create --save-config -n vbbu-demo -f /data/install/ptp.demo.yaml

# oc delete -n vbbu-demo -f /data/install/ptp.demo.yaml
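Once the three containers are running, the offsets they log give a rough picture of how well the clocks are locked. The sketch below assumes the usual linuxptp log layout in which the word `offset` is followed by the offset in nanoseconds; the function name `max_abs_offset` and the sample lines in the test are illustrative, not captured from this environment.

```shell
#!/bin/bash
# max_abs_offset
# Read ptp4l or phc2sys log lines on stdin and print the largest absolute
# value that follows the word "offset". Prints 0 when no offset is found.
max_abs_offset() {
    awk '
        {
            for (i = 1; i < NF; i++) {
                if ($i == "offset") {
                    v = $(i + 1) + 0
                    if (v < 0) v = -v
                    if (v > max) max = v
                }
            }
        }
        END { print max + 0 }
    '
}

# Usage against the deployment from this article:
#   oc logs -n vbbu-demo deploy/nepdemo-linuxptp-daemon -c ptp4l --tail=100 | max_abs_offset
#   oc logs -n vbbu-demo deploy/nepdemo-linuxptp-daemon -c phc2sys --tail=100 | max_abs_offset
```

What counts as an acceptable maximum depends on the RU and fronthaul requirements, so the threshold is left to the reader.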

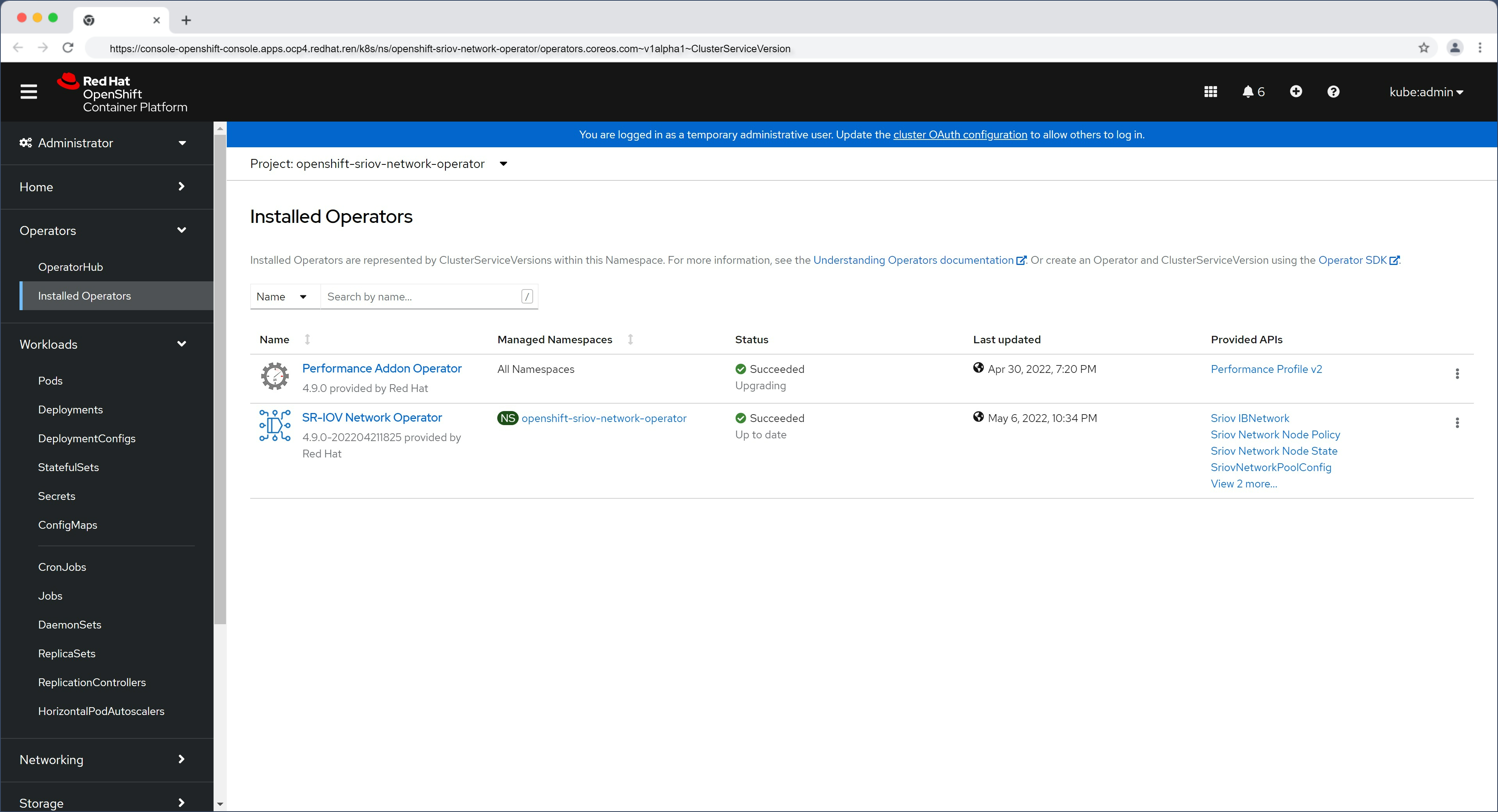

setup sriov operator

OpenShift has an SR-IOV operator that officially supports the Intel E810 NIC, so we simply use it.

The environment has an Intel E810 NIC (vendor ID 8086, device ID 1593).

# install sriov operator

cat << EOF > /data/install/sriov.yaml

---

apiVersion: v1

kind: Namespace

metadata:

name: openshift-sriov-network-operator

annotations:

workload.openshift.io/allowed: management

---

apiVersion: operators.coreos.com/v1

kind: OperatorGroup

metadata:

name: sriov-network-operators

namespace: openshift-sriov-network-operator

spec:

targetNamespaces:

- openshift-sriov-network-operator

---

apiVersion: operators.coreos.com/v1alpha1

kind: Subscription

metadata:

name: sriov-network-operator-subscription

namespace: openshift-sriov-network-operator

spec:

channel: "stable"

installPlanApproval: Manual

name: sriov-network-operator

source: redhat-operators

sourceNamespace: openshift-marketplace

EOF

oc create -f /data/install/sriov.yaml

oc get SriovNetworkNodeState -n openshift-sriov-network-operator

# NAME AGE

# master-0 42m

# worker-0.ocp4.redhat.ren 42m

# worker-1 42m

# worker-2.ocp4.redhat.ren 42m

oc get SriovNetworkNodeState/worker-2.ocp4.redhat.ren -n openshift-sriov-network-operator -o yaml

# apiVersion: sriovnetwork.openshift.io/v1

# kind: SriovNetworkNodeState

# metadata:

# creationTimestamp: "2022-05-06T14:34:54Z"

# generation: 61

# name: worker-2.ocp4.redhat.ren

# namespace: openshift-sriov-network-operator

# ownerReferences:

# - apiVersion: sriovnetwork.openshift.io/v1

# blockOwnerDeletion: true

# controller: true

# kind: SriovNetworkNodePolicy

# name: default

# uid: 4eca5eea-e1e5-410f-8833-dd2de1434e53

# resourceVersion: "93262422"

# uid: 1d122c8e-b788-4f1e-a3d5-865c6230a476

# spec:

# dpConfigVersion: "93222170"

# status:

# interfaces:

# - deviceID: "1593"

# driver: ice

# linkSpeed: -1 Mb/s

# linkType: ETH

# mac: 40:a6:b7:82:0e:4c

# mtu: 1500

# name: ens2f0

# pciAddress: 0000:65:00.0

# totalvfs: 64

# vendor: "8086"

# - deviceID: "1593"

# driver: ice

# linkSpeed: -1 Mb/s

# linkType: ETH

# mac: 40:a6:b7:82:0e:4d

# mtu: 1500

# name: ens2f1

# pciAddress: 0000:65:00.1

# totalvfs: 64

# vendor: "8086"

# - deviceID: "1593"

# driver: ice

# linkSpeed: -1 Mb/s

# linkType: ETH

# mac: 40:a6:b7:82:0e:4e

# mtu: 1500

# name: ens2f2

# pciAddress: 0000:65:00.2

# totalvfs: 64

# vendor: "8086"

# - deviceID: "1593"

# driver: ice

# linkSpeed: -1 Mb/s

# linkType: ETH

# mac: 40:a6:b7:82:0e:4f

# mtu: 1500

# name: ens2f3

# pciAddress: 0000:65:00.3

# totalvfs: 64

# vendor: "8086"

# - deviceID: 37d1

# driver: i40e

# linkSpeed: 1000 Mb/s

# linkType: ETH

# mac: ac:1f:6b:ea:5b:32

# mtu: 1500

# name: eno1

# pciAddress: 0000:b5:00.0

# totalvfs: 32

# vendor: "8086"

# - deviceID: 37d1

# driver: i40e

# linkSpeed: 1000 Mb/s

# linkType: ETH

# mac: ac:1f:6b:ea:5b:33

# mtu: 1500

# name: eno2

# pciAddress: 0000:b5:00.1

# totalvfs: 32

# vendor: "8086"

# syncStatus: Succeeded

# how to use SR-IOV to create VFs and attach them to a pod depends on the use case of the nep demo request

# remember to enable SR-IOV in the BIOS

# remember to enable VT-d in the BIOS

cat << EOF > /data/install/sriov.policy.yaml

---

apiVersion: sriovnetwork.openshift.io/v1

kind: SriovNetworkNodePolicy

metadata:

name: policy-810-nic01-rt2

namespace: openshift-sriov-network-operator

spec:

resourceName: intel_810_nic01_rt2

nodeSelector:

kubernetes.io/hostname: worker-2.ocp4.redhat.ren

numVfs: 2

nicSelector:

vendor: "8086"

deviceID: "1593"

rootDevices:

- "0000:65:00.0"

# pfNames:

# - "ens2f0"

# linkType: eth

# isRdma: false

deviceType: vfio-pci

EOF

oc create -f /data/install/sriov.policy.yaml

# oc delete -f /data/install/sriov.policy.yaml

oc get sriovnetworknodestates/worker-2.ocp4.redhat.ren -n openshift-sriov-network-operator -o jsonpath='{.status.syncStatus}' && echo

# Succeeded
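Applying the policy reconfigures the NIC and can take a while, so the status check above usually has to be repeated until it reports `Succeeded`. A small polling helper can do the waiting; this is a generic sketch, and the function name `wait_for_output` as well as the retry count and delay in the usage comment are arbitrary choices.

```shell
#!/bin/bash
# wait_for_output EXPECTED TRIES DELAY CMD...
# Run CMD up to TRIES times, sleeping DELAY seconds between attempts, and
# succeed as soon as its standard output equals EXPECTED.
wait_for_output() {
    expected=$1
    tries=$2
    delay=$3
    shift 3
    while [ "$tries" -gt 0 ]; do
        if [ "$("$@" 2>/dev/null)" = "$expected" ]; then
            return 0
        fi
        tries=$(( tries - 1 ))
        sleep "$delay"
    done
    return 1
}

# Usage with the command from this article (up to 60 attempts, 10 s apart):
#   wait_for_output Succeeded 60 10 \
#       oc get sriovnetworknodestates/worker-2.ocp4.redhat.ren \
#       -n openshift-sriov-network-operator -o jsonpath='{.status.syncStatus}'
```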

cat << EOF > /data/install/sriov.attach.yaml

---

apiVersion: sriovnetwork.openshift.io/v1

kind: SriovNetwork

metadata:

name: intel-810-nic01-vf0-rt2

namespace: openshift-sriov-network-operator

spec:

resourceName: intel_810_nic01_rt2

networkNamespace: vbbu-demo

vlan: 5

---

apiVersion: sriovnetwork.openshift.io/v1

kind: SriovNetwork

metadata:

name: intel-810-nic01-vf1-rt2

namespace: openshift-sriov-network-operator

spec:

resourceName: intel_810_nic01_rt2

networkNamespace: vbbu-demo

vlan: 5

EOF

oc create -f /data/install/sriov.attach.yaml

# oc delete -f /data/install/sriov.attach.yaml

oc get net-attach-def -n vbbu-demo

# NAME AGE

# intel-810-nic01-vf0-rt2 2m19s

# intel-810-nic01-vf1-rt2 2m19s

nepdemo license file

Put the license file into a config map and inject it into the container.

For now, however, the license is copied directly into the image during the image build.

# load the license file into a config map

oc create configmap -n vbbu-demo license.for.nepdemo \

--from-file=license=./3496531EC238AD91DED6DBA5BD6B.lic

# to update the config map

oc create configmap -n vbbu-demo license.for.nepdemo --from-file=license=./3496531EC238AD91DED6DBA5BD6B.lic -o yaml --dry-run=client | oc apply -f -

create deployment for release/production

Finally, we start the service. It is a DPDK program, so we set resource requests and limits to get the cores pinned: equal integer CPU requests and limits place the pod in the Guaranteed QoS class, which is what allows the CPU manager to give it exclusive cores.

oc new-project vbbu-demo

oc project vbbu-demo

# kernel driver deployment

oc create serviceaccount svcacct-driver -n vbbu-demo

oc adm policy add-scc-to-user privileged -z svcacct-driver -n vbbu-demo

# oc adm policy add-scc-to-user anyuid -z mysvcacct -n vbbu-demo

16 cpu core config, auto convert

cpu core 1-16

cat << 'EOF' > /data/install/bbu.core.conf.sh

#!/bin/bash

sed -i 's/<systemThread>.*</<systemThread>2, 0, 0</' /root/flexran/bin/nr5g/gnb/l1/phycfg_xran.xml

sed -i 's/<timerThread>.*</<timerThread>1, 96, 0</' /root/flexran/bin/nr5g/gnb/l1/phycfg_xran.xml

sed -i 's/<FpgaDriverCpuInfo>.*</<FpgaDriverCpuInfo>3, 96, 0</' /root/flexran/bin/nr5g/gnb/l1/phycfg_xran.xml

sed -i 's/<FrontHaulCpuInfo>.*</<FrontHaulCpuInfo>3, 96, 0</' /root/flexran/bin/nr5g/gnb/l1/phycfg_xran.xml

sed -i 's/<radioDpdkMaster>.*</<radioDpdkMaster>2, 99, 0</' /root/flexran/bin/nr5g/gnb/l1/phycfg_xran.xml

sed -i "s/<BbuPoolThreadDefault_0_63>.*</<BbuPoolThreadDefault_0_63>0x$(to_hex '10,11,12,13,14,15')</" /root/flexran/bin/nr5g/gnb/l1/phycfg_xran.xml

sed -i 's/<xRANThread>.*</<xRANThread>9, 96, 0</' /root/flexran/bin/nr5g/gnb/l1/xrancfg_sub6.xml

sed -i "s/<xRANWorker>.*</<xRANWorker>0x$(to_hex '16'), 96, 0</" /root/flexran/bin/nr5g/gnb/l1/xrancfg_sub6.xml

sed -i "s/OAM_SHARED_CORE_BITMAP=.*/OAM_SHARED_CORE_BITMAP=$(to_dec '3,4')/" /etc/BBU_cfg/cu_cfg/gNodeB_CU_Configuration.cfg

sed -i "s/L3_SHARED_CORE_BITMAP=.*/L3_SHARED_CORE_BITMAP=$(to_dec '4,5')/" /etc/BBU_cfg/cu_cfg/gNodeB_CU_Configuration.cfg

sed -i "s/PDCP_SHRED_CORE_BITMAP=.*/PDCP_SHRED_CORE_BITMAP=$(to_dec '7,8')/" /etc/BBU_cfg/cu_cfg/gNodeB_CU_Configuration.cfg

sed -i "s/RRM_SHARED_CORE_BITMAP=.*/RRM_SHARED_CORE_BITMAP=$(to_dec '1,8')/" /etc/BBU_cfg/cu_cfg/gNodeB_CU_Configuration.cfg

sed -i "s/SON_SHARED_CORE_BITMAP=.*/SON_SHARED_CORE_BITMAP=$(to_dec '1,2')/" /etc/BBU_cfg/cu_cfg/gNodeB_CU_Configuration.cfg

# https://unix.stackexchange.com/questions/487451/sed-replace-a-pattern-between-a-pattern-and-the-end-of-file

sed -i "/<oam_shm_logger_cfg>/,\$s/<cpu_bitmap>.*</<cpu_bitmap>$(to_dec '7')</" /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i "/<shm_logger_cfg>/,\$s/<cpu_bitmap>.*</<cpu_bitmap>$(to_dec '7')</" /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<L3Params>/,$s/<core_no>.*</<core_no>2</' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<process_name>gnb_cu_son</,$s/<process_args>.* /<process_args>2 /' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<process_name>gnb_cu_rrm</,$s/<process_args>.* /<process_args>2 /' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<pdcp_index>0</,$s/<core_num_for_worker_thread>.*</<core_num_for_worker_thread>3</' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<pdcp_index>1</,$s/<core_num_for_worker_thread>.*</<core_num_for_worker_thread>3</' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<egtpu_instance>0</,$s/<core_num_of_worker_thread>.*</<core_num_of_worker_thread>6</' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<egtpu_instance>1</,$s/<core_num_of_worker_thread>.*</<core_num_of_worker_thread>6</' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<f1u_instance>0</,$s/<core_num_of_worker_thread>.*</<core_num_of_worker_thread>6</' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i '/<f1u_instance>1</,$s/<core_num_of_worker_thread>.*</<core_num_of_worker_thread>6</' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i 's/<core_num_mapping>.*</<core_num_mapping>4,4</' /etc/BBU_cfg/cu_cfg/Proprietary_gNodeB_CU_Data_Model.xml

sed -i 's/MAC_BINREAD_CORE_NUM=.*/MAC_BINREAD_CORE_NUM=1/' /etc/BBU_cfg/du_cfg/gNB_DU_Configuration.cfg

sed -i 's/RLC_BINREAD_CORE_NUM=.*/RLC_BINREAD_CORE_NUM=1/' /etc/BBU_cfg/du_cfg/gNB_DU_Configuration.cfg

sed -i 's/MAC_HP_CORE_NUM=.*/MAC_HP_CORE_NUM=5/' /etc/BBU_cfg/du_cfg/gNB_DU_Configuration.cfg

sed -i 's/RLC_MASTER_CORE_NUM=.*/RLC_MASTER_CORE_NUM=2/' /etc/BBU_cfg/du_cfg/gNB_DU_Configuration.cfg

sed -i "s/SHARED_CORE_BITMAP=.*/SHARED_CORE_BITMAP=$(to_dec '5,6')/" /etc/BBU_cfg/du_cfg/gNB_DU_Configuration.cfg

sed -i 's/RELAY_ADAPTER_RECVR_THREAD_CORE_NUM=.*/RELAY_ADAPTER_RECVR_THREAD_CORE_NUM=4/' /etc/BBU_cfg/du_cfg/gNB_DU_Configuration.cfg

sed -i '/<RlcProvsioningParams>/,$s/<CoreNumWorkerThread>.*</<CoreNumWorkerThread>7</' /etc/BBU_cfg/du_cfg/Proprietary_gNodeB_DU_Data_Model.xml

sed -i '/<RlclSystemParams>/,$s/<CoreNumWorkerThread>.*</<CoreNumWorkerThread>7</' /etc/BBU_cfg/du_cfg/Proprietary_gNodeB_DU_Data_Model.xml

sed -i '/<F1uProvisioningParams>/,$s/<numCoreWorkerThreads>.*</<numCoreWorkerThreads>7</' /etc/BBU_cfg/du_cfg/Proprietary_gNodeB_DU_Data_Model.xml

# --a=8 --t=8 --b=8

sed -i 's/\.\/gnb_cu_pdcp .* >/\.\/gnb_cu_pdcp --r=2 --a=8 --t=8 --m=2 --i=0 --b=8 --p=0 --s=50 --n=10 >/' /home/BaiBBU_XSS/BaiBBU_SXSS/gNB_app

EOF

oc delete configmap vbbu-core-config -n vbbu-demo

oc create configmap vbbu-core-config -n vbbu-demo --from-file=/data/install/bbu.core.conf.sh --save-config=true
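The script above calls `to_hex` and `to_dec` without defining them; presumably the startup script inside the image provides them before this file is sourced. As a sketch of what such helpers could look like, assuming they fold a comma-separated core list into a bitmask and print it in hexadecimal (without the `0x` prefix, which the script adds itself) or in decimal:

```shell
#!/bin/bash
# cores_to_mask LIST
# Fold a comma-separated list of core IDs into a single integer bitmask,
# setting bit N for every core N in the list.
cores_to_mask() {
    mask=0
    for core in $(echo "$1" | tr ',' ' '); do
        mask=$(( mask | (1 << core) ))
    done
    echo "$mask"
}

# Hypothetical stand-ins for the helpers used by bbu.core.conf.sh; the real
# ones in the image may differ, for example in letter case.
to_dec() { cores_to_mask "$1"; }
to_hex() { printf '%x\n' "$(cores_to_mask "$1")"; }

to_hex '10,11,12,13,14,15'   # prints fc00
to_dec '3,4'                 # prints 24
```

Under this reading, `BbuPoolThreadDefault_0_63` becomes `0xfc00` (cores 10 to 15) and `OAM_SHARED_CORE_BITMAP` becomes 24 (cores 3 and 4).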

create vbbu deployment

oc adm taint nodes worker-2.ocp4.redhat.ren vbbu=realtime:NoSchedule

# oc adm taint nodes worker-2.ocp4.redhat.ren vbbu=realtime:NoSchedule-

oc get nodes -o json | jq '.items[] | .metadata.name, (.spec.taints | tostring )' | paste - -

# "master-0" "null"

# "worker-1" "null"

# "worker-2.ocp4.redhat.ren" "[{\"effect\":\"NoSchedule\",\"key\":\"vbbu\",\"value\":\"realtime\"}]"

export REG_TMP='tmp-registry.ocp4.redhat.ren:5443'

# the pod with vbbu container and dev container

# later, it will change to deployment

cat << EOF > /data/install/vran.intel.flexran.yaml

---

apiVersion: "k8s.cni.cncf.io/v1"

kind: NetworkAttachmentDefinition

metadata:

name: host-device-vbbu-demo

spec:

config: '{

"cniVersion": "0.3.1",

"type": "host-device",

"device": "ens2f2",

"ipam": {

"type": "static",

"addresses": [

{

"address": "192.168.12.20/24"

},

{

"address": "192.168.12.19/24"

}

]

}

}'

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: flexran-binary-release-deployment

labels:

app: flexran-binary-release-deployment

spec:

replicas: 1

selector:

matchLabels:

app: flexran-binary-release

template:

metadata:

labels:

app: flexran-binary-release

name: flexran-binary-release

annotations:

k8s.v1.cni.cncf.io/networks: |-

[

{

"name": "host-device-vbbu-demo"

},

{

"name": "intel-810-nic01-vf0-rt2",

"mac": "00:11:22:33:44:66"

},

{

"name": "intel-810-nic01-vf1-rt2",

"mac": "00:11:22:33:44:67"

}

]

spec:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: "app"

operator: In

values:

- flexran-binary-release

topologyKey: "kubernetes.io/hostname"

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- worker-2.ocp4.redhat.ren

tolerations:

- key: "vbbu"

operator: "Exists"

effect: "NoSchedule"

serviceAccountName: svcacct-driver

containers:

- name: flexran-release-running

securityContext:

privileged: true

runAsUser: 0

# command: [ "/sbin/init" ]

command: [ "/bin/sh","-c","--" ]

args: [" /root/systemd/set_ip.sh ; cd /home/BaiBBU_XSS/tools/ ; ./XRAN_BBU start ; trap '{ cd /home/BaiBBU_XSS/tools/ ; ./XRAN_BBU stop ; exit 255; }' SIGINT SIGTERM ERR EXIT ; sleep infinity ; "]

tty: true

stdin: true

image: ${REG_TMP}/nepdemo/flexran_vdu:flexran-20.11-dpdk-19.11-ocp4.9.5-ubi-8.4-core-conf

imagePullPolicy: Always

resources:

requests:

cpu: 16

memory: "48Gi"

hugepages-1Gi: 24Gi

limits:

cpu: 16

memory: "48Gi"

hugepages-1Gi: 24Gi

volumeMounts:

- name: hugepage

mountPath: /hugepages

readOnly: False

- name: varrun

mountPath: /var/run/dpdk

readOnly: false

- name: lib-modules

mountPath: /lib/modules

- name: src

mountPath: /usr/src

- name: dev

mountPath: /dev

- name: cache-volume

mountPath: /dev/shm

- name: license-volume

mountPath: /nepdemo/lic

- mountPath: /root/bbu.core.conf.sh

subPath: bbu.core.conf.sh

name: vbbu-core-config-volume

volumes:

- name: hugepage

emptyDir:

medium: HugePages

- name: varrun

emptyDir: {}

- name: lib-modules

hostPath:

path: /lib/modules

- name: src

hostPath:

path: /usr/src

- name: dev

hostPath:

path: "/dev"

- name: cache-volume

emptyDir:

medium: Memory

sizeLimit: 1Gi

- name: license-volume

configMap:

name: license.for.nepdemo

items:

- key: license

path: license.lic

- name: vbbu-core-config-volume

configMap:

defaultMode: 420

name: vbbu-core-config

---

apiVersion: v1

kind: Service

metadata:

name: vbbu-http

spec:

ports:

- name: http

port: 80

targetPort: 80

nodePort: 31071

type: NodePort

selector:

app: flexran-binary-release

---

apiVersion: route.openshift.io/v1

kind: Route

metadata:

name: vbbu-http

spec:

port:

targetPort: http

to:

kind: Service

name: vbbu-http

---

EOF

oc create -n vbbu-demo -f /data/install/vran.intel.flexran.yaml

# oc delete -n vbbu-demo -f /data/install/vran.intel.flexran.yaml

# below, used for debug

POD_ID=$(oc get pod -n vbbu-demo -o json | jq -r '.items[].metadata.name | select(. | contains("flexran-binary-release"))' )

oc rsh -c flexran-release-running ${POD_ID}

# below runs the command in the pod

bash

tail -100 /root/flexran/bin/nr5g/gnb/l1/Phy.log

# ......

# ==== l1app Time: 315002 ms NumCarrier: 1 NumBbuCores: 6. Tti2Tti Time: [ 0.00.. 0.00.. 0.00] usces

# ==== [o-du0][rx 17639351 pps 55999 kbps 1585561][tx 58086520 pps 184408 kbps 5133280] [on_time 17639351 early 0 late 0 corrupt 0 pkt_dupl 8 Total 17639351]

# Pusch[ 64000 63999 64000 64000 0 0 0 0] SRS[ 0]

# -------------------------------------------------------------------------------------------------------------------------------------------------------

# Cell DL Tput UL Tput UL BLER SRS SNR MIMO PCI

# 0 (Kbps) 1,329,880 34,314 / 36,537 0.00% 0 Db 4T4R 21

# -------------------------------------------------------------------------------------------------------------------------------------------------------

# Core Utilization [6 BBU core(s)]:

# Core Id: 10 11 12 13 14 15 Avg

# Util %: 14 20 18 17 16 21 17.67

# Xran Id: 9 16 Master Core Util: 61 %

# -------------------------------------------------------------------------------------------------------------------------------------------------------
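The `Core Id` and `Util %` lines in this output can be scanned automatically to spot overloaded BBU cores. The following is a minimal sketch that assumes the two lines keep the layout shown above; the function name `busy_cores` and the threshold are illustrative.

```shell
#!/bin/bash
# busy_cores LIMIT
# Read Phy.log text on stdin and print "core utilization" for every BBU core
# whose utilization is strictly above LIMIT percent.
busy_cores() {
    awk -v limit="$1" '
        /Core Id:/ {
            # Remember the integer core IDs; the trailing "Avg" is skipped.
            n = 0
            for (i = 1; i <= NF; i++)
                if ($i ~ /^[0-9]+$/) id[++n] = $i
        }
        /Util %:/ {
            # Pair each integer utilization with the core ID in the same
            # position; the fractional average is skipped.
            k = 0
            for (i = 1; i <= NF; i++)
                if ($i ~ /^[0-9]+$/) {
                    k++
                    if (k <= n && $i + 0 > limit) print id[k] " " $i
                }
        }
    '
}

# Usage inside the pod:
#   tail -100 /root/flexran/bin/nr5g/gnb/l1/Phy.log | busy_cores 80
```

With the sample output above and a limit of 19, the function reports cores 11 and 15.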

top to show thread and core

When tuning, especially when assigning core bindings, we often need to see which threads exist, which cores they occupy, and which cores are busy, so that we can rebalance. Fortunately, top can do this by itself: run top with -H and enable the field that shows the core each thread last ran on.

sudo -i

# /root/.config/procps/toprc

mkdir -p /root/wzh

cat << 'EOF' > /root/wzh/.toprc

top's Config File (Linux processes with windows)

Id:i, Mode_altscr=0, Mode_irixps=1, Delay_time=3.0, Curwin=0

Def fieldscur=ķ&')*+,-./01258<>?ABCFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghij

winflags=193844, sortindx=18, maxtasks=0, graph_cpus=0, graph_mems=0

summclr=1, msgsclr=1, headclr=3, taskclr=1

Job fieldscur=(Ļ@<)*+,-./012568>?ABCFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghij

winflags=193844, sortindx=0, maxtasks=0, graph_cpus=0, graph_mems=0

summclr=6, msgsclr=6, headclr=7, taskclr=6

Mem fieldscur=<MBND34&'()*+,-./0125689FGHIJKLOPQRSTUVWXYZ[\]^_`abcdefghij

winflags=193844, sortindx=21, maxtasks=0, graph_cpus=0, graph_mems=0

summclr=5, msgsclr=5, headclr=4, taskclr=5

Usr fieldscur=)+,-./1234568;<=>?@ABCFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghij

winflags=193844, sortindx=3, maxtasks=0, graph_cpus=0, graph_mems=0

summclr=3, msgsclr=3, headclr=2, taskclr=3

Fixed_widest=0, Summ_mscale=1, Task_mscale=0, Zero_suppress=0

EOF

HOME="/root/wzh/" top -H
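For a non-interactive summary, the same information can be taken from `ps`, whose `psr` column reports the processor each thread last ran on. The sketch below counts threads per core; the function name `threads_per_core` is made up for this example, and it assumes `ps` from procps is available where it runs.

```shell
#!/bin/bash
# threads_per_core
# Read one core number per line on stdin and print "core count" pairs,
# sorted by core number.
threads_per_core() {
    awk '{ n[$1]++ } END { for (c in n) print c " " n[c] }' | sort -n
}

# Usage: list every thread on the system and count them per core.
#   ps -eLo psr= | threads_per_core
# To see which threads sit on a given core, the full listing helps:
#   ps -eLo pid,tid,psr,pcpu,comm
```

A core that should be dedicated to a single pinned thread but shows several entries is a good place to start rebalancing.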