Openshift4 慢慢走 (OpenShift4, Step by Step)

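This repository holds the author's technical notes from day-to-day systems work. The author gets plenty of hands-on opportunities, including many PoCs, validation of new systems and solution exploration, covering OS installation, IaaS and PaaS platform builds, middleware validation, and application development and testing. Many of the procedures are fairly involved, so they need one central place for notes, both to keep them organized and to share them online right away.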

The author has also built a Chrome extension that shows the Bing.com image of the day on the new tab page. It is simple and good-looking; feel free to use it.

The author also has many video demos; you are welcome to subscribe to the author's channel.

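License

The code in this book is licensed under the GNU GPL v3.

Copyright Notice

This book is licensed under CC-BY-NC-SA 4.0. Commercial reproduction requires permission from the author, wangzheng422, and any reproduction must credit the source. The author reserves the right of final interpretation and the right to pursue legal action.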

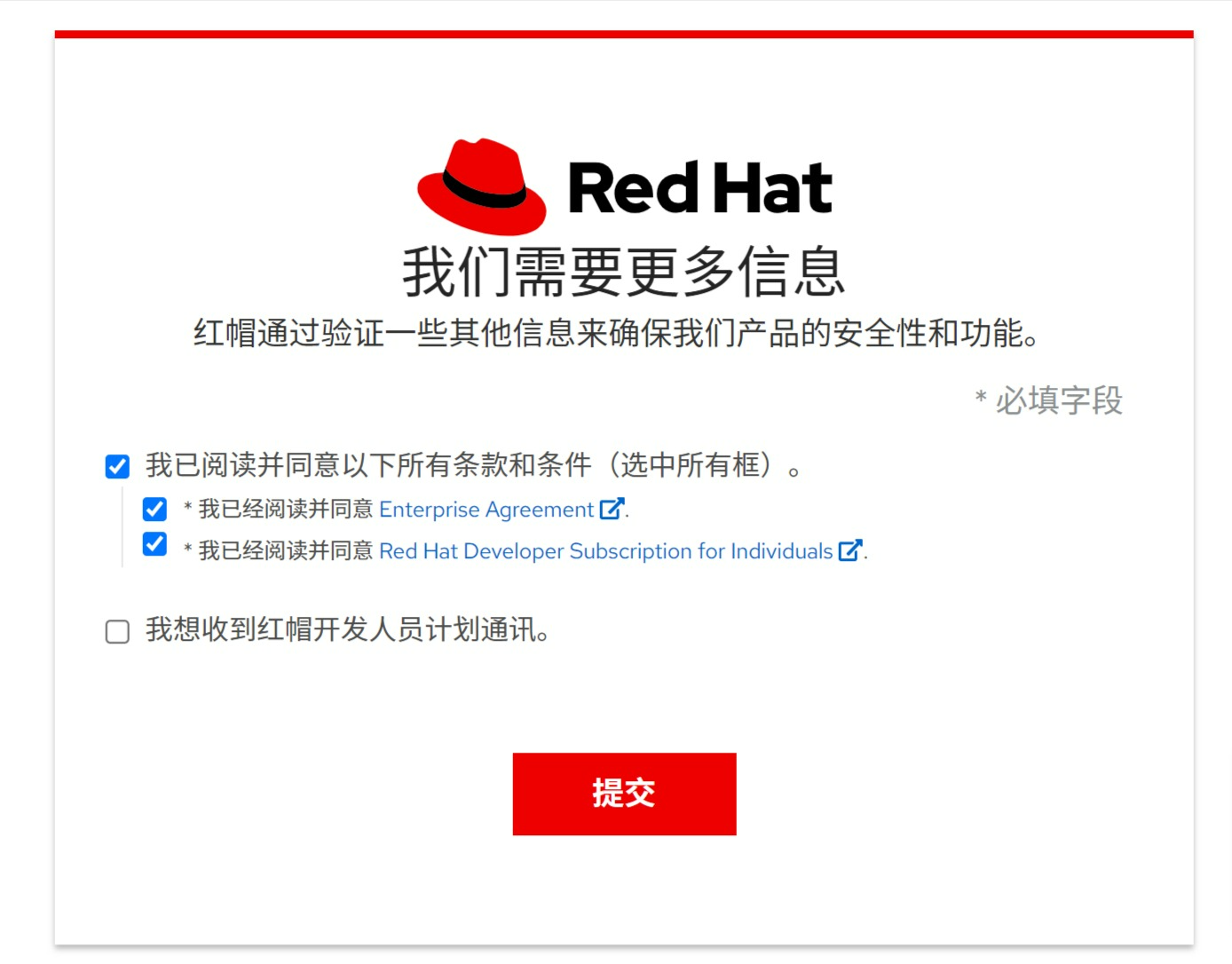

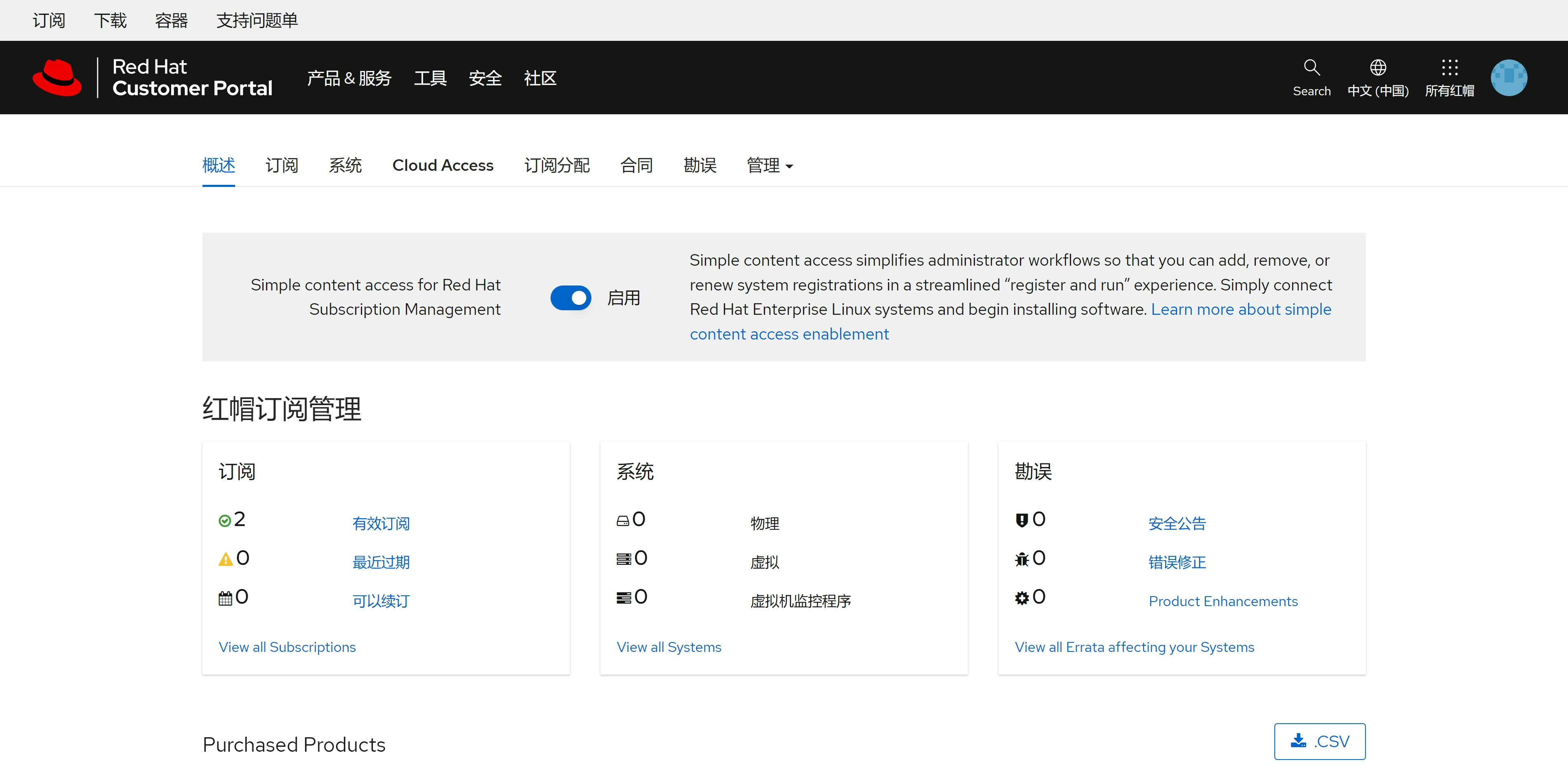

Get an OpenShift4 download key for free

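4.6 Offline Installation: Preparing the Media

The steps in this article are best run on a VPS in the US; package the results and transfer them back afterwards.

The offline installation source is prepared as follows:

- Prepare the operator hub catalog; what we mainly need from it is its date information.

- Run the script to prepare the offline installation source.

Environment preparation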

# on vultr

yum -y install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

yum -y install htop byobu ethtool dstat

rm -rf /data/ocp4

mkdir -p /data/ocp4

cd /data/ocp4

yum -y install podman docker-distribution pigz skopeo docker buildah jq python3-pip git python36

pip3 install yq

# https://blog.csdn.net/ffzhihua/article/details/85237411

# wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm

# rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem

systemctl enable --now docker

# systemctl start docker

docker login -u ****** -p ******** registry.redhat.io

docker login -u ****** -p ******** registry.access.redhat.com

docker login -u ****** -p ******** registry.connect.redhat.com

podman login -u ****** -p ******** registry.redhat.io

podman login -u ****** -p ******** registry.access.redhat.com

podman login -u ****** -p ******** registry.connect.redhat.com

# to download the pull-secret.json, open following link

# https://cloud.redhat.com/openshift/install/metal/user-provisioned

cat << 'EOF' > /data/pull-secret.json

{"auths":{"cloud.openshift.com":*********************

EOF

cat << EOF >> /etc/hosts

127.0.0.1 registry.redhat.ren

EOF

# configure the registry

mkdir -p /etc/crts/ && cd /etc/crts

# https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.1/html/installation_guide/installing-codeready-workspaces-in-tls-mode-with-self-signed-certificates_crw

openssl genrsa -out /etc/crts/redhat.ren.ca.key 4096

openssl req -x509 \
  -new -nodes \
  -key /etc/crts/redhat.ren.ca.key \
  -sha256 \
  -days 36500 \
  -out /etc/crts/redhat.ren.ca.crt \
  -subj /CN="Local Red Hat Ren Signer" \
  -reqexts SAN \
  -extensions SAN \
  -config <(cat /etc/pki/tls/openssl.cnf \
    <(printf '[SAN]\nbasicConstraints=critical, CA:TRUE\nkeyUsage=keyCertSign, cRLSign, digitalSignature'))

openssl genrsa -out /etc/crts/redhat.ren.key 2048

openssl req -new -sha256 \
  -key /etc/crts/redhat.ren.key \
  -subj "/O=Local Red Hat Ren /CN=*.ocp4.redhat.ren" \
  -reqexts SAN \
  -config <(cat /etc/pki/tls/openssl.cnf \
    <(printf "\n[SAN]\nsubjectAltName=DNS:*.ocp4.redhat.ren,DNS:*.apps.ocp4.redhat.ren,DNS:*.redhat.ren\nbasicConstraints=critical, CA:FALSE\nkeyUsage=digitalSignature, keyEncipherment, keyAgreement, dataEncipherment\nextendedKeyUsage=serverAuth")) \
  -out /etc/crts/redhat.ren.csr

openssl x509 \
  -req \
  -sha256 \
  -extfile <(printf "subjectAltName=DNS:*.ocp4.redhat.ren,DNS:*.apps.ocp4.redhat.ren,DNS:*.redhat.ren\nbasicConstraints=critical, CA:FALSE\nkeyUsage=digitalSignature, keyEncipherment, keyAgreement, dataEncipherment\nextendedKeyUsage=serverAuth") \
  -days 36500 \
  -in /etc/crts/redhat.ren.csr \
  -CA /etc/crts/redhat.ren.ca.crt \
  -CAkey /etc/crts/redhat.ren.ca.key \
  -CAcreateserial -out /etc/crts/redhat.ren.crt

openssl x509 -in /etc/crts/redhat.ren.crt -text

/bin/cp -f /etc/crts/redhat.ren.ca.crt /etc/pki/ca-trust/source/anchors/

update-ca-trust extract

cd /data/ocp4

# systemctl stop docker-distribution

/bin/rm -rf /data/registry

mkdir -p /data/registry

cat << EOF > /etc/docker-distribution/registry/config.yml
version: 0.1
log:
  fields:
    service: registry
storage:
  cache:
    layerinfo: inmemory
  filesystem:
    rootdirectory: /data/registry
  delete:
    enabled: true
http:
  addr: :5443
  tls:
    certificate: /etc/crts/redhat.ren.crt
    key: /etc/crts/redhat.ren.key
compatibility:
  schema1:
    enabled: true
EOF

# systemctl restart docker

# systemctl enable docker-distribution

# systemctl restart docker-distribution

# podman login registry.redhat.ren:5443 -u a -p a

systemctl enable --now docker-distribution

operator hub catalog

mkdir -p /data/ocp4

cd /data/ocp4

export BUILDNUMBER=4.6.28

wget -O openshift-client-linux-${BUILDNUMBER}.tar.gz https://mirror.openshift.com/pub/openshift-v4/clients/ocp/${BUILDNUMBER}/openshift-client-linux-${BUILDNUMBER}.tar.gz

wget -O openshift-install-linux-${BUILDNUMBER}.tar.gz https://mirror.openshift.com/pub/openshift-v4/clients/ocp/${BUILDNUMBER}/openshift-install-linux-${BUILDNUMBER}.tar.gz

tar -xzf openshift-client-linux-${BUILDNUMBER}.tar.gz -C /usr/local/sbin/

tar -xzf openshift-install-linux-${BUILDNUMBER}.tar.gz -C /usr/local/sbin/

wget -O operator.sh https://raw.githubusercontent.com/wangzheng422/docker_env/dev/redhat/ocp4/4.6/scripts/operator.sh

bash operator.sh

# 2021.05.07.0344

Building the offline installation source

rm -rf /data/ocp4

mkdir -p /data/ocp4

cd /data/ocp4

# wget -O build.dist.sh https://raw.githubusercontent.com/wangzheng422/docker_env/dev/redhat/ocp4/4.6/scripts/build.dist.sh

# bash build.dist.sh

wget -O prepare.offline.content.sh https://raw.githubusercontent.com/wangzheng422/docker_env/dev/redhat/ocp4/4.6/scripts/prepare.offline.content.sh

# git clone https://github.com/wangzheng422/docker_env.git

# cd docker_env

# git checkout dev

# cp redhat/ocp4/4.6/scripts/prepare.offline.content.sh /data/ocp4/

# cd /data/ocp4

# rm -rf docker_env

bash prepare.offline.content.sh -v 4.6.28, -m 4.6 -h 2021.05.07.0344

output of mirror of images

Success

Update image: registry.redhat.ren:5443/ocp4/openshift4:4.6.5

Mirror prefix: registry.redhat.ren:5443/ocp4/openshift4

To use the new mirrored repository to install, add the following section to the install-config.yaml:

imageContentSources:
- mirrors:
  - registry.redhat.ren:5443/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-release
- mirrors:
  - registry.redhat.ren:5443/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-v4.0-art-dev

To use the new mirrored repository for upgrades, use the following to create an ImageContentSourcePolicy:

apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  name: example
spec:
  repositoryDigestMirrors:
  - mirrors:
    - registry.redhat.ren:5443/ocp4/openshift4
    source: quay.io/openshift-release-dev/ocp-release
  - mirrors:
    - registry.redhat.ren:5443/ocp4/openshift4
    source: quay.io/openshift-release-dev/ocp-v4.0-art-dev

########################################

##

Success

Update image: openshift/release:4.3.3

To upload local images to a registry, run:

oc image mirror --from-dir=/data/mirror_dir file://openshift/release:4.3.3* REGISTRY/REPOSITORY

download image for components

########################################

# your images

cd /data/ocp4/

export MIRROR_DIR='/data/install.image'

/bin/rm -rf ${MIRROR_DIR}

bash add.image.sh install.image.list ${MIRROR_DIR}

export MIRROR_DIR='/data/poc.image'

/bin/rm -rf ${MIRROR_DIR}

bash add.image.sh poc.image.list ${MIRROR_DIR}

########################################

# common function

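build_image_list() {
  VAR_INPUT_FILE=$1
  VAR_OUTPUT_FILE=$2
  VAR_OPERATOR=$3
  # newest bundle of this operator: match "<operator>." at the start of column 2
  # and use a version-aware sort, so that v1.10 ranks above v1.9
  VAR_FINAL=$(awk -v op="$VAR_OPERATOR" 'index($2, op ".") == 1 {print $2}' "$VAR_INPUT_FILE" | sort -uV | tail -1)
  echo "$VAR_FINAL"
  # append every image that belongs to that bundle
  awk -v b="$VAR_FINAL" '$2 == b {print $1}' "$VAR_INPUT_FILE" >> "$VAR_OUTPUT_FILE"
}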
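The selection logic in `build_image_list` can be tried in isolation. The sketch below is a standalone, hypothetical re-implementation (`latest_bundle_images` is not part of the author's scripts). It assumes each line of an image list is `<image> <bundle>`, with `<bundle>` shaped like `<operator>.v<version>`; that format is inferred from the awk/sed pipeline in this section, not confirmed. It shows why a version-aware `sort -V` matters when picking the newest bundle.

```shell
# Hypothetical helper: latest_bundle_images LIST_FILE OPERATOR
# Assumed line format: "<image> <operator>.v<version>"
latest_bundle_images() {
  local list_file=$1 operator=$2 latest
  # keep bundles that start with exactly "<operator>." and take the highest
  # one by version sort (lexical sort would put v1.9.0 after v1.10.0)
  latest=$(awk -v op="$operator" 'index($2, op ".") == 1 { print $2 }' "$list_file" \
    | sort -uV | tail -n 1)
  [[ -n $latest ]] || return 1
  # print every image that belongs to the selected bundle
  awk -v b="$latest" '$2 == b { print $1 }' "$list_file"
}

# demo with made-up sample data
sample=$(mktemp)
cat > "$sample" << 'EOF'
registry.example.com/amq/streams-operator:1.9 amq-streams.v1.9.0
registry.example.com/amq/streams-operator:1.10 amq-streams.v1.10.0
registry.example.com/amq/streams-kafka:1.10 amq-streams.v1.10.0
registry.example.com/amq/online-operator:1.7 amq-online.v1.7.1
EOF
latest_bundle_images "$sample" amq-streams
rm -f "$sample"
```

With the sample data it prints the two `:1.10` images; a lexical `sort | tail -1` would have selected the `v1.9.0` bundle instead.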

########################################

# redhat operator hub

export MIRROR_DIR='/data/redhat-operator'

/bin/rm -rf ${MIRROR_DIR}

/bin/rm -f /data/ocp4/mapping-redhat.list

wanted_operator_list=$(cat redhat-operator-image.list | awk '{if ($2) print $2;}' \
  | sed 's/\..*//g' | sort | uniq
)

while read -r line; do

build_image_list '/data/ocp4/redhat-operator-image.list' '/data/ocp4/mapping-redhat.list' $line

done <<< "$wanted_operator_list"

bash add.image.sh mapping-redhat.list ${MIRROR_DIR}

# /bin/cp -f pull.add.image.failed.list pull.add.image.failed.list.bak

# bash add.image.resume.sh pull.add.image.failed.list.bak ${MIRROR_DIR}

cd ${MIRROR_DIR%/*}

tar cf - "${MIRROR_DIR##*/}/" | pigz -c > "${MIRROR_DIR##*/}.tgz"

# to load image back

bash add.image.load.sh '/data/redhat-operator' 'registry.redhat.ren:5443'
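The archive step uses two bash parameter expansions to split `MIRROR_DIR` into its parent directory and its last path component. A minimal standalone demo; the variable name `demo_dir` is only for illustration, so it does not clobber the real `MIRROR_DIR`:

```shell
demo_dir='/data/redhat-operator'

parent_dir=${demo_dir%/*}   # remove the shortest trailing "/*"  -> /data
base_name=${demo_dir##*/}   # remove the longest leading "*/"    -> redhat-operator

echo "cd ${parent_dir}"
echo "tar cf - ${base_name}/ | pigz -c > ${base_name}.tgz"
```

Note that a trailing slash (`/data/redhat-operator/`) makes `${demo_dir##*/}` expand to an empty string, so keep `MIRROR_DIR` without one.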

######################################

# certified operator hub

export MIRROR_DIR='/data/certified-operator'

/bin/rm -rf ${MIRROR_DIR}

/bin/rm -f /data/ocp4/mapping-certified.list

wanted_operator_list=$(cat certified-operator-image.list | awk '{if ($2) print $2;}' \
  | sed 's/\..*//g' | sort | uniq
)

while read -r line; do

build_image_list '/data/ocp4/certified-operator-image.list' '/data/ocp4/mapping-certified.list' $line

done <<< "$wanted_operator_list"

bash add.image.sh mapping-certified.list ${MIRROR_DIR}

# /bin/cp -f pull.add.image.failed.list pull.add.image.failed.list.bak

# bash add.image.resume.sh pull.add.image.failed.list.bak ${MIRROR_DIR}

cd ${MIRROR_DIR%/*}

tar cf - "${MIRROR_DIR##*/}/" | pigz -c > "${MIRROR_DIR##*/}.tgz"

# bash add.image.sh mapping-certified.txt

#######################################

# community operator hub

export MIRROR_DIR='/data/community-operator'

/bin/rm -rf ${MIRROR_DIR}

/bin/rm -f /data/ocp4/mapping-community.list

wanted_operator_list=$(cat community-operator-image.list | awk '{if ($2) print $2;}' \
  | sed 's/\..*//g' | sort | uniq
)

while read -r line; do

build_image_list '/data/ocp4/community-operator-image.list' '/data/ocp4/mapping-community.list' $line

done <<< "$wanted_operator_list"

bash add.image.sh mapping-community.list ${MIRROR_DIR}

# /bin/cp -f pull.add.image.failed.list pull.add.image.failed.list.bak

# bash add.image.resume.sh pull.add.image.failed.list.bak ${MIRROR_DIR}

cd ${MIRROR_DIR%/*}

tar cf - "${MIRROR_DIR##*/}/" | pigz -c > "${MIRROR_DIR##*/}.tgz"

# bash add.image.sh mapping-community.txt

# to load image back

bash add.image.load.sh '/data/community-operator' 'registry.redhat.ren:5443'

#####################################

# samples operator

export MIRROR_DIR='/data/is.samples'

/bin/rm -rf ${MIRROR_DIR}

bash add.image.sh is.openshift.list ${MIRROR_DIR}

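Image registry proxy

Preparing an offline image registry is a lot of work. Fortunately we have a host with Internet access, so we can use Nexus to build an image registry proxy, run through the PoC once in the online environment, and then obtain the offline images from the image registry proxy.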

- https://mtijhof.wordpress.com/2018/07/23/using-nexus-oss-as-a-proxy-cache-for-docker-images/

#####################################################

# init build the nexus fs

mkdir -p /data/ccn/nexus-image

chown -R 200 /data/ccn/nexus-image

# podman run -d -p 8082:8081 -p 8083:8083 -it --name nexus-image -v /data/ccn/nexus-image:/nexus-data:Z docker.io/sonatype/nexus3:3.29.0

podman run -d -p 8082:8081 -p 8083:8083 -it --name nexus-image -v /data/ccn/nexus-image:/nexus-data:Z docker.io/wangzheng422/imgs:nexus3-3.29.0-wzh

podman stop nexus-image

podman rm nexus-image

# get the admin password

cat /data/ccn/nexus-image/admin.password && echo

# 84091bcd-c82f-44a3-8b7b-dfc90f5b7da1

# open http://nexus.ocp4.redhat.ren:8082

# enable https

# https://blog.csdn.net/s7799653/article/details/105378645

# https://help.sonatype.com/repomanager3/system-configuration/configuring-ssl#ConfiguringSSL-InboundSSL-ConfiguringtoServeContentviaHTTPS

mkdir -p /data/install/tmp

cd /data/install/tmp

# export the certificate in PKCS#12 format

# you will be prompted for an export password; enter: password

openssl pkcs12 -export -out keystore.pkcs12 -inkey /etc/crts/redhat.ren.key -in /etc/crts/redhat.ren.crt

# overwrite (>) rather than append, so re-running does not duplicate the Dockerfile
cat << EOF > Dockerfile
FROM docker.io/sonatype/nexus3:3.29.0
USER root
COPY keystore.pkcs12 /keystore.pkcs12
RUN keytool -v -importkeystore -srckeystore keystore.pkcs12 -srcstoretype PKCS12 -destkeystore keystore.jks -deststoretype JKS -storepass password -srcstorepass password && \
    cp keystore.jks /opt/sonatype/nexus/etc/ssl/
USER nexus
EOF

buildah bud --format=docker -t docker.io/wangzheng422/imgs:nexus3-3.29.0-wzh -f Dockerfile .

buildah push docker.io/wangzheng422/imgs:nexus3-3.29.0-wzh

######################################################

# go to helper, update proxy setting for ocp cluster

cd /data/ocp4

bash image.registries.conf.sh nexus.ocp4.redhat.ren:8083

mkdir -p /etc/containers/registries.conf.d

/bin/cp -f image.registries.conf /etc/containers/registries.conf.d/

cd /data/ocp4

oc apply -f ./99-worker-container-registries.yaml -n openshift-config

oc apply -f ./99-master-container-registries.yaml -n openshift-config

######################################################

# dump the nexus image fs out

podman stop nexus-image

var_date=$(date '+%Y-%m-%d-%H%M')

echo $var_date

cd /data/ccn

tar cf - ./nexus-image | pigz -c > nexus-image.tgz

buildah from --name onbuild-container scratch

buildah copy onbuild-container nexus-image.tgz /

buildah umount onbuild-container

buildah commit --rm --format=docker onbuild-container docker.io/wangzheng422/nexus-fs:image-$var_date

# buildah rm onbuild-container

# rm -f nexus-image.tgz

buildah push docker.io/wangzheng422/nexus-fs:image-$var_date

echo "docker.io/wangzheng422/nexus-fs:image-$var_date"

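# The following version can serve as the initial image proxy; it contains NFS provisioning and the sample operator metadata. Happily, image streams do not seem to download the full images, only their metadata; the actual images are pulled when they are used.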

# docker.io/wangzheng422/nexus-fs:image-2020-12-26-1118

##################################################

## call nexus api to get image list

# https://community.sonatype.com/t/how-can-i-get-a-list-of-tags-for-a-docker-image-akin-to-the-docker-hub-list/3210

# https://help.sonatype.com/repomanager3/rest-and-integration-api/search-api

curl -k -u admin:84091bcd-c82f-44a3-8b7b-dfc90f5b7da1 -X GET 'http://nexus.ocp4.redhat.ren:8082/service/rest/v1/search?repository=registry.redhat.io'

curl -u admin:84091bcd-c82f-44a3-8b7b-dfc90f5b7da1 -X GET 'http://nexus.ocp4.redhat.ren:8082/service/rest/v1/components?repository=registry.redhat.io'

podman pull docker.io/anoxis/registry-cli

podman run --rm anoxis/registry-cli -l admin:84091bcd-c82f-44a3-8b7b-dfc90f5b7da1 -r https://nexus.ocp4.redhat.ren:8083

# https://github.com/rpardini/docker-registry-proxy

REPO_URL=nexus.ocp4.redhat.ren:8083

curl -k -s -X GET https://$REPO_URL/v2/_catalog \
  | jq '.repositories[]' \
  | sort \
  | xargs -I _ curl -s -k -X GET https://$REPO_URL/v2/_/tags/list

##################################################

## prepare for baidu disk

mkdir -p /data/ccn/baidu

cd /data/ccn

tar cf - ./nexus-image | pigz -c > /data/ccn/baidu/nexus-image.tgz

cd /data/ccn/baidu

split -b 20000m nexus-image.tgz nexus-image.tgz.

rm -f nexus-image.tgz

yum -y install python3-pip

pip3 install --user bypy

/root/.local/bin/bypy list

/root/.local/bin/bypy upload
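`split -b 20000m` cuts the archive into parts named `nexus-image.tgz.aa`, `nexus-image.tgz.ab`, and so on (presumably to stay under the netdisk's per-file size limit; that reason is an assumption). On the receiving side the parts only need to be concatenated in name order. The sketch below demonstrates the round trip on a small random file in a temporary directory, with sizes scaled down; it does not touch `/data`.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# 100 kB of random data stands in for nexus-image.tgz
head -c 100000 /dev/urandom > nexus-image.tgz

# same naming scheme as above, but 30 kB parts instead of 20000 MB
split -b 30000 nexus-image.tgz nexus-image.tgz.

# the glob expands in sorted order (aa, ab, ac, ad), which is the split order
cat nexus-image.tgz.?? > rejoined.tgz

cmp nexus-image.tgz rejoined.tgz && echo "reassembled file is identical"
```

For the real archive, `cat nexus-image.tgz.* > nexus-image.tgz` restores the original file, which can then be extracted as usual.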

upload to baidu disk

export BUILDNUMBER=4.6.28

mkdir -p /data/bypy

cd /data

tar -cvf - ocp4/ | pigz -c > /data/bypy/ocp.$BUILDNUMBER.tgz

tar -cvf - registry/ | pigz -c > /data/bypy/registry.$BUILDNUMBER.tgz

cd /data/bypy

# https://github.com/houtianze/bypy

yum -y install python3-pip

pip3 install --user bypy

/root/.local/bin/bypy list

/root/.local/bin/bypy upload

openshift 4.9 single node, assisted install mode, without dhcp, connected

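This article describes how to use the assisted service to install a single-node OpenShift 4 cluster. What makes it special: by default, OpenShift 4 expects a DHCP service on the network so that nodes get an IP address at boot, which they need in order to pull container images and talk to the assisted service to fetch their configuration. Most customer networks, however, do not allow DHCP, so here we use a currently hidden feature of the assisted service to deploy with static IPs.

The customer environment and requirements assumed in this lab:

- The lab network has no DHCP

- The lab network can reach the Internet

- There are 2 hosts in the lab environment

- Single-node OpenShift 4 (bare-metal mode) will be installed on 1 of them

Because of the author's lab constraints, KVM is used in place of bare metal.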

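The installation roughly goes like this:

- Start the helper VM and configure the DNS service on it

- Start the local assisted service

- Configure the assisted service

- Download the ISO from the assisted service

- Boot the KVM/bare-metal host from the ISO

- Finish the configuration in the assisted service and start the installation

- Watch and wait for the installation to finish

- Get the OpenShift 4 username, password and other details, then log in to the cluster.

Architecture diagram of this lab: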

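Deploy DNS

For a static-IP installation in assisted install mode, a DNS service must be deployed on the lab network. Because we are deploying single-node OpenShift, we only need to point the following 4 names at the same IP address. Of course, decide on the domain names in advance.

- api.ocp4s.redhat.ren

- api-int.ocp4s.redhat.ren

- *.apps.ocp4s.redhat.ren

- ocp4-sno.ocp4s.redhat.ren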
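All four names follow the pattern `<prefix>.<cluster name>.<base domain>` and resolve to the single node's IP. The hypothetical helper below only prints that name-to-IP list as a checklist; it is not configuration for any particular DNS server. The values `ocp4s`, `redhat.ren`, `ocp4-sno` and `172.21.6.13` are taken from this article's lab.

```shell
# Hypothetical helper: sno_dns_records CLUSTER BASE_DOMAIN NODE_NAME IP
sno_dns_records() {
  local cluster=$1 base_domain=$2 node_name=$3 ip=$4
  local zone="${cluster}.${base_domain}"
  # the format string is reused for each pair of arguments -> 4 lines
  printf '%s %s\n' \
    "api.${zone}" "$ip" \
    "api-int.${zone}" "$ip" \
    "*.apps.${zone}" "$ip" \
    "${node_name}.${zone}" "$ip"
}

sno_dns_records ocp4s redhat.ren ocp4-sno 172.21.6.13
```

Once the DNS server is set up, each name can be checked from the helper, e.g. with `dig +short api.ocp4s.redhat.ren`; for the wildcard, query any name under `apps`, such as `console-openshift-console.apps.ocp4s.redhat.ren`.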

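Deploy the assisted install service

The assisted install service comes in 2 versions: the hosted one on cloud.redhat.com and a local version. Both have the same features; since we need to customize it, we use the local version.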

# https://github.com/openshift/assisted-service/blob/master/docs/user-guide/assisted-service-on-local.md

# https://github.com/openshift/assisted-service/tree/master/deploy/podman

podman version

# Version: 3.4.2

# API Version: 3.4.2

# Go Version: go1.16.12

# Built: Wed Feb 2 07:59:28 2022

# OS/Arch: linux/amd64

mkdir -p /data/assisted-service/

cd /data/assisted-service/

export http_proxy="http://192.168.195.54:5085"

export https_proxy=${http_proxy}

wget https://raw.githubusercontent.com/openshift/assisted-service/master/deploy/podman/configmap.yml

wget https://raw.githubusercontent.com/openshift/assisted-service/master/deploy/podman/pod.yml

unset http_proxy

unset https_proxy

sed -i 's/ SERVICE_BASE_URL:.*/ SERVICE_BASE_URL: "http:\/\/172.21.6.103:8090"/' configmap.yml

# start the local assisted service

podman play kube --configmap configmap.yml pod.yml

# use the following commands to stop/remove the local assisted service

cd /data/assisted-service/

podman play kube --down pod.yml

⚠️Note: the local assisted service downloads ISOs for several versions from mirror.openshift.com, about 6 GB in total. Wait for the download to finish.

podman exec assisted-installer-image-service du -h /data

# 6.3G /data

Once it is running, open the following URL

http://172.21.6.103:8080

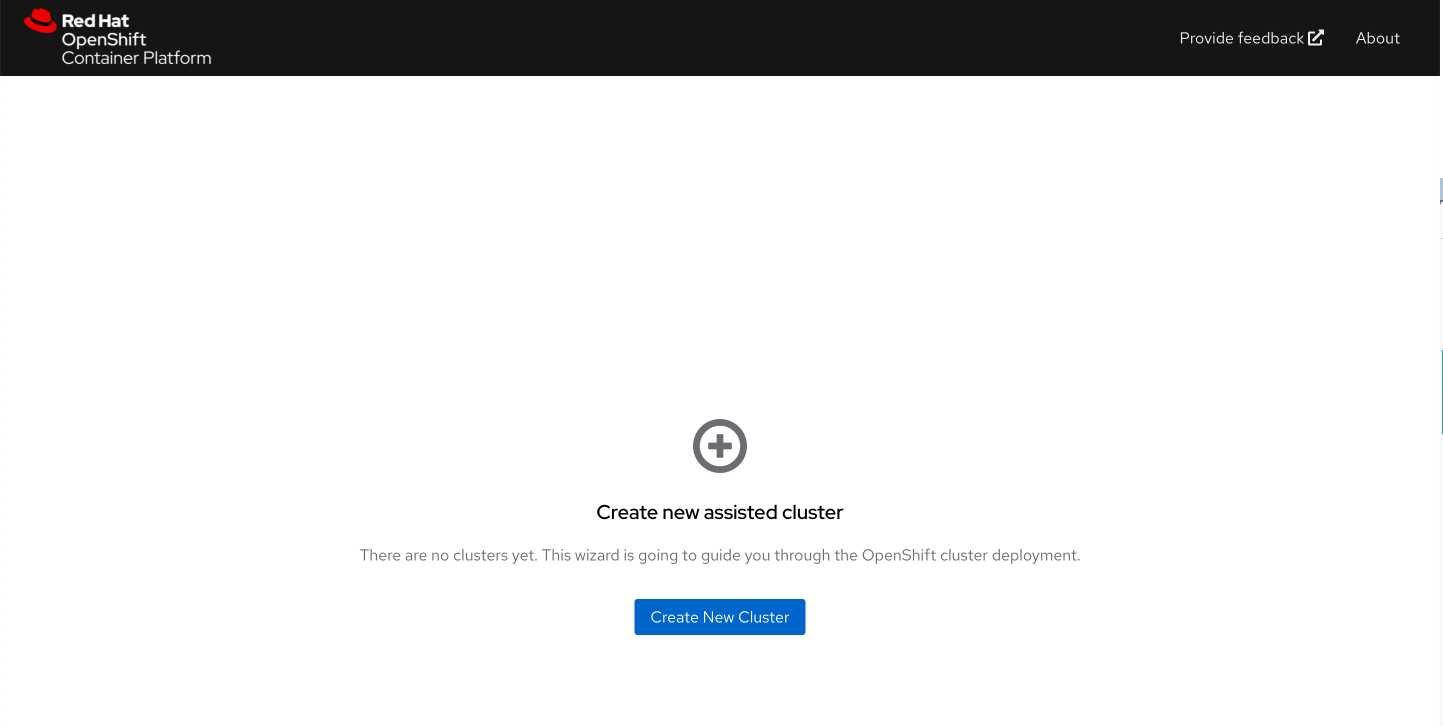

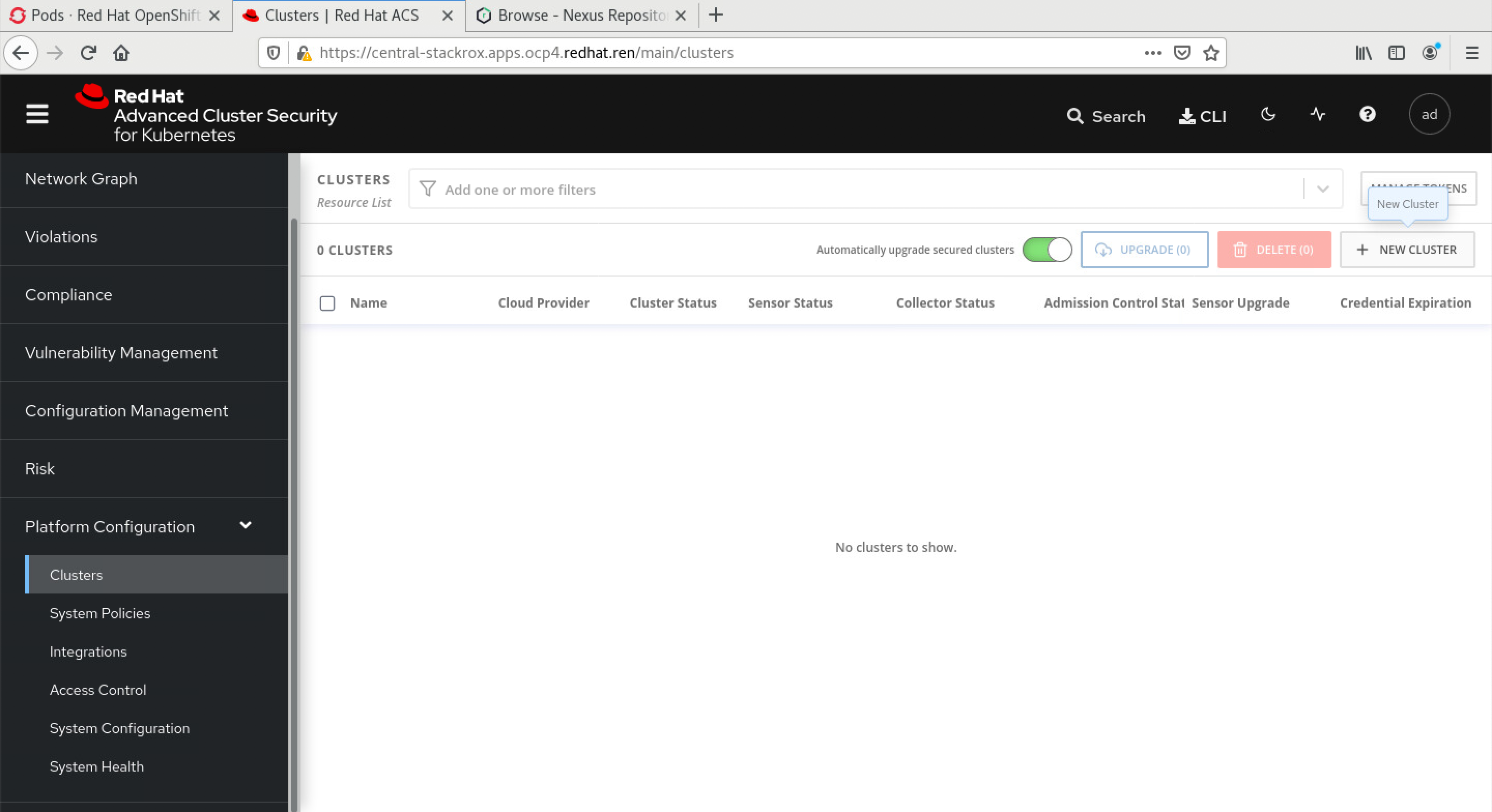

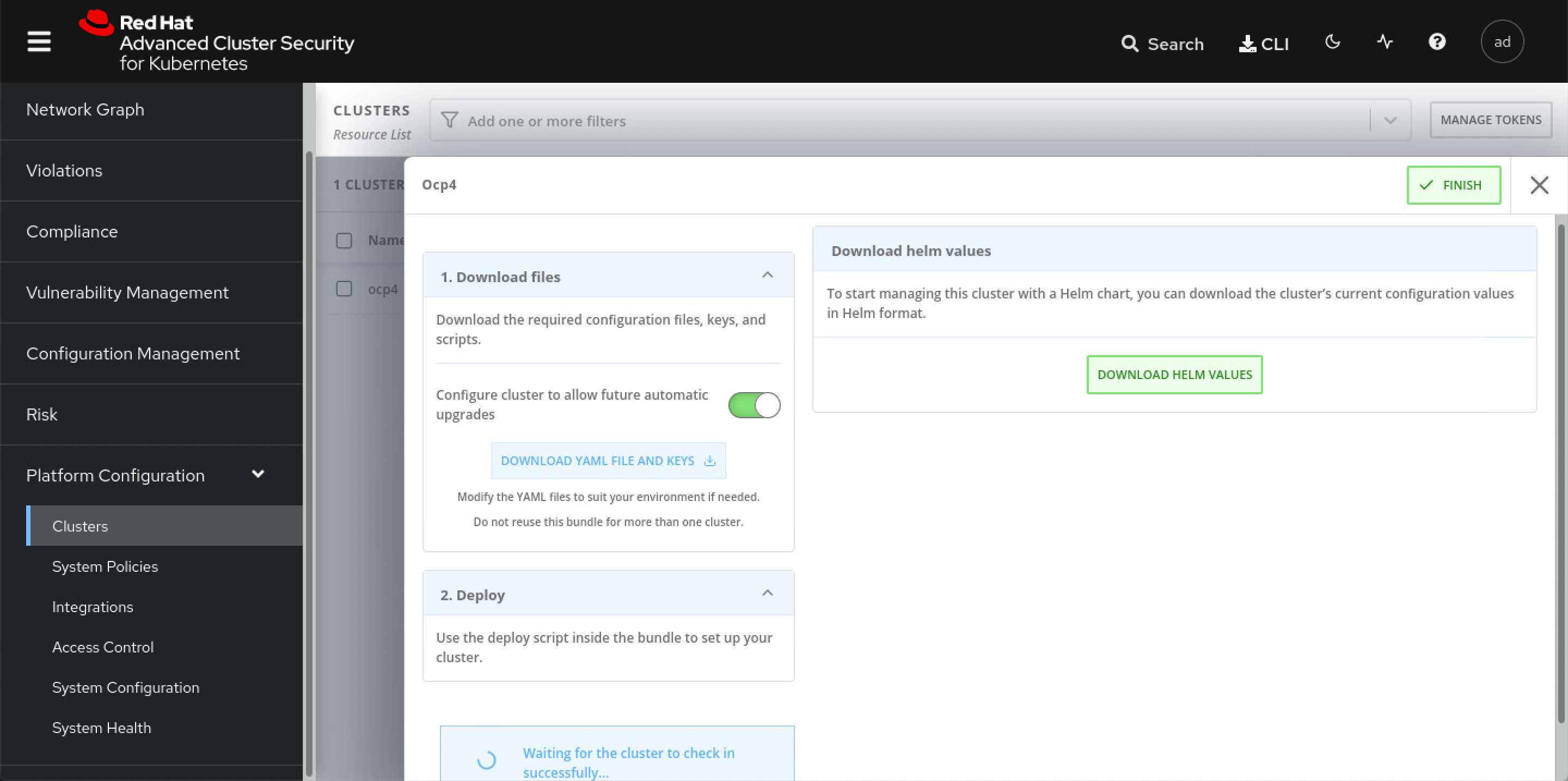

创建cluster

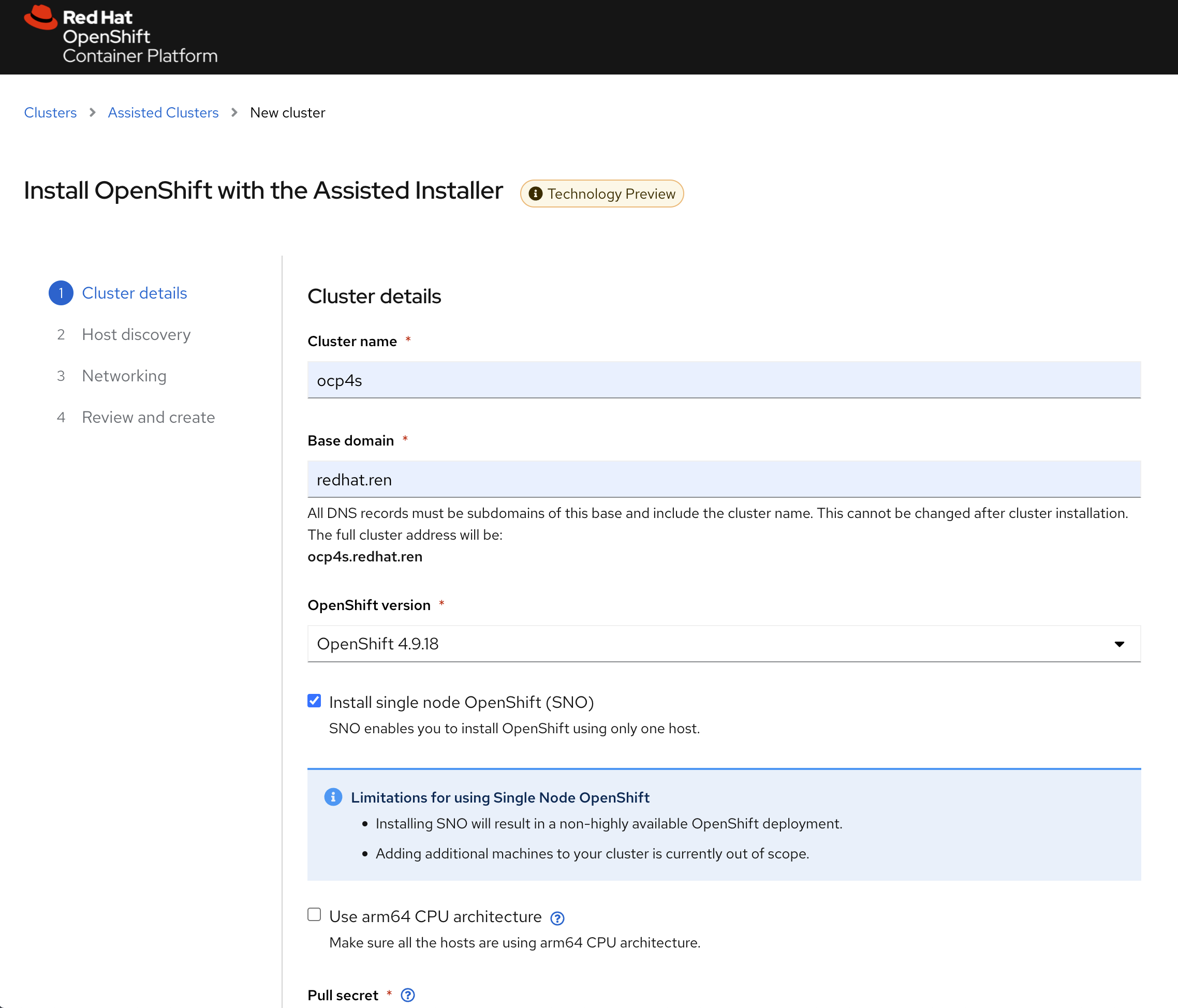

访问本地的assist install service, 创建一个cluster

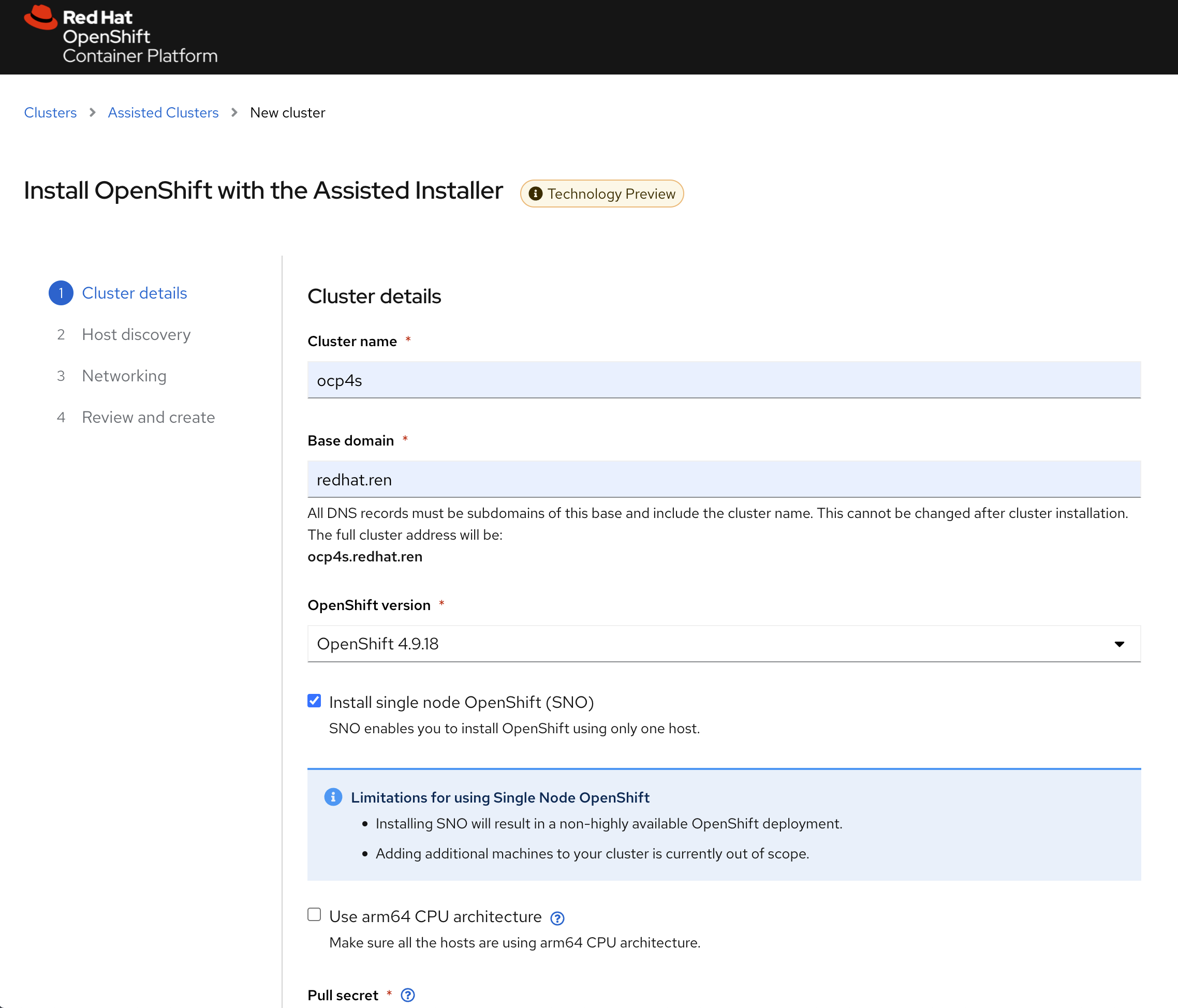

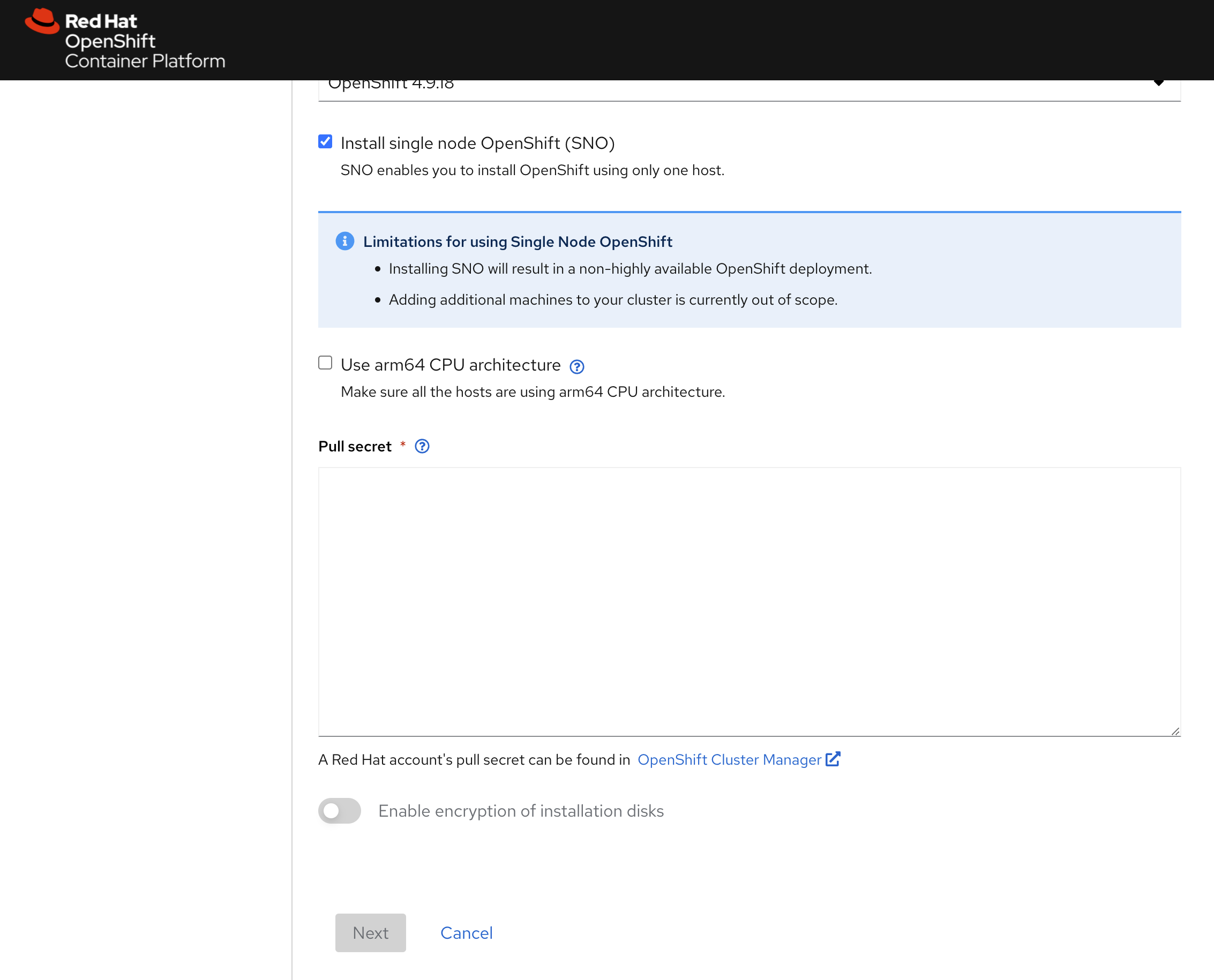

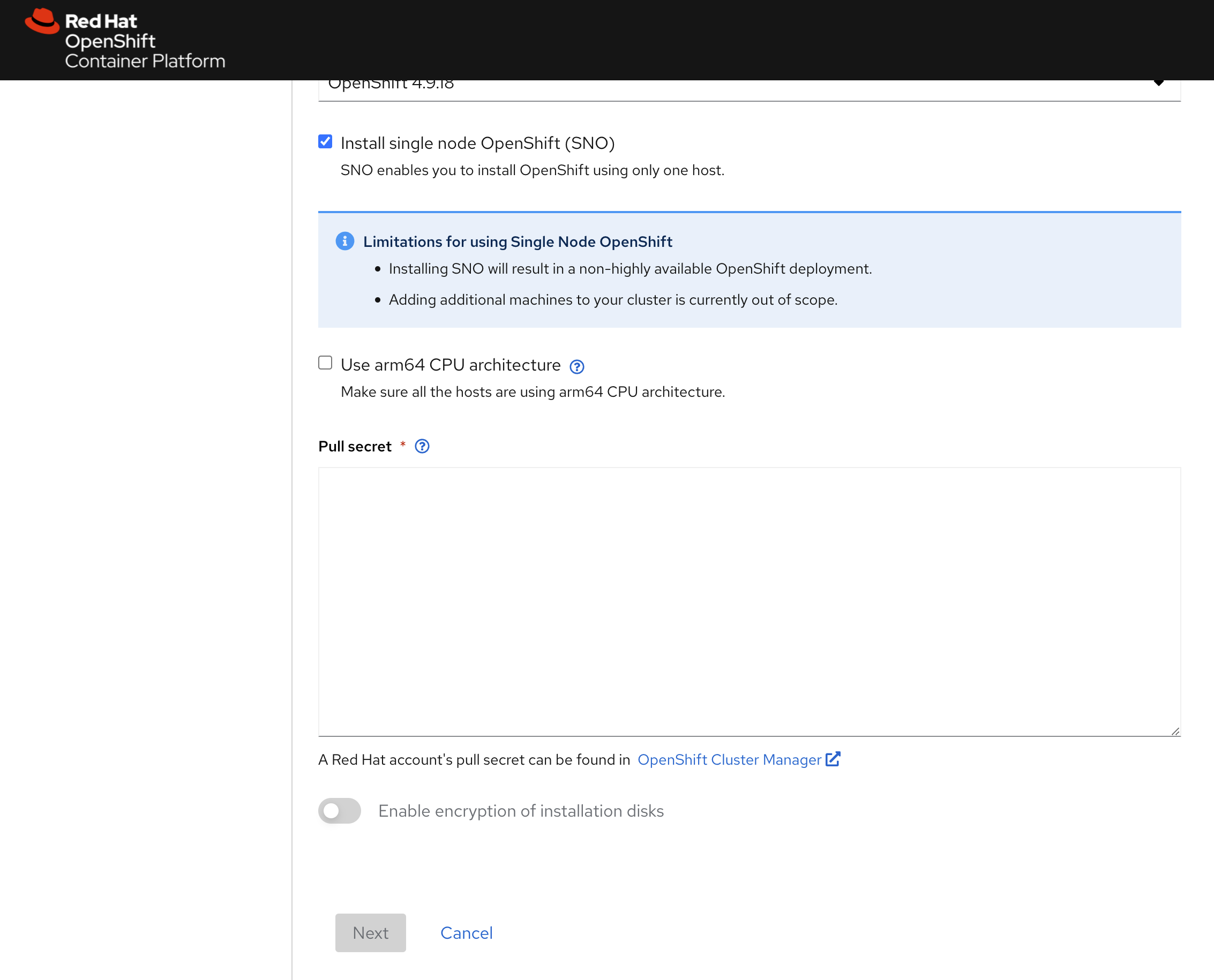

Configure the basic cluster information

Fill in your own pull-secret and click Next

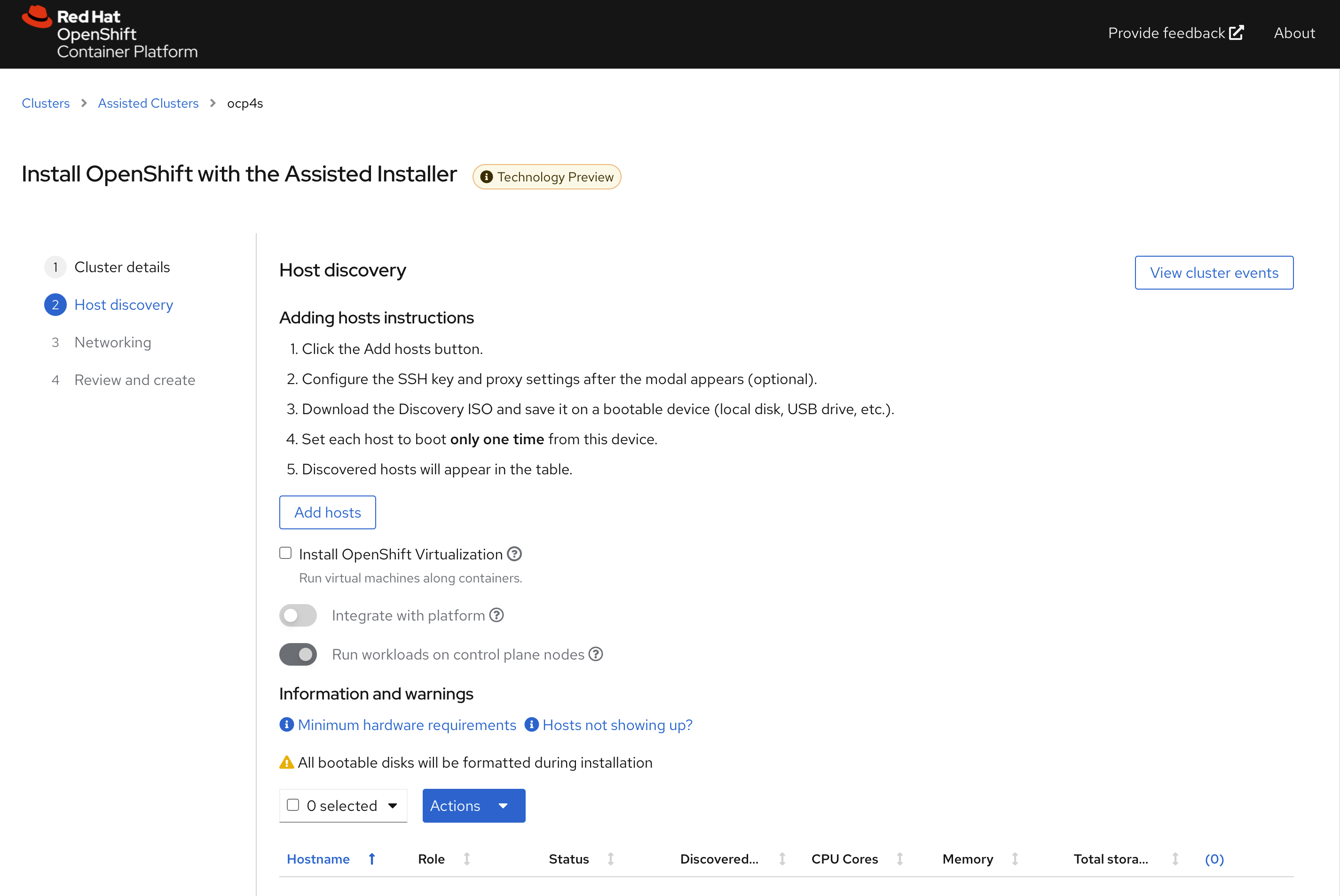

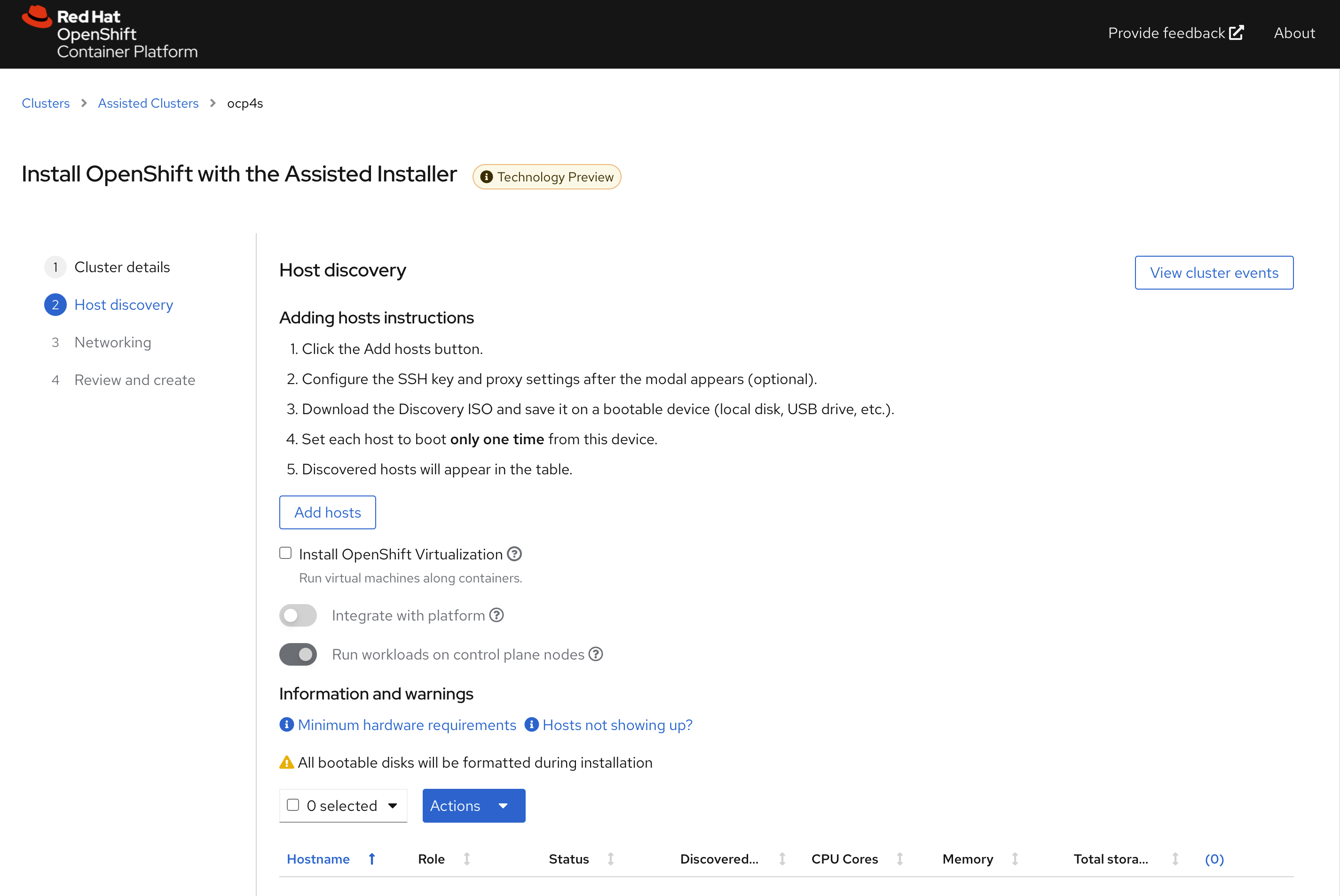

On the next page, click Add host

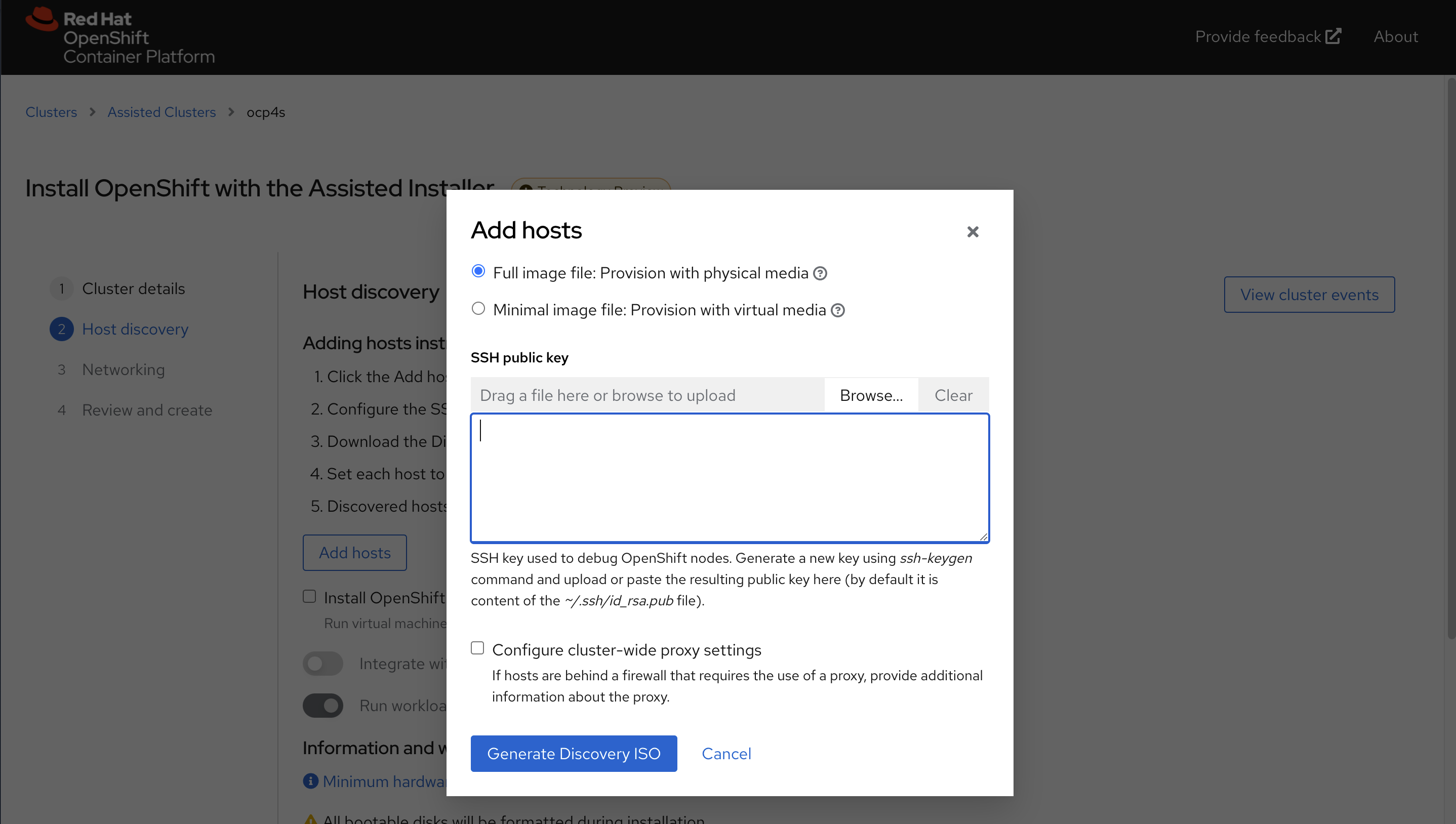

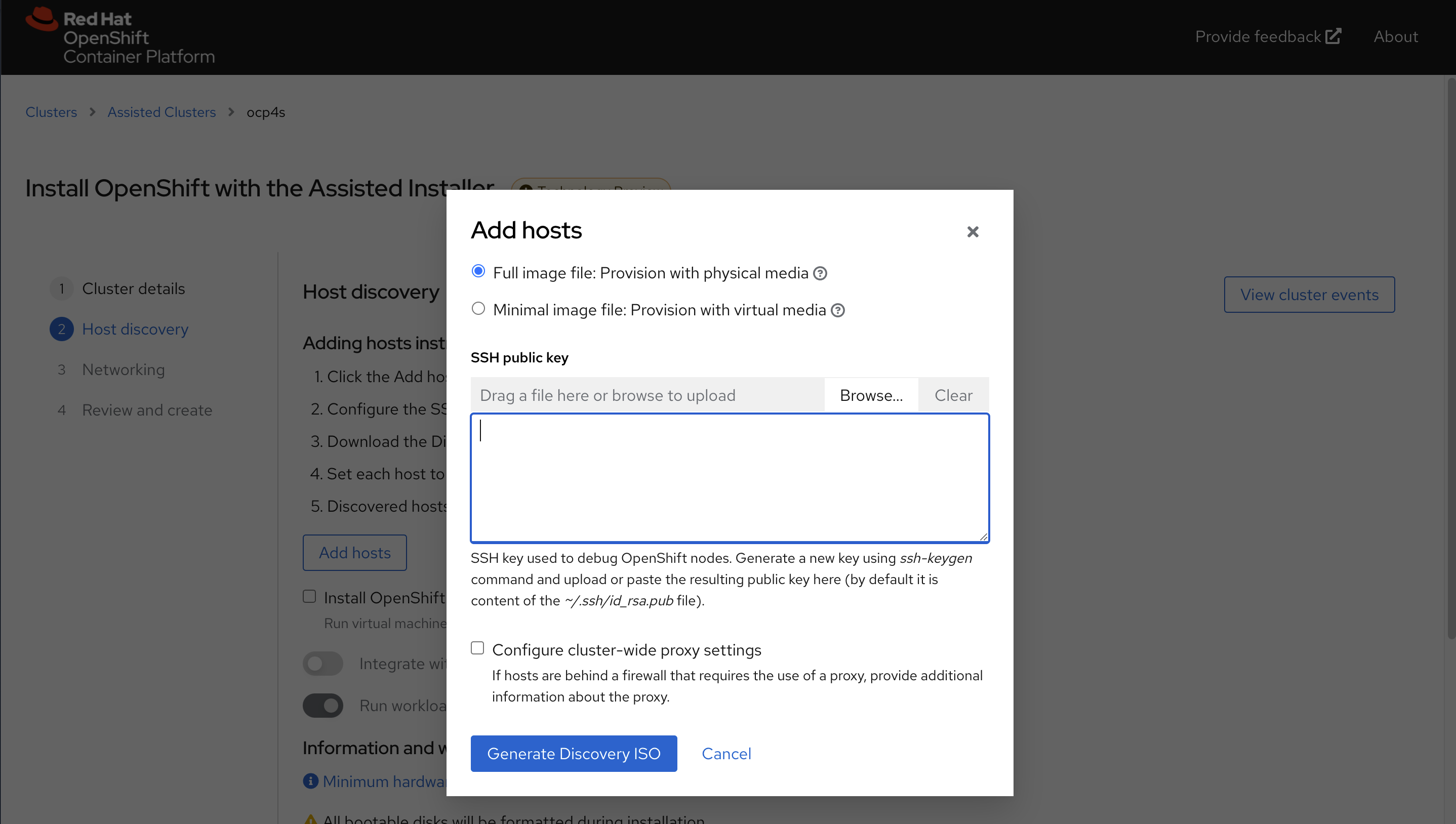

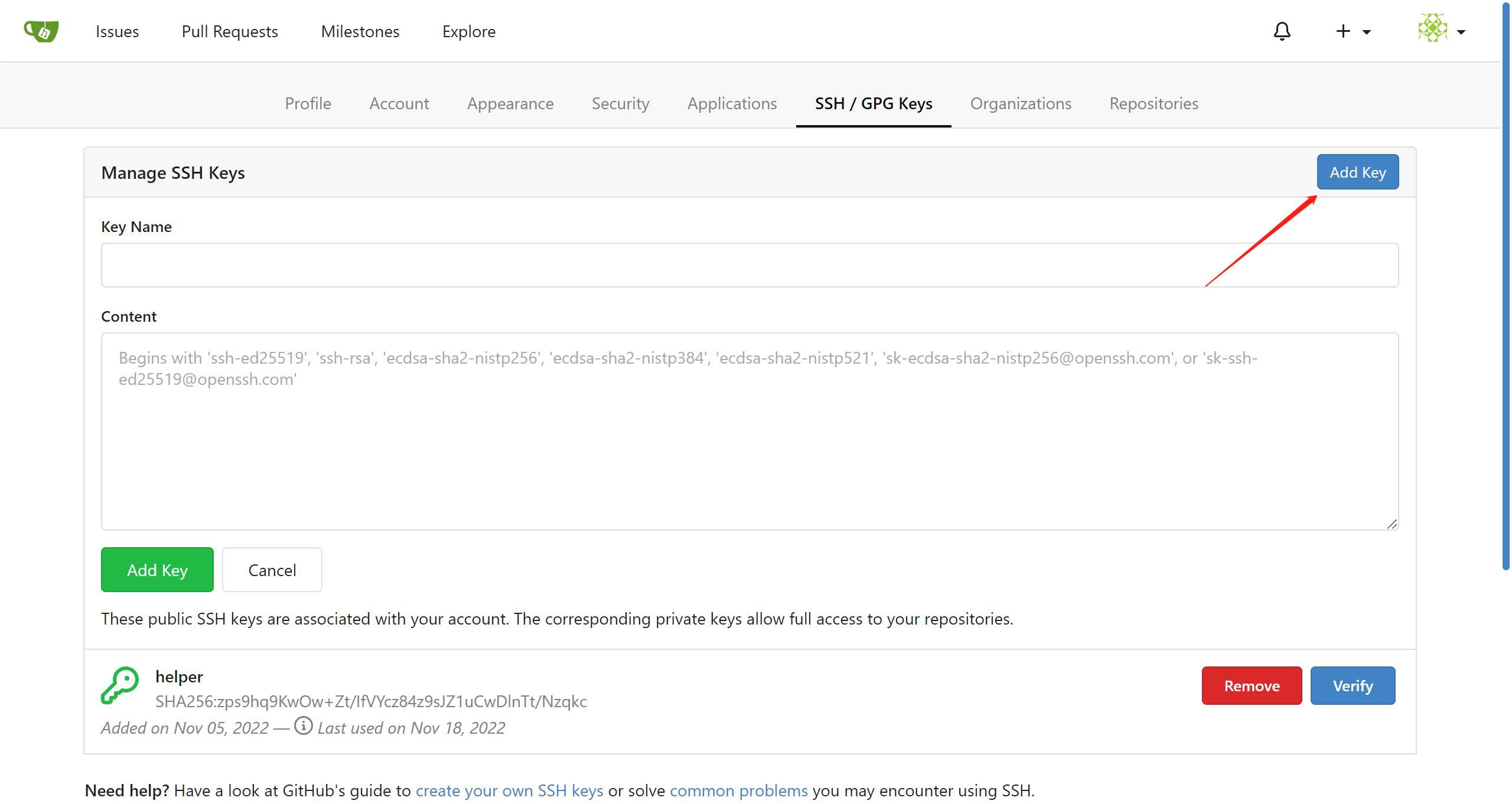

Click Generate Discovery ISO directly; we will customize the SSH key later, so there is no need to configure it now.

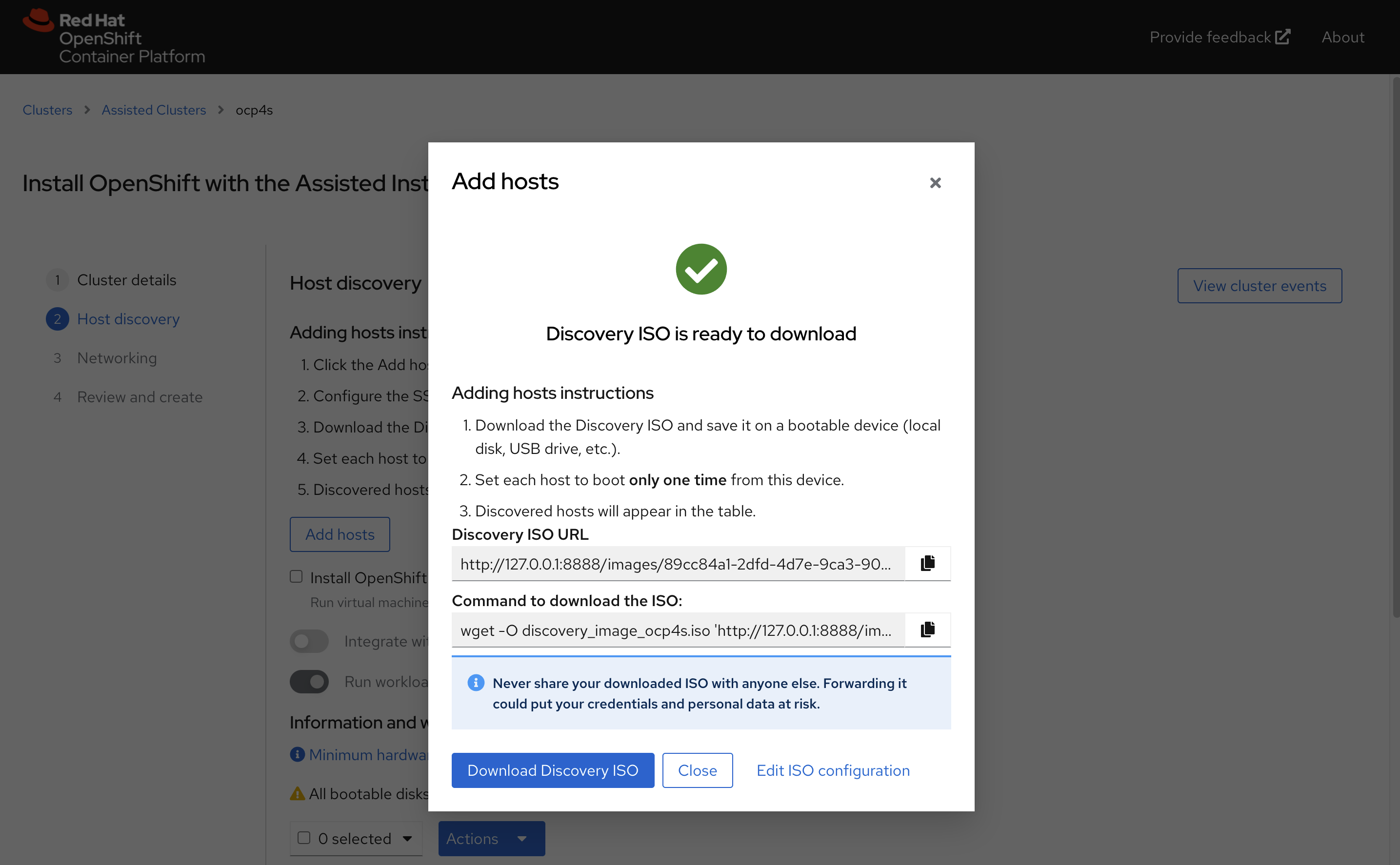

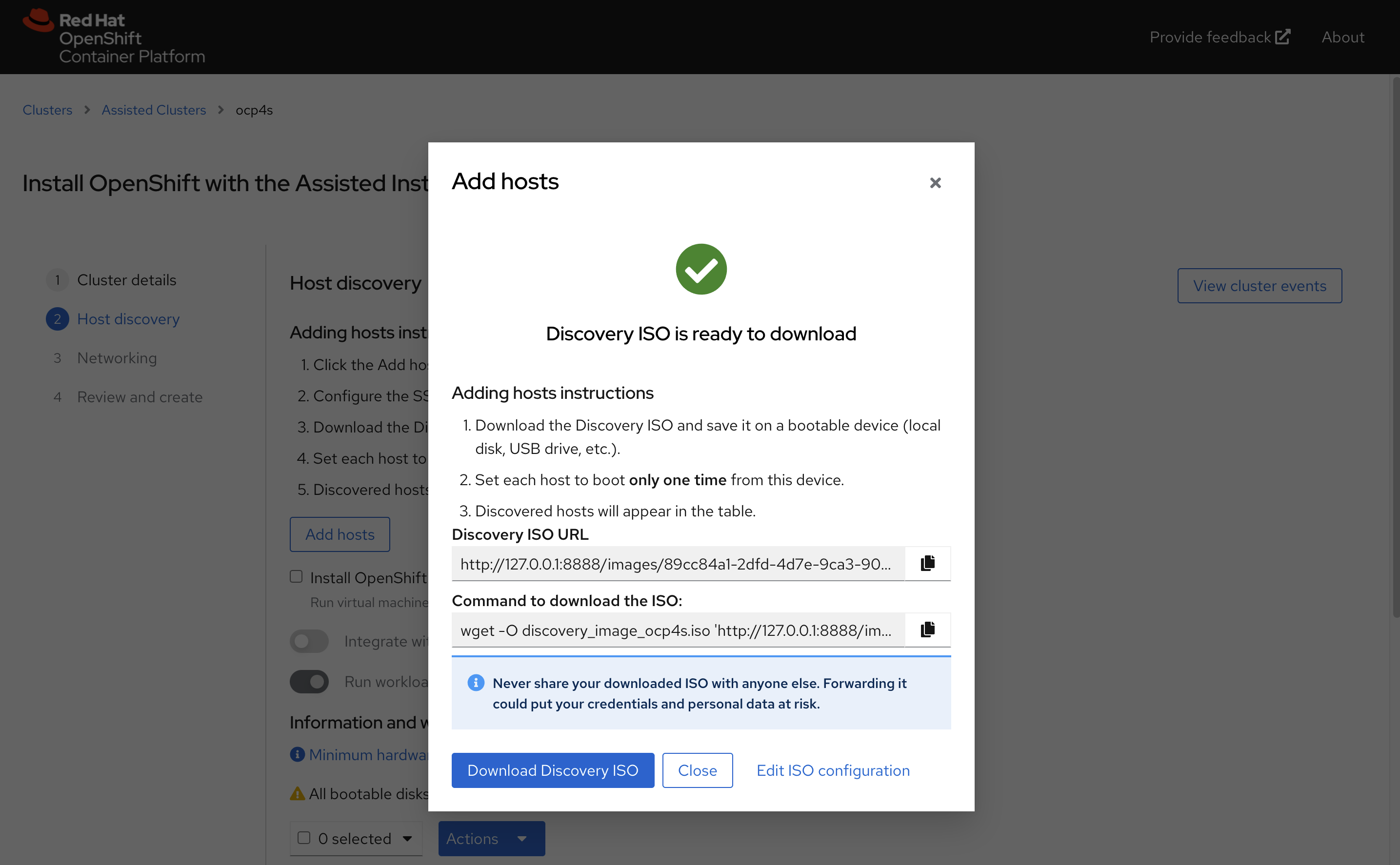

Write down the download command, because we need the infra env ID it contains

Our command is

wget -O discovery_image_ocp4s.iso 'http://127.0.0.1:8888/images/78506b3c-46e4-47f7-8a18-ec1ca4baa3b9?arch=x86_64&type=full-iso&version=4.9'
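The infra env ID is the UUID right after `/images/` in that download URL. A small sketch that extracts it with a bash regex; `infra_env_id_from_url` is a hypothetical helper name, and the URL layout is assumed from the command above.

```shell
# Hypothetical helper: print the UUID that follows /images/ in the URL
infra_env_id_from_url() {
  local re='/images/([0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})'
  [[ $1 =~ $re ]] || return 1
  echo "${BASH_REMATCH[1]}"
}

infra_env_id_from_url 'http://127.0.0.1:8888/images/78506b3c-46e4-47f7-8a18-ec1ca4baa3b9?arch=x86_64&type=full-iso&version=4.9'
```

The printed value is what goes into `INFRA_ENV_ID` in the next step.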

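Customize the assisted install service configuration

The ISO generated by the assisted install service requires a DHCP service on the lab network. We want static IPs, so we customize the assisted install service to enable a feature that is still hidden (not yet officially supported).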

# on helper

cd /data/sno

SNO_IP=172.21.6.13

SNO_GW=172.21.6.254

SNO_NETMAST=255.255.255.0

SNO_NETMAST_S=24

SNO_HOSTNAME=ocp4-sno

SNO_IF=enp1s0

SNO_IF_MAC=`printf '00:60:2F:%02X:%02X:%02X' $[RANDOM%256] $[RANDOM%256] $[RANDOM%256]`

SNO_DNS=172.21.1.1

SNO_DISK=/dev/vda

SNO_CORE_PWD=redhat

echo ${SNO_IF_MAC} > /data/sno/sno.mac

ASSISTED_SERVICE_URL=http://172.21.6.103:8080

# infra id is part of download url on UI

INFRA_ENV_ID=78506b3c-46e4-47f7-8a18-ec1ca4baa3b9

NODE_SSH_KEY="$(cat ~/.ssh/id_rsa.pub)"

request_body=$(mktemp)
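Two of the variables above must stay consistent: `SNO_NETMAST_S` has to be the prefix length of `SNO_NETMAST`, and `SNO_IF_MAC` has to be a well-formed MAC address, since it is later matched against the VM's NIC. A sketch of both checks in plain bash. `random_mac` and `mask_to_prefix` are hypothetical helper names, and `mask_to_prefix` simply counts set bits, so it assumes a valid contiguous netmask.

```shell
random_mac() {
  # same scheme as SNO_IF_MAC above: fixed 00:60:2F prefix plus three random octets
  printf '00:60:2F:%02X:%02X:%02X\n' $((RANDOM % 256)) $((RANDOM % 256)) $((RANDOM % 256))
}

mask_to_prefix() {
  # count the 1 bits of a dotted-quad netmask, e.g. 255.255.255.0 -> 24
  local IFS=. octet bits=0
  for octet in $1; do
    while (( octet > 0 )); do
      bits=$(( bits + (octet & 1) ))
      octet=$(( octet >> 1 ))
    done
  done
  echo "$bits"
}

mac=$(random_mac)
if [[ $mac =~ ^([0-9A-F]{2}:){5}[0-9A-F]{2}$ ]]; then
  echo "MAC looks valid: $mac"
fi

if [[ $(mask_to_prefix 255.255.255.0) == 24 ]]; then
  echo "255.255.255.0 matches prefix length 24"
fi
```

Against the variables above, the consistency check would be `[[ $(mask_to_prefix "$SNO_NETMAST") == "$SNO_NETMAST_S" ]]`.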

cat << EOF > /data/sno/server-a.yaml
dns-resolver:
  config:
    server:
    - ${SNO_DNS}
interfaces:
- ipv4:
    address:
    - ip: ${SNO_IP}
      prefix-length: ${SNO_NETMAST_S}
    dhcp: false
    enabled: true
  name: ${SNO_IF}
  state: up
  type: ethernet
routes:
  config:
  - destination: 0.0.0.0/0
    next-hop-address: ${SNO_GW}
    next-hop-interface: ${SNO_IF}
    table-id: 254
EOF

cat << EOF > /data/sno/static.ip.bu
variant: openshift
version: 4.9.0
metadata:
  labels:
    machineconfiguration.openshift.io/role: master
  name: 99-zzz-master-static-ip
storage:
  files:
    - path: /etc/NetworkManager/system-connections/${SNO_IF}.nmconnection
      # NetworkManager ignores keyfiles that are readable by group/others
      mode: 0600
      overwrite: true
      contents:
        inline: |
          [connection]
          id=${SNO_IF}
          type=ethernet
          autoconnect-retries=1
          interface-name=${SNO_IF}
          multi-connect=1
          permissions=
          wait-device-timeout=60000

          [ethernet]
          mac-address-blacklist=

          [ipv4]
          address1=${SNO_IP}/${SNO_NETMAST_S},${SNO_GW}
          dhcp-hostname=${SNO_HOSTNAME}
          dhcp-timeout=90
          dns=${SNO_DNS};
          dns-search=
          may-fail=false
          method=manual

          [ipv6]
          addr-gen-mode=eui64
          dhcp-hostname=${SNO_HOSTNAME}
          dhcp-timeout=90
          dns-search=
          method=disabled

          [proxy]
EOF

# https://access.redhat.com/solutions/6194821

# butane /data/sno/static.ip.bu | python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))'

# https://stackoverflow.com/questions/2854655/command-to-escape-a-string-in-bash

# VAR_PULL_SEC=`printf "%q" $(cat /data/pull-secret.json)`

tmppath=$(mktemp)

butane /data/sno/static.ip.bu | python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))' | jq -c '.spec.config | .ignition.version = "3.1.0" ' > ${tmppath}

VAR_NMSTATIC=$(cat ${tmppath})

# rm -f ${tmppath}

jq -n --arg SSH_KEY "$NODE_SSH_KEY" \
  --arg NMSTATE_YAML1 "$(cat server-a.yaml)" \
  --arg MAC_ADDR "$(cat /data/sno/sno.mac)" \
  --arg PULL_SEC "$(cat /data/pull-secret.json)" \
  --arg NMSTATIC "${VAR_NMSTATIC}" \
  '{
    "proxy":{"http_proxy":"","https_proxy":"","no_proxy":""},
    "ssh_authorized_key":$SSH_KEY,
    "pull_secret":$PULL_SEC,
    "image_type":"full-iso",
    "ignition_config_override":$NMSTATIC,
    "static_network_config": [
      {
        "network_yaml": $NMSTATE_YAML1,
        "mac_interface_map": [{"mac_address": $MAC_ADDR, "logical_nic_name": "enp1s0"}]
      }
    ]
  }' > $request_body

# take a look at the request body we built

cat $request_body

# send the request to the assisted install service to apply the customization

curl -H "Content-Type: application/json" -X PATCH -d @$request_body ${ASSISTED_SERVICE_URL}/api/assisted-install/v2/infra-envs/$INFRA_ENV_ID

# {"cluster_id":"850934fd-fa64-4057-b9d2-1eeebd890e1a","cpu_architecture":"x86_64","created_at":"2022-02-11T03:54:46.632598Z","download_url":"http://127.0.0.1:8888/images/89cc84a1-2dfd-4d7e-9ca3-903342c40d60?arch=x86_64&type=full-iso&version=4.9","email_domain":"Unknown","expires_at":"0001-01-01T00:00:00.000Z","href":"/api/assisted-install/v2/infra-envs/89cc84a1-2dfd-4d7e-9ca3-903342c40d60","id":"89cc84a1-2dfd-4d7e-9ca3-903342c40d60","kind":"InfraEnv","name":"ocp4s_infra-env","openshift_version":"4.9","proxy":{"http_proxy":"","https_proxy":"","no_proxy":""},"pull_secret_set":true,"ssh_authorized_key":"ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCrkO4oLIFTwjkGON+aShlQRKwXHOf3XKrGDmpb+tQM3UcbsF2U7klsr9jBcGObQMZO7KBW8mlRu0wC2RxueBgjbqvylKoFacgVZg6PORfkclqE1gZRYFwoxDkLo2c5y5B7OhcAdlHO0eR5hZ3/0+8ZHZle0W+A0AD7qqowO2HlWLkMMt1QXFD7R0r6dzTs9u21jASGk3jjYgCOw5iHvqm2ueVDFAc4yVwNZ4MXKg5MRvqAJDYPqhaRozLE60EGIziy9SRj9HWynyNDncCdL1/IBK2z9T0JwDebD6TDNcPCtL+AeKIpaHed52PkjnFf+Q+8/0Z0iXt6GyFYlx8OkxdsiMgMxiXx43yIRaWZjx54kVtc9pB6CL50UKPQ2LjuFPIZSfaCab5KDgPRtzue82DE6Mxxg4PS+FTW32/bq1WiOxCg9ABrZ0n1CGaZWFepJkSw47wodMnvlBkcKY3Rn/SsLZVOUsJysd+b08LQgl1Fr3hjVrEQMLbyU0UxvoerYfk= root@ocp4-helper","static_network_config":"dns-resolver:\n config:\n server:\n - 172.21.1.1\ninterfaces:\n- ipv4:\n address:\n - ip: 172.21.6.13\n prefix-length: 24\n dhcp: false\n enabled: true\n name: enp1s0\n state: up\n type: ethernet\nroutes:\n config:\n - destination: 0.0.0.0/0\n next-hop-address: 172.21.6.254\n next-hop-interface: enp1s0\n table-id: 254HHHHH00:60:2F:8B:42:88=enp1s0","type":"full-iso","updated_at":"2022-02-11T04:01:14.008388Z","user_name":"admin"}

# on helper

cd /data/sno/

wget -O discovery_image_ocp4s.iso "http://172.21.6.103:8888/images/${INFRA_ENV_ID}?arch=x86_64&type=full-iso&version=4.9"

# coreos-installer iso kargs modify -a \

# " ip=${SNO_IP}::${SNO_GW}:${SNO_NETMAST}:${SNO_HOSTNAME}:${SNO_IF}:none nameserver=${SNO_DNS}" \

# /data/sno/discovery_image_ocp4s.iso

/bin/mv -f /data/sno/discovery_image_ocp4s.iso /data/sno/sno.iso

Start the KVM

Back on the KVM host, start the VM and begin installing single node openshift

# back to kvm host

create_lv() {

var_vg=$1

var_lv=$2

var_size=$3

lvremove -f $var_vg/$var_lv

lvcreate -y -L $var_size -n $var_lv $var_vg

wipefs --all --force /dev/$var_vg/$var_lv

}

create_lv vgdata lvsno 120G

export KVM_DIRECTORY=/data/kvm

mkdir -p ${KVM_DIRECTORY}

cd ${KVM_DIRECTORY}

scp root@192.168.7.11:/data/sno/sno.* ${KVM_DIRECTORY}/

# on kvm host

# export KVM_DIRECTORY=/data/kvm

virt-install --name=ocp4-sno --vcpus=16 --ram=65536 \
  --cpu=host-model \
  --disk path=/dev/vgdata/lvsno,device=disk,bus=virtio,format=raw \
  --os-variant rhel8.3 --network bridge=baremetal,model=virtio,mac=$(<sno.mac) \
  --graphics vnc,port=59012 \
  --boot menu=on --cdrom ${KVM_DIRECTORY}/sno.iso

在 assisted install service里面配置sno参数

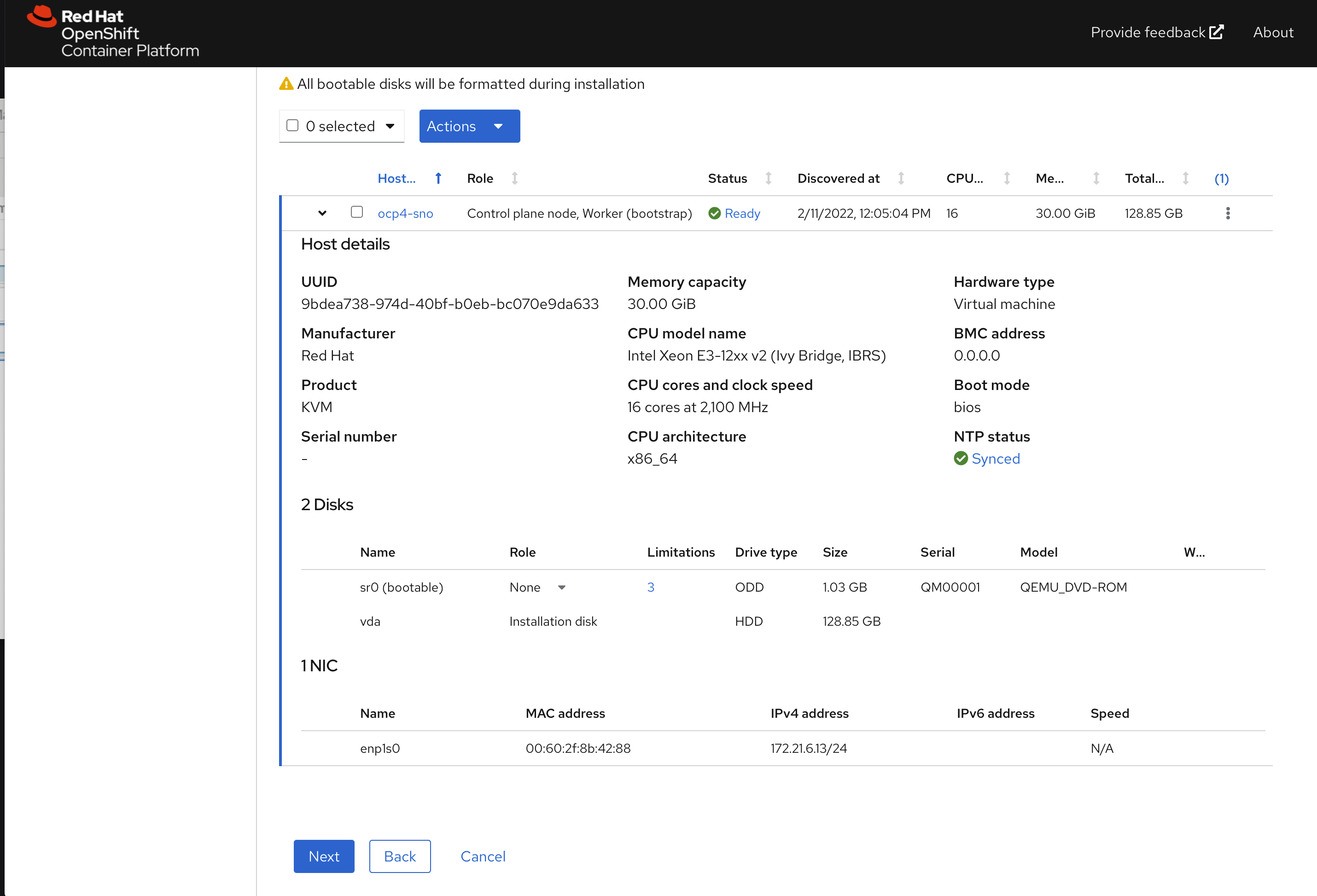

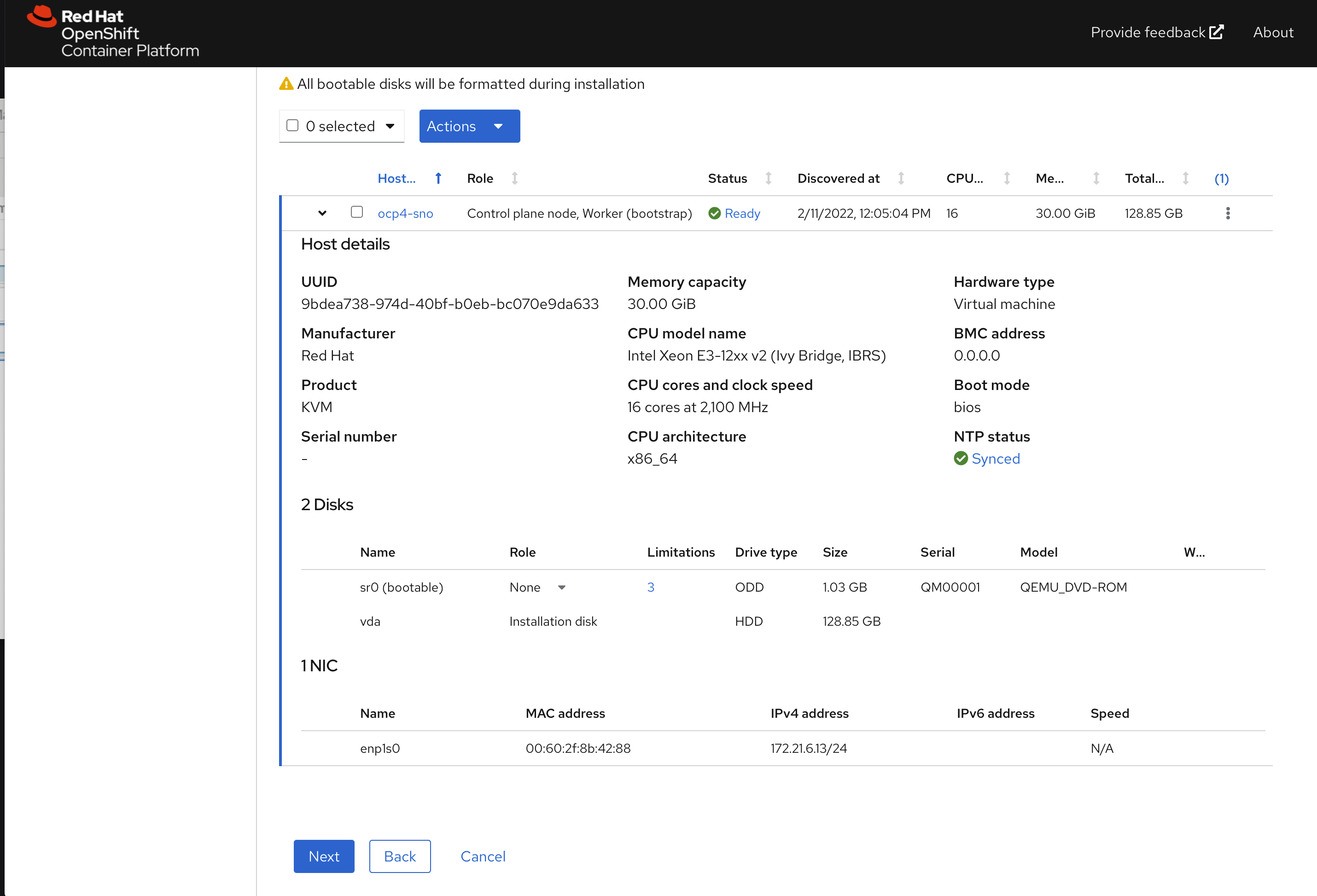

回到 assisted install service webUI,能看到node已经被发现

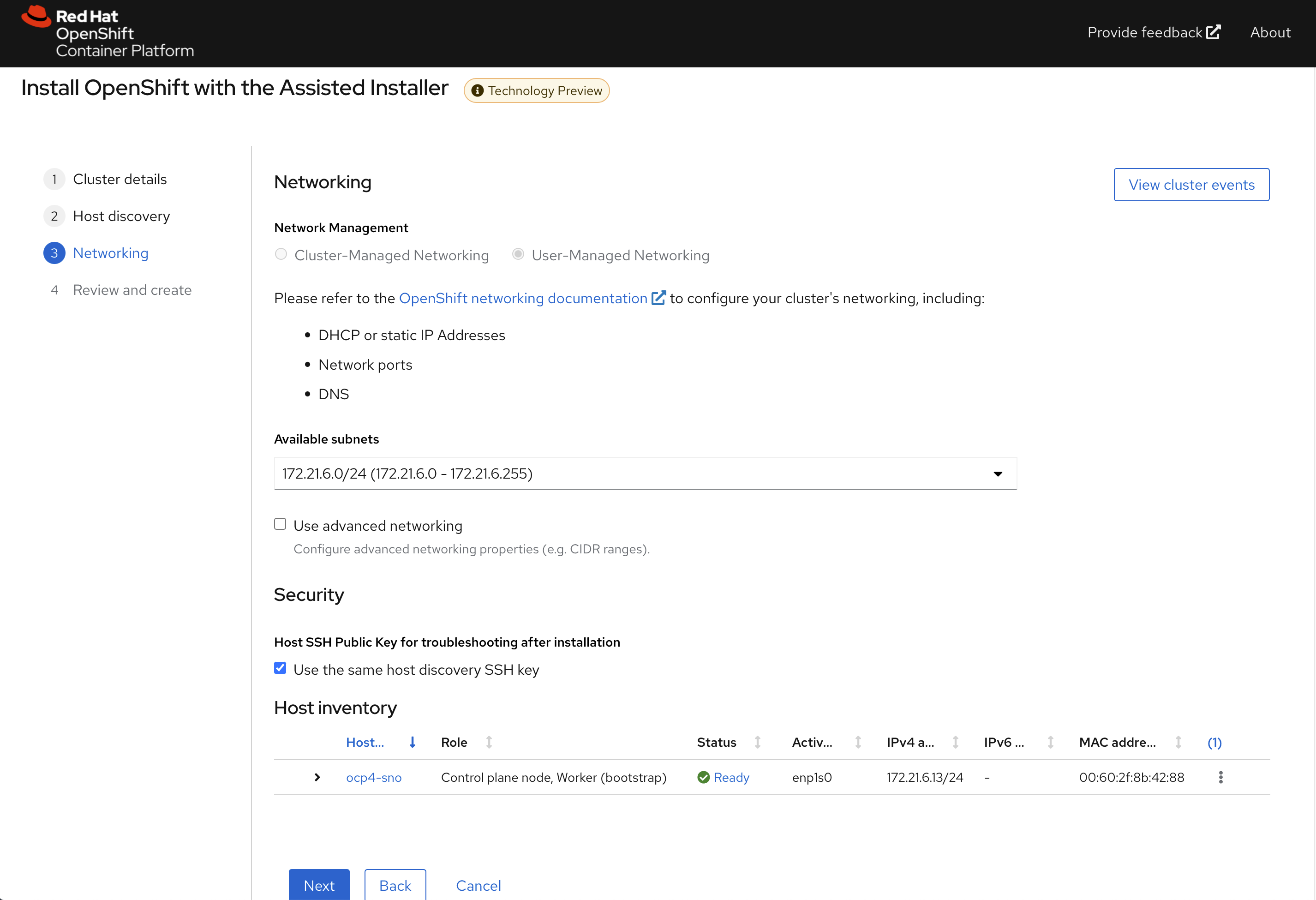

点击下一步,配置物理机的安装子网

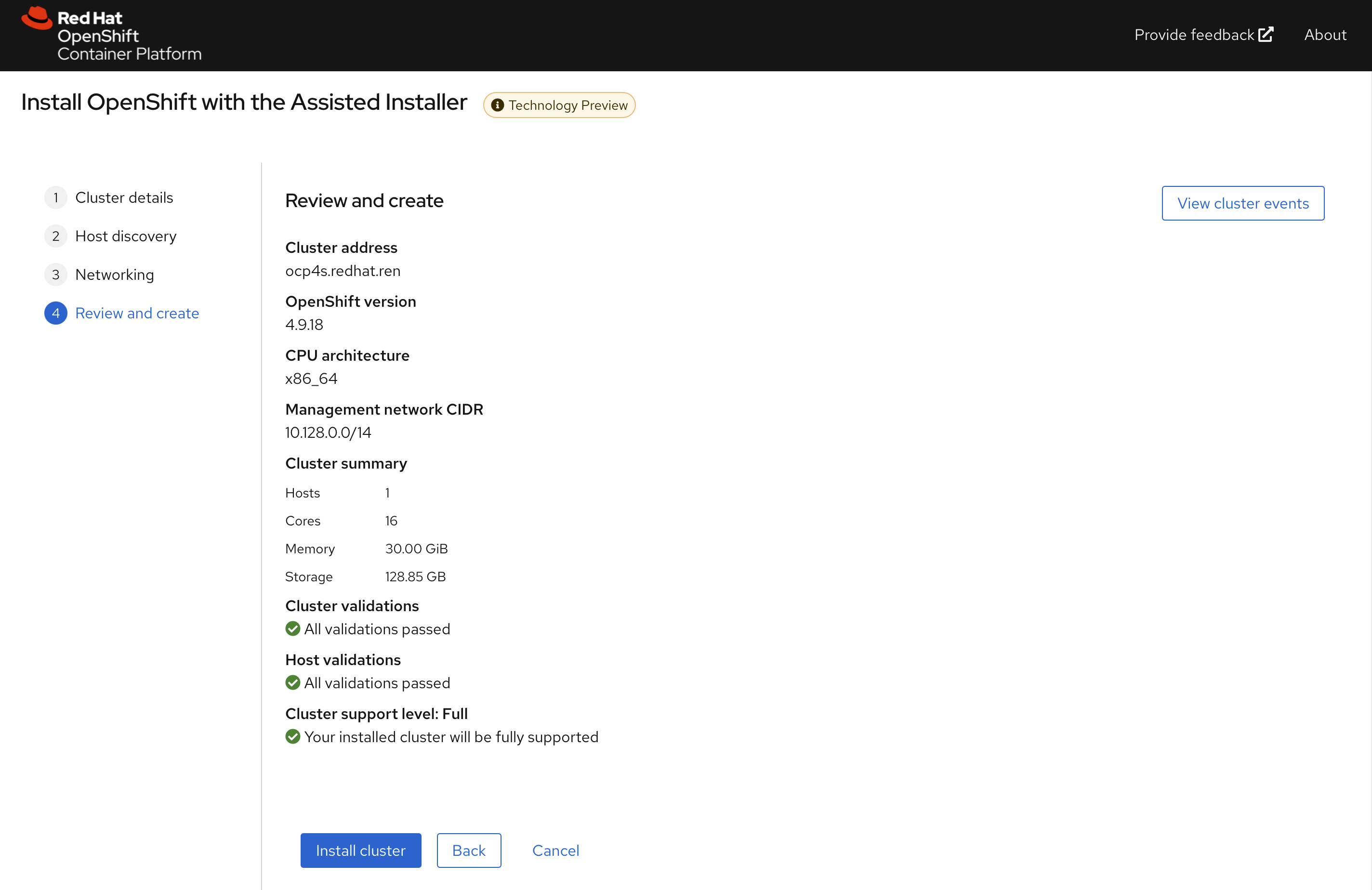

点击下一步,回顾集群配置信息

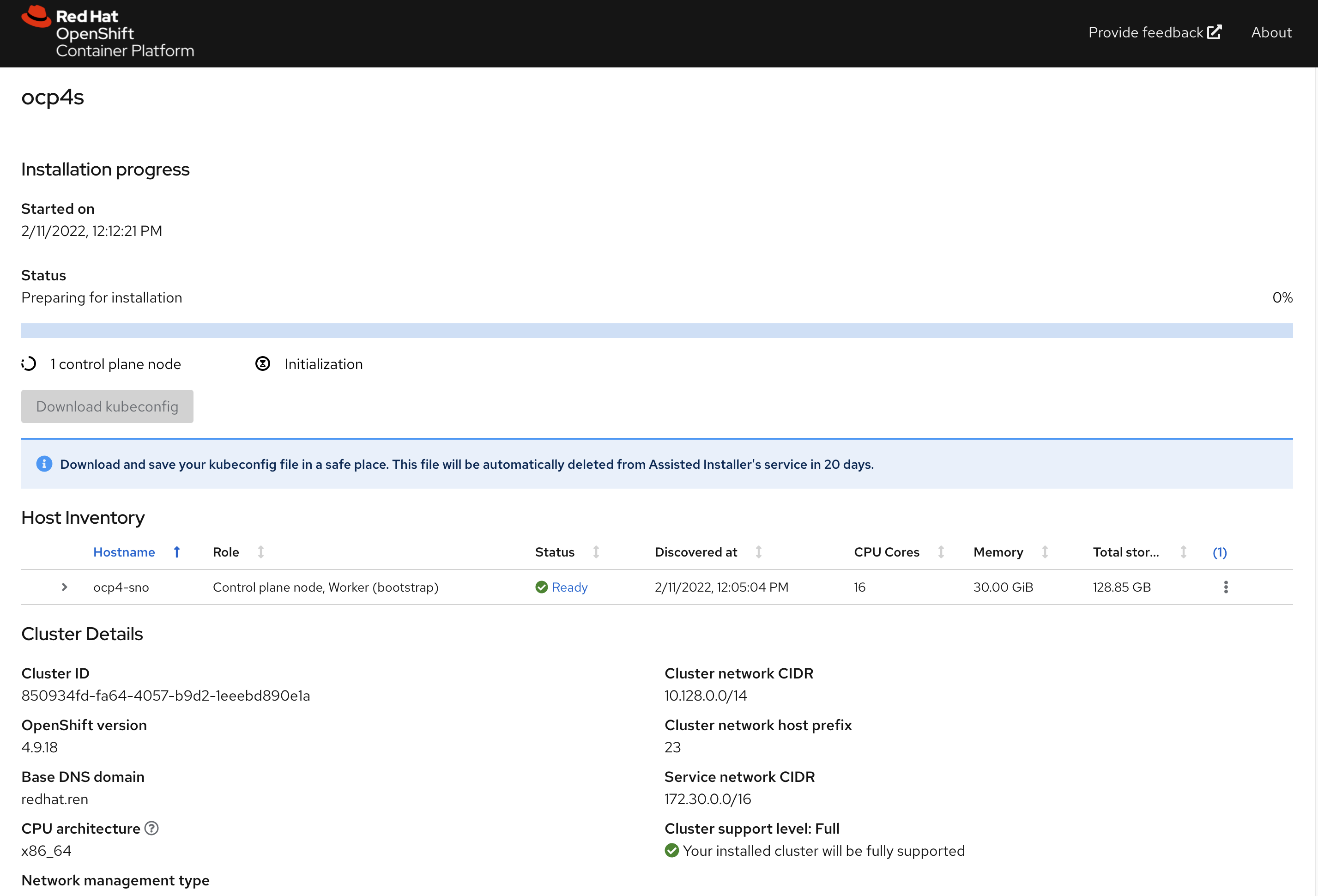

开始安装,到这里,我们等待就可以

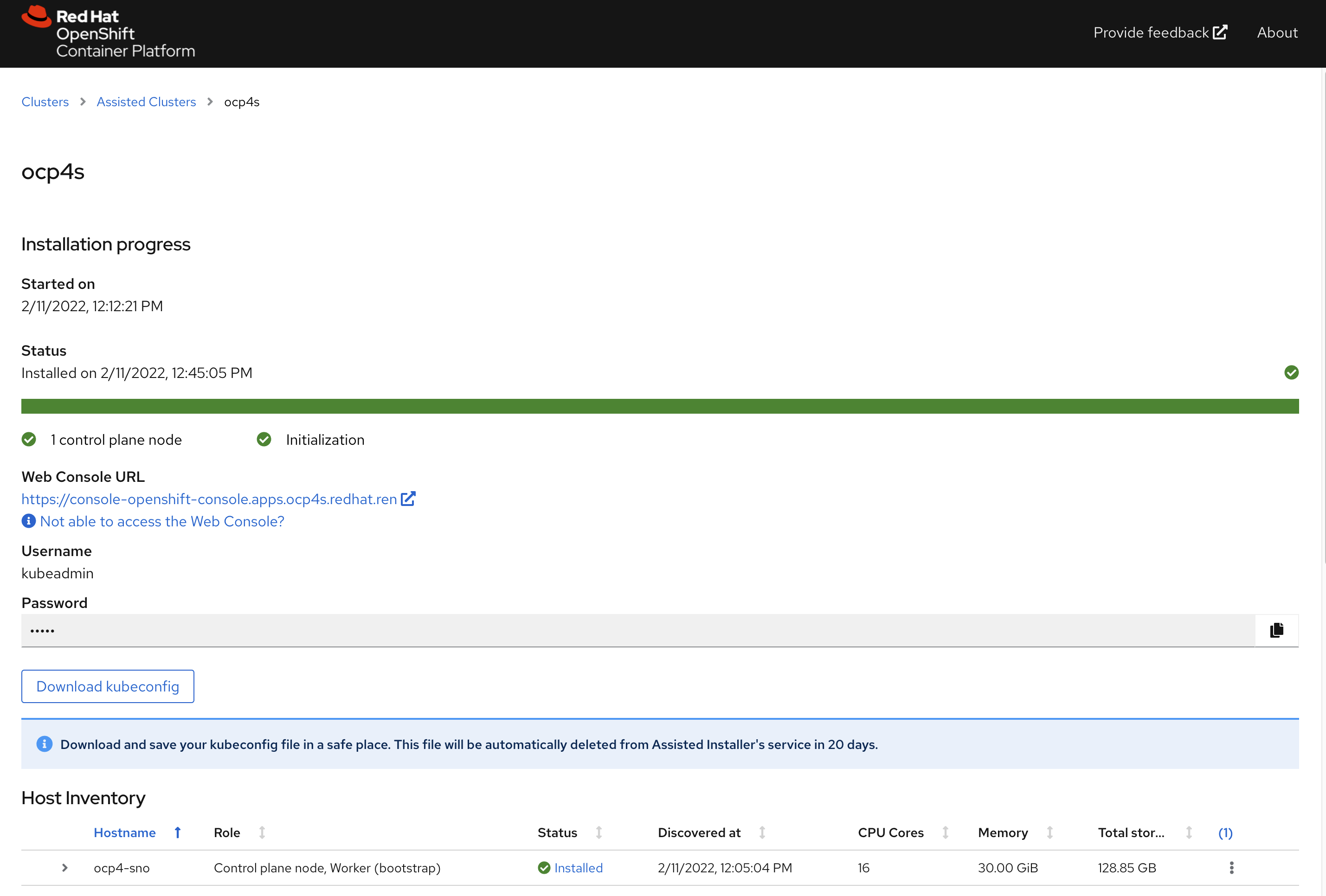

一段时间以后,通常20-30分钟,就安装完成了,当然这要网络情况比较好的条件下。

⚠️不要忘记下载集群证书,还有webUI的用户名,密码。

访问sno集群

# back to helper

# copy kubeconfig from web browser to /data/sno

export KUBECONFIG=/data/sno/auth/kubeconfig

oc get node

# NAME STATUS ROLES AGE VERSION

# ocp4-sno Ready master,worker 71m v1.22.3+e790d7f

oc get co

# NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

# authentication 4.9.18 True False False 54m

# baremetal 4.9.18 True False False 58m

# cloud-controller-manager 4.9.18 True False False 63m

# cloud-credential 4.9.18 True False False 68m

# cluster-autoscaler 4.9.18 True False False 59m

# config-operator 4.9.18 True False False 69m

# console 4.9.18 True False False 54m

# csi-snapshot-controller 4.9.18 True False False 68m

# dns 4.9.18 True False False 58m

# etcd 4.9.18 True False False 62m

# image-registry 4.9.18 True False False 55m

# ingress 4.9.18 True False False 57m

# insights 4.9.18 True False False 63m

# kube-apiserver 4.9.18 True False False 58m

# kube-controller-manager 4.9.18 True False False 61m

# kube-scheduler 4.9.18 True False False 60m

# kube-storage-version-migrator 4.9.18 True False False 68m

# machine-api 4.9.18 True False False 59m

# machine-approver 4.9.18 True False False 60m

# machine-config 4.9.18 True False False 63m

# marketplace 4.9.18 True False False 68m

# monitoring 4.9.18 True False False 54m

# network 4.9.18 True False False 68m

# node-tuning 4.9.18 True False False 64m

# openshift-apiserver 4.9.18 True False False 55m

# openshift-controller-manager 4.9.18 True False False 60m

# openshift-samples 4.9.18 True False False 57m

# operator-lifecycle-manager 4.9.18 True False False 60m

# operator-lifecycle-manager-catalog 4.9.18 True False False 60m

# operator-lifecycle-manager-packageserver 4.9.18 True False False 58m

# service-ca 4.9.18 True False False 68m

# storage 4.9.18 True False False 63m
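Rather than eyeballing the table, the `oc get co` output can be filtered for operators that are not `Available=True`, `Progressing=False`, `Degraded=False`. A minimal awk sketch; `unhealthy_operators` is a hypothetical helper name, and it assumes the column order shown above (NAME, VERSION, AVAILABLE, PROGRESSING, DEGRADED). A row with an empty VERSION column shifts the fields, so such an operator is reported too, which is usually what you want while the install is still settling.

```shell
# Hypothetical helper: read `oc get co` output on stdin, print unhealthy operator names
unhealthy_operators() {
  awk 'NR > 1 && !($3 == "True" && $4 == "False" && $5 == "False") { print $1 }'
}

# usage against a live cluster:  oc get co | unhealthy_operators
# offline demo with sample rows:
unhealthy_operators << 'EOF'
NAME             VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE
authentication   4.9.18    True        False         False      54m
console          4.9.18    True        True          False      2m
ingress          4.9.18    False       False         True       1m
EOF
```

The demo prints `console` and `ingress`; an empty result means every cluster operator is healthy.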

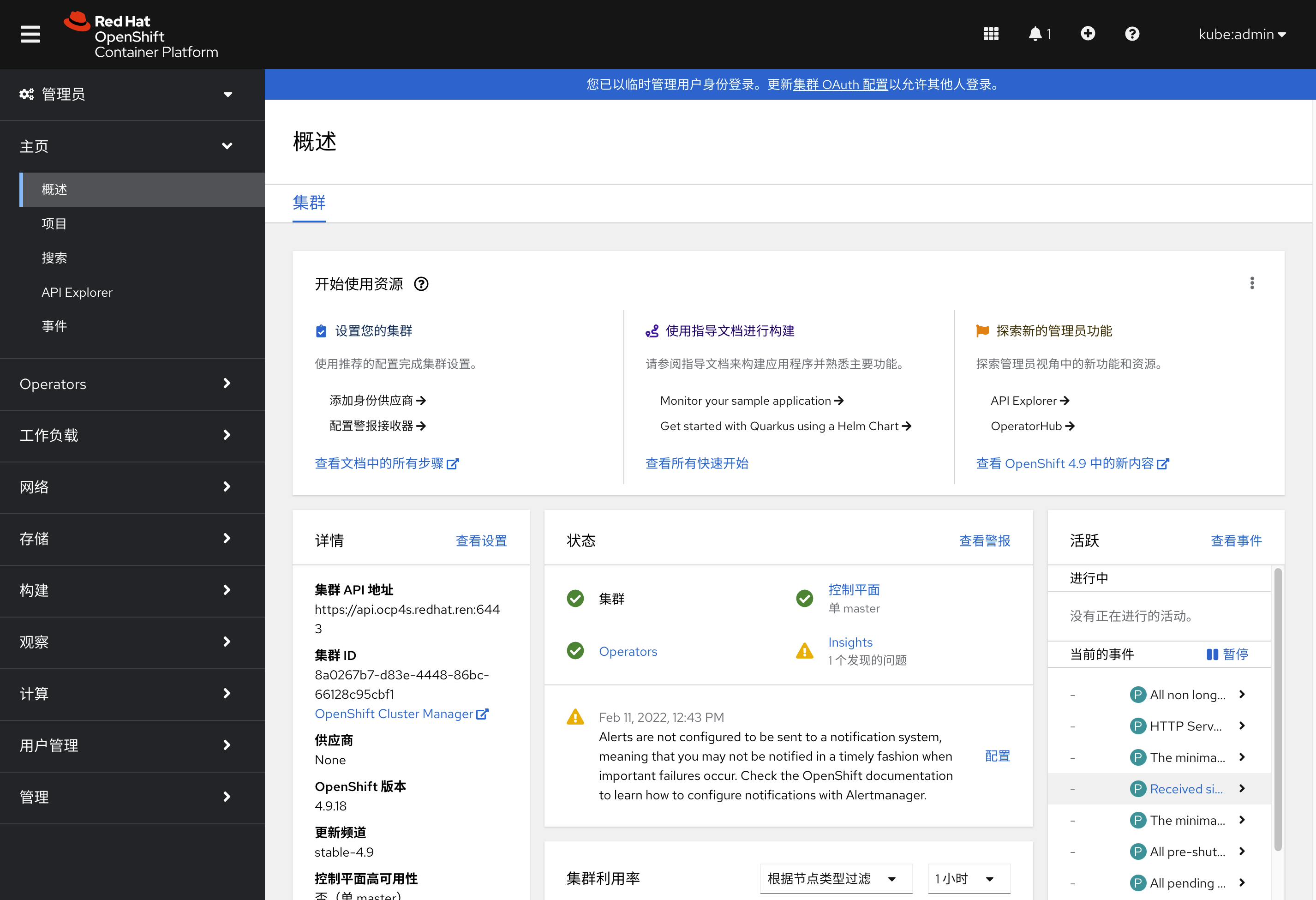

Access the cluster web UI

https://console-openshift-console.apps.ocp4s.redhat.ren/

Username / password: kubeadmin / 3QS3M-HA3Px-376HD-bvfif

reference

- https://github.com/openshift/assisted-service/tree/master/docs/user-guide

- https://access.redhat.com/solutions/6135171

- https://github.com/openshift/assisted-service/blob/master/docs/user-guide/assisted-service-on-local.md

- https://github.com/openshift/assisted-service/blob/master/docs/user-guide/restful-api-guide.md

search

- pre-network-manager-config.sh

- /Users/wzh/Desktop/dev/assisted-service/internal/constants/scripts.go

- NetworkManager

https://superuser.com/questions/218340/how-to-generate-a-valid-random-mac-address-with-bash-shell

end

cat << EOF > test

02:00:00:2c:23:a5=enp1s0

EOF

cat test | cut -d= -f1 | tr '[:lower:]' '[:upper:]'

printf '00-60-2F-%02X-%02X-%02X\n' $[RANDOM%256] $[RANDOM%256] $[RANDOM%256]

virsh domifaddr freebsd11.1

openshift 4.9 single node, assisted install mode, without dhcp, disconnected

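This article describes how to use the assisted service to install a single-node OpenShift 4 cluster. What makes it special: by default, OpenShift 4 expects a DHCP service on the network so that nodes get an IP address at boot, which they need in order to pull container images and talk to the assisted service to fetch their configuration. Most customer networks, however, do not allow DHCP, so here we use a currently hidden feature of the assisted service to deploy with static IPs.

The customer environment and requirements assumed in this lab:

- The lab network has no DHCP

- The lab network cannot reach the Internet

- There are 2 hosts in the lab environment

- Single-node OpenShift 4 (bare-metal mode) will be installed on 1 of them

Because of the author's lab constraints, KVM is used in place of bare metal.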

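The installation roughly goes like this:

- Start the helper VM and configure the DNS service on it

- Start the local assisted service

- Configure the assisted service

- Download the ISO from the assisted service

- Boot the KVM/bare-metal host from the ISO

- Finish the configuration in the assisted service and start the installation

- Watch and wait for the installation to finish

- Get the OpenShift 4 username, password and other details, then log in to the cluster.

Architecture diagram of this lab: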

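Installation media

This installation uses OpenShift 4.9.12. For convenience, the author has packaged the installation media; besides the OpenShift images, it includes some auxiliary software and tools.

The packaged installation bundle, version 4.9.12, can be downloaded from Baidu Netdisk:

- 4.9.12

- Link: https://pan.baidu.com/s/1Wj5MUBLMFli1kOit1eafug Extraction code: ur8r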

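Deploy DNS

For a static-IP installation in assisted install mode, a DNS service must be deployed on the lab network. Because we are deploying single-node OpenShift, we only need to point the following 4 names at the same IP address. Of course, decide on the domain names in advance.

- api.ocp4s-ais.redhat.ren

- api-int.ocp4s-ais.redhat.ren

- *.apps.ocp4s-ais.redhat.ren

- ocp4-sno.ocp4s-ais.redhat.ren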

cd /data/ocp4/ocp4-upi-helpernode-master/

cat << 'EOF' > /data/ocp4/ocp4-upi-helpernode-master/vars.yaml
---
ocp_version: 4.9.12
ssh_gen_key: false
staticips: true
firewalld: false
dns_forward: yes
iso:
  iso_dl_url: "file:///data/ocp4/rhcos-live.x86_64.iso"
  my_iso: "rhcos-live.iso" # this is internal file, just leave as it.
helper:
  name: "helper"
  ipaddr: "192.168.7.11"
  networkifacename: "enp1s0"
  gateway: "192.168.7.1"
  netmask: "255.255.255.0"
dns:
  domain: "redhat.ren"
  clusterid: "sno"
  forwarder1: "172.21.1.1"
  forwarder2: "172.21.1.1"
bootstrap:
  name: "bootstrap"
  ipaddr: "192.168.7.112"
  interface: "enp1s0"
  install_drive: "vda"
masters:
  - name: "master-0"
    ipaddr: "192.168.7.113"
    interface: "enp1s0"
    install_drive: "vda"
  # - name: "master-1"
  #   ipaddr: "192.168.7.14"
  #   interface: "enp1s0"
  #   install_drive: "vda"
  # - name: "master-2"
  #   ipaddr: "192.168.7.15"
  #   interface: "enp1s0"
  #   install_drive: "vda"
workers:
  - name: "worker-0"
    ipaddr: "192.168.7.116"
    interface: "eno1"
    install_drive: "sda"
  - name: "worker-1"
    ipaddr: "192.168.7.117"
    interface: "enp1s0"
    install_drive: "sda"
  # - name: "worker-2"
  #   ipaddr: "192.168.7.18"
  #   interface: "enp1s0"
  #   install_drive: "vda"
  # - name: "infra-0"
  #   ipaddr: "192.168.7.19"
  #   interface: "enp1s0"
  #   install_drive: "vda"
  # - name: "infra-1"
  #   ipaddr: "192.168.7.20"
  #   interface: "enp1s0"
  #   install_drive: "vda"
  # - name: "worker-3"
  #   ipaddr: "192.168.7.21"
  #   interface: "enp1s0"
  #   install_drive: "vda"
  # - name: "worker-4"
  #   ipaddr: "192.168.7.22"
  #   interface: "enp1s0"
  #   install_drive: "vda"
others:
  - name: "registry"
    ipaddr: "192.168.7.1"
  - name: "yum"
    ipaddr: "192.168.7.1"
  - name: "quay"
    ipaddr: "192.168.7.1"
  - name: "nexus"
    ipaddr: "192.168.7.1"
  - name: "git"
    ipaddr: "192.168.7.1"
otherdomains:
  - domain: "infra.redhat.ren"
    hosts:
    - name: "registry"
      ipaddr: "192.168.7.1"
    - name: "yum"
      ipaddr: "192.168.7.1"
    - name: "quay"
      ipaddr: "192.168.7.1"
    - name: "quaylab"
      ipaddr: "192.168.7.1"
    - name: "nexus"
      ipaddr: "192.168.7.1"
    - name: "git"
      ipaddr: "192.168.7.1"
  - domain: "ocp4s-ais.redhat.ren"
    hosts:
    - name: "api"
      ipaddr: "192.168.7.13"
    - name: "api-int"
      ipaddr: "192.168.7.13"
    - name: "ocp4-sno"
      ipaddr: "192.168.7.13"
    - name: "*.apps"
      ipaddr: "192.168.7.13"
force_ocp_download: false
remove_old_config_files: false
ocp_client: "file:///data/ocp4/{{ ocp_version }}/openshift-client-linux-{{ ocp_version }}.tar.gz"
ocp_installer: "file:///data/ocp4/{{ ocp_version }}/openshift-install-linux-{{ ocp_version }}.tar.gz"
ppc64le: false
arch: 'x86_64'
chronyconfig:
  enabled: true
  content:
    - server: "192.168.7.11"
      options: iburst
setup_registry: # don't worry about this, just leave it here
  deploy: false
  registry_image: docker.io/library/registry:2
  local_repo: "ocp4/openshift4"
  product_repo: "openshift-release-dev"
  release_name: "ocp-release"
  release_tag: "4.6.1-x86_64"
ocp_filetranspiler: "file:///data/ocp4/filetranspiler.tgz"
registry_server: "registry.ocp4.redhat.ren:5443"
EOF

ansible-playbook -e @vars.yaml tasks/main.yml

/bin/cp -f /data/ocp4/rhcos-live.x86_64.iso /var/www/html/install/live.iso

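Deploy the assisted install service

The assisted install service comes in 2 versions: the hosted one on cloud.redhat.com and a local version. Both have the same features; since we need to customize it, we use the local version.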

# https://github.com/openshift/assisted-service/blob/master/docs/user-guide/assisted-service-on-local.md

# https://github.com/openshift/assisted-service/tree/master/deploy/podman

podman version

# Version: 3.4.2

# API Version: 3.4.2

# Go Version: go1.16.12

# Built: Wed Feb 2 07:59:28 2022

# OS/Arch: linux/amd64

mkdir -p /data/assisted-service/

cd /data/assisted-service/

export http_proxy="http://192.168.195.54:5085"

export https_proxy=${http_proxy}

wget https://raw.githubusercontent.com/openshift/assisted-service/master/deploy/podman/configmap.yml

wget https://raw.githubusercontent.com/openshift/assisted-service/master/deploy/podman/pod.yml

/bin/cp -f configmap.yml configmap.yml.bak

unset http_proxy

unset https_proxy

sed -i 's/ SERVICE_BASE_URL:.*/ SERVICE_BASE_URL: "http:\/\/172.21.6.103:8090"/' configmap.yml

cat << EOF > /data/assisted-service/os_image.json

[{

"openshift_version": "4.9",

"cpu_architecture": "x86_64",

"url": "http://192.168.7.11:8080/install/live.iso",

"rootfs_url": "http://192.168.7.11:8080/install/rootfs.img",

"version": "49.84.202110081407-0"

}]

EOF

cat << EOF > /data/assisted-service/release.json

[{

"openshift_version": "4.9",

"cpu_architecture": "x86_64",

"url": "quaylab.infra.redhat.ren/ocp4/openshift4:4.9.12-x86_64",

"version": "4.9.12",

"default": true

}]

EOF

cat configmap.yml.bak \

| python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))' \

| jq --arg OSIMAGE "$(jq -c . /data/assisted-service/os_image.json)" '. | .data.OS_IMAGES = $OSIMAGE ' \

| jq --arg RELEASE_IMAGES "$(jq -c . /data/assisted-service/release.json)" '. | .data.RELEASE_IMAGES = $RELEASE_IMAGES ' \

| python3 -c 'import yaml, sys; print(yaml.dump(yaml.safe_load(sys.stdin), default_flow_style=False))' \

> configmap.yml
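The pipeline above stores OS_IMAGES and RELEASE_IMAGES in the ConfigMap as JSON-encoded strings, which is why jq uses --arg rather than --argjson. A quick sanity check is sketched below. It assumes the ConfigMap has first been converted to JSON with the same yaml-to-json one-liner; check_image_lists is a hypothetical helper, not part of assisted-service.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that data.OS_IMAGES and data.RELEASE_IMAGES
# in a JSON-converted configmap are strings that decode to non-empty lists.
check_image_lists() {
  python3 - "$1" <<'PY'
import json
import sys

with open(sys.argv[1]) as f:
    doc = json.load(f)

for key in ("OS_IMAGES", "RELEASE_IMAGES"):
    raw = doc["data"][key]
    # the ConfigMap value must be a string, not a nested JSON object
    assert isinstance(raw, str), key + " is not a string"
    items = json.loads(raw)
    assert isinstance(items, list) and items, key + " is not a non-empty list"
    print(key, len(items))
PY
}

# usage (assumption: PyYAML is installed, as the pipeline above already requires):
# python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))' \
#   < configmap.yml > /tmp/configmap.json
# check_image_lists /tmp/configmap.json
```

A failed assertion makes python3 exit non-zero, so the helper can also gate a script with `|| exit 1`.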

# start the local assisted service

cd /data/assisted-service/

podman play kube --configmap configmap.yml pod.yml

# inject the CA certificate of the offline image registry

podman cp /etc/crts/redhat.ren.ca.crt assisted-installer-service:/etc/pki/ca-trust/source/anchors/quaylab.crt

podman exec assisted-installer-service update-ca-trust

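# check how much disk space the image service cache uses (run while the pod is up)

podman exec assisted-installer-image-service du -h /data

# 1.1G /data

# to stop/remove the local assisted service later, run:

# cd /data/assisted-service/

# podman play kube --down pod.yml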

Once the service is running, open the following URL:

http://172.21.6.103:8080
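The pod can take a while before the UI responds. A small retry loop like the hypothetical wait_for below avoids guessing when it is ready: it reruns a command until the command succeeds or the attempts run out. The curl probe in the usage comment is an assumption about how you might check the UI. The assisted-service docs do not prescribe it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rerun a command until it succeeds.
# $1 = max attempts, $2 = seconds to sleep between attempts, rest = the command.
wait_for() {
  local attempts=$1 delay=$2 i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    # no need to sleep after the last failed attempt
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}

# usage (assumption: the web UI answers with HTTP 2xx on port 8080 once ready):
# wait_for 60 5 curl -sf -o /dev/null http://172.21.6.103:8080 && echo "UI is up"
```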

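Create the cluster

Open the local assisted install service and create a cluster for ocp4s-ais.redhat.ren.

Configure the basic cluster information.

Enter your own pull secret and click Next.

On the next page, click Add host.

Click Generate Discovery ISO directly. We will customize the SSH key later, so there is no need to set it now.

Write down the download command, because we need the infra env ID it contains.

In our case, the command is: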

wget -O discovery_image_ocp4s-ais.iso 'http://127.0.0.1:8888/images/b6b173ab-f080-4378-a9e0-bb6ff02f78bb?arch=x86_64&type=full-iso&version=4.9'
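The infra env ID is the UUID right after /images/ in that download URL. Rather than copying it by hand, you can extract it with a bash regular expression. The following is a minimal sketch: extract_infra_env_id is a hypothetical helper, and it assumes the URL keeps the /images/<uuid> shape shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the UUID that follows "/images/" in a discovery ISO URL.
extract_infra_env_id() {
  local re='/images/([0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12})'
  if [[ $1 =~ $re ]]; then
    echo "${BASH_REMATCH[1]}"
  else
    return 1
  fi
}

# usage (DOWNLOAD_URL is a placeholder for the URL copied from the UI):
# INFRA_ENV_ID=$(extract_infra_env_id "$DOWNLOAD_URL")
```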

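Customize the assisted install service configuration

The ISO generated by the assisted install service expects a DHCP service on the lab network. We want static IPs, so we customize the assisted install service through its API and enable a feature that is still hidden (not officially supported yet).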
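The variable block below sets the subnet twice. SNO_NETMAST_S is the prefix length used in the nmstate YAML, and SNO_NETMAST is the dotted netmask used only by the commented-out kernel-argument variant. The two must describe the same subnet. A pure-bash conversion lets you derive one from the other so they cannot drift apart. prefix_to_netmask is a hypothetical helper, sketched under the assumption of IPv4 only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert an IPv4 prefix length (0-32) to a dotted netmask.
prefix_to_netmask() {
  local prefix=$1 i bits
  local -a octets=()
  if ! [[ $prefix =~ ^[0-9]+$ ]] || ((prefix > 32)); then
    echo "invalid prefix: $prefix" >&2
    return 1
  fi
  for ((i = 0; i < 4; i++)); do
    if ((prefix >= 8)); then
      bits=8
    else
      bits=$prefix
    fi
    # keep the top $bits bits of this octet
    octets+=($(( (0xFF << (8 - bits)) & 0xFF )))
    prefix=$((prefix - bits))
  done
  local IFS=.
  echo "${octets[*]}"
}

# usage: warn if the two variables disagree
# [ "$(prefix_to_netmask "$SNO_NETMAST_S")" = "$SNO_NETMAST" ] || echo "netmask mismatch" >&2
```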

# on helper

cd /data/sno

ASSISTED_SERVICE_URL=http://172.21.6.103:8080

# infra id is part of download url on UI

INFRA_ENV_ID=b6b173ab-f080-4378-a9e0-bb6ff02f78bb

NODE_SSH_KEY="$(cat ~/.ssh/id_rsa.pub)"

SNO_IP=192.168.7.13

SNO_GW=192.168.7.1

SNO_NETMAST=255.255.255.0

SNO_NETMAST_S=24

SNO_HOSTNAME=ocp4-sno

SNO_IF=enp1s0

SNO_IF_MAC=$(printf '00:60:2F:%02X:%02X:%02X' $((RANDOM%256)) $((RANDOM%256)) $((RANDOM%256)))

SNO_DNS=192.168.7.11

SNO_DISK=/dev/vda

SNO_CORE_PWD=redhat

echo ${SNO_IF_MAC} > /data/sno/sno.mac
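SNO_IF_MAC above appends three random octets to the fixed prefix 00:60:2F. Its first octet, 00, has the "locally administered" bit (0x02) clear, so the address sits in the globally administered space. An alternative is a locally administered unicast prefix such as 02:00:00, which the scratch notes at the end of this section also use. The helpers below are a hypothetical sketch for generating and checking such addresses before they are written to sno.mac.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the SNO_IF_MAC step.
# random_mac: append three random octets to a 3-octet prefix.
# The default prefix 02:00:00 is a locally administered unicast address.
random_mac() {
  local prefix=${1:-02:00:00}
  printf '%s:%02X:%02X:%02X\n' "$prefix" $((RANDOM % 256)) $((RANDOM % 256)) $((RANDOM % 256))
}

# is_valid_mac: six colon-separated hex octets
is_valid_mac() {
  local re='^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
  [[ $1 =~ $re ]]
}

# is_locally_administered: bit 0x02 of the first octet set, bit 0x01 (multicast) clear
is_locally_administered() {
  local first=$((16#${1%%:*}))
  (( (first & 0x02) != 0 && (first & 0x01) == 0 ))
}

# usage: SNO_IF_MAC=$(random_mac) && is_valid_mac "$SNO_IF_MAC" || echo "bad MAC" >&2
```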

cat << EOF > /data/sno/server-a.yaml
dns-resolver:
  config:
    server:
    - ${SNO_DNS}
interfaces:
- ipv4:
    address:
    - ip: ${SNO_IP}
      prefix-length: ${SNO_NETMAST_S}
    dhcp: false
    enabled: true
  name: ${SNO_IF}
  state: up
  type: ethernet
routes:
  config:
  - destination: 0.0.0.0/0
    next-hop-address: ${SNO_GW}
    next-hop-interface: ${SNO_IF}
    table-id: 254
EOF

cat << EOF > /data/sno/static.ip.bu
variant: openshift
version: 4.9.0
metadata:
  labels:
    machineconfiguration.openshift.io/role: master
  name: 99-zzz-master-static-ip
EOF

VAR_INSTALL_IMAGE_REGISTRY=quaylab.infra.redhat.ren

cat << EOF > /data/sno/install.images.bu
variant: openshift
version: 4.9.0
metadata:
  labels:
    machineconfiguration.openshift.io/role: master
  name: 99-zzz-master-install-images
storage:
  files:
    - path: /etc/containers/registries.conf.d/base.registries.conf
      overwrite: true
      contents:
        inline: |
          unqualified-search-registries = ["registry.access.redhat.com", "docker.io"]
          short-name-mode = ""

          [[registry]]
            prefix = ""
            location = "quay.io/openshift-release-dev/ocp-release"
            mirror-by-digest-only = true

            [[registry.mirror]]
              location = "${VAR_INSTALL_IMAGE_REGISTRY}/ocp4/openshift4"

            [[registry.mirror]]
              location = "${VAR_INSTALL_IMAGE_REGISTRY}/ocp4/release"

          [[registry]]
            prefix = ""
            location = "quay.io/openshift-release-dev/ocp-v4.0-art-dev"
            mirror-by-digest-only = true

            [[registry.mirror]]
              location = "${VAR_INSTALL_IMAGE_REGISTRY}/ocp4/openshift4"

            [[registry.mirror]]
              location = "${VAR_INSTALL_IMAGE_REGISTRY}/ocp4/release"
EOF

cat << EOF > /data/sno/install.crts.bu
variant: openshift
version: 4.9.0
metadata:
  labels:
    machineconfiguration.openshift.io/role: master
  name: 99-zzz-master-install-crts
storage:
  files:
    - path: /etc/pki/ca-trust/source/anchors/quaylab.crt
      overwrite: true
      contents:
        inline: |
$( cat /etc/crts/redhat.ren.ca.crt | sed 's/^/          /g' )
EOF

mkdir -p /data/sno/disconnected/

# copy ntp related config

/bin/cp -f /data/ocp4/ocp4-upi-helpernode-master/machineconfig/* /data/sno/disconnected/

# copy image registry proxy related config

cd /data/ocp4

bash image.registries.conf.sh nexus.infra.redhat.ren:8083

/bin/cp -f /data/ocp4/99-worker-container-registries.yaml /data/sno/disconnected/

/bin/cp -f /data/ocp4/99-master-container-registries.yaml /data/sno/disconnected/

cd /data/sno/

# scripts to get ignition from yaml file

# run under bash

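# 1st parameter: the file path that will be written to CoreOS on first boot

# 2nd parameter: the local file to read the content from

# sets RET_VAL (file $2 written verbatim to path $1) and RET_VAL_2 (the first file embedded in MachineConfig $2)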

get_file_content_for_ignition() {

VAR_FILE_NAME=$1

VAR_FILE_CONTENT_IN_FILE=$2

tmppath=$(mktemp)

cat << EOF > $tmppath

{

"overwrite": true,

"path": "$VAR_FILE_NAME",

"user": {

"name": "root"

},

"contents": {

"source": "data:text/plain,$(cat $VAR_FILE_CONTENT_IN_FILE | python3 -c "import sys, urllib.parse; print(urllib.parse.quote(''.join(sys.stdin.readlines())))" )"

}

}

EOF

RET_VAL=$(cat $tmppath | jq -c .)

FILE_JSON=$(cat $VAR_FILE_CONTENT_IN_FILE | python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))')

cat << EOF > $tmppath

{

"overwrite": true,

"path": "$(echo $FILE_JSON | jq -r .spec.config.storage.files[0].path )",

"user": {

"name": "root"

},

"contents": {

"source": "$( echo $FILE_JSON | jq -r .spec.config.storage.files[0].contents.source )"

}

}

EOF

# cat $tmppath

RET_VAL_2=$(cat $tmppath | jq -c .)

/bin/rm -f $tmppath

}
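The function above turns a file into an Ignition "data:text/plain," URL by percent-encoding it with Python's urllib.parse.quote. To show what that encoding does, here is a pure-bash sketch. urlencode is a hypothetical helper that assumes ASCII input, such as the YAML files used here. It keeps letters, digits, "_.-~" and "/" as quote() does by default, and escapes everything else as %XX.

```shell
#!/usr/bin/env bash
# Hypothetical helper: percent-encode an ASCII string the way
# urllib.parse.quote does with its default safe="/".
urlencode() {
  local LC_ALL=C s=$1 out='' c i
  for ((i = 0; i < ${#s}; i++)); do
    c=${s:i:1}
    case $c in
      [A-Za-z0-9_.~/-]) out+=$c ;;
      # "'x" makes printf use the character code of x
      *) printf -v c '%%%02X' "'$c"; out+=$c ;;
    esac
  done
  printf '%s\n' "$out"
}

# usage: echo "data:text/plain,$(urlencode "$(cat some.file)")"
# note: $(cat ...) drops trailing newlines, unlike the python version
```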

get_file_content_for_ignition "/opt/openshift/openshift/99-master-chrony-configuration.yaml" "/data/sno/disconnected/99-master-chrony-configuration.yaml"

VAR_99_master_chrony=$RET_VAL

VAR_99_master_chrony_2=$RET_VAL_2

get_file_content_for_ignition "/opt/openshift/openshift/99-worker-chrony-configuration.yaml" "/data/sno/disconnected/99-worker-chrony-configuration.yaml"

VAR_99_worker_chrony=$RET_VAL

VAR_99_worker_chrony_2=$RET_VAL_2

get_file_content_for_ignition "/opt/openshift/openshift/99-master-container-registries.yaml" "/data/sno/disconnected/99-master-container-registries.yaml"

VAR_99_master_container_registries=$RET_VAL

VAR_99_master_container_registries_2=$RET_VAL_2

get_file_content_for_ignition "/opt/openshift/openshift/99-worker-container-registries.yaml" "/data/sno/disconnected/99-worker-container-registries.yaml"

VAR_99_worker_container_registries=$RET_VAL

VAR_99_worker_container_registries_2=$RET_VAL_2

butane /data/sno/install.images.bu > /data/sno/disconnected/99-zzz-master-install-images.yaml

get_file_content_for_ignition "/opt/openshift/openshift/99-zzz-master-install-images.yaml" "/data/sno/disconnected/99-zzz-master-install-images.yaml"

VAR_99_master_install_images=$RET_VAL

VAR_99_master_install_images_2=$RET_VAL_2

butane /data/sno/install.crts.bu > /data/sno/disconnected/99-zzz-master-install-crts.yaml

get_file_content_for_ignition "/opt/openshift/openshift/99-zzz-master-install-crts.yaml" "/data/sno/disconnected/99-zzz-master-install-crts.yaml"

VAR_99_master_install_crts=$RET_VAL

VAR_99_master_install_crts_2=$RET_VAL_2

# https://access.redhat.com/solutions/6194821

# butane /data/sno/static.ip.bu | python3 -c 'import json, yaml, sys; print(json.dumps(yaml.load(sys.stdin)))'

# https://stackoverflow.com/questions/2854655/command-to-escape-a-string-in-bash

# VAR_PULL_SEC=`printf "%q" $(cat /data/pull-secret.json)`

# https://access.redhat.com/solutions/221403

# VAR_PWD_HASH="$(openssl passwd -1 -salt 'openshift' 'redhat')"

VAR_PWD_HASH="$(python3 -c 'import crypt,getpass; print(crypt.crypt("redhat"))')"

tmppath=$(mktemp)

butane /data/sno/static.ip.bu \

| python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))' \

| jq '.spec.config | .ignition.version = "3.1.0" ' \

| jq --arg VAR "$VAR_PWD_HASH" --arg VAR_SSH "$NODE_SSH_KEY" '.passwd.users += [{ "name": "wzh", "system": true, "passwordHash": $VAR , "sshAuthorizedKeys": [ $VAR_SSH ], "groups": [ "adm", "wheel", "sudo", "systemd-journal" ] }]' \

| jq --argjson VAR "$VAR_99_master_chrony" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_worker_chrony" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_container_registries" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_worker_container_registries" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_install_images" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_install_crts" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_chrony_2" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_container_registries_2" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_install_images_2" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_install_crts_2" '.storage.files += [$VAR] ' \

| jq -c . \

> ${tmppath}

VAR_IGNITION=$(cat ${tmppath})

rm -f ${tmppath}

# cat /run/user/0/containers/auth.json

# {

# "auths": {

# "quaylab.infra.redhat.ren": {

# "auth": "cXVheWFkbWluOnBhc3N3b3Jk"

# }

# }

# }

request_body=$(mktemp)

jq -n --arg SSH_KEY "$NODE_SSH_KEY" \

--arg NMSTATE_YAML1 "$(cat server-a.yaml)" \

--arg MAC_ADDR "$(cat /data/sno/sno.mac)" \

--arg IF_NIC "${SNO_IF}" \

--arg PULL_SEC '{"auths":{"registry.ocp4.redhat.ren:5443": {"auth": "ZHVtbXk6ZHVtbXk=","email": "noemail@localhost"},"quaylab.infra.redhat.ren": {"auth": "cXVheWFkbWluOnBhc3N3b3Jk","email": "noemail@localhost"}}}' \

--arg IGNITION "${VAR_IGNITION}" \

'{

"proxy":{"http_proxy":"","https_proxy":"","no_proxy":""},

"ssh_authorized_key":$SSH_KEY,

"pull_secret":$PULL_SEC,

"image_type":"full-iso",

"ignition_config_override":$IGNITION,

"static_network_config": [

{

"network_yaml": $NMSTATE_YAML1,

"mac_interface_map": [{"mac_address": $MAC_ADDR, "logical_nic_name": $IF_NIC}]

}

]

}' > $request_body

# inspect the generated request body

cat $request_body

# send the request to the assisted install service to apply the customization

curl -H "Content-Type: application/json" -X PATCH -d @$request_body ${ASSISTED_SERVICE_URL}/api/assisted-install/v2/infra-envs/$INFRA_ENV_ID

# {"cluster_id":"850934fd-fa64-4057-b9d2-1eeebd890e1a","cpu_architecture":"x86_64","created_at":"2022-02-11T03:54:46.632598Z","download_url":"http://127.0.0.1:8888/images/89cc84a1-2dfd-4d7e-9ca3-903342c40d60?arch=x86_64&type=full-iso&version=4.9","email_domain":"Unknown","expires_at":"0001-01-01T00:00:00.000Z","href":"/api/assisted-install/v2/infra-envs/89cc84a1-2dfd-4d7e-9ca3-903342c40d60","id":"89cc84a1-2dfd-4d7e-9ca3-903342c40d60","kind":"InfraEnv","name":"ocp4s_infra-env","openshift_version":"4.9","proxy":{"http_proxy":"","https_proxy":"","no_proxy":""},"pull_secret_set":true,"ssh_authorized_key":"ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCrkO4oLIFTwjkGON+aShlQRKwXHOf3XKrGDmpb+tQM3UcbsF2U7klsr9jBcGObQMZO7KBW8mlRu0wC2RxueBgjbqvylKoFacgVZg6PORfkclqE1gZRYFwoxDkLo2c5y5B7OhcAdlHO0eR5hZ3/0+8ZHZle0W+A0AD7qqowO2HlWLkMMt1QXFD7R0r6dzTs9u21jASGk3jjYgCOw5iHvqm2ueVDFAc4yVwNZ4MXKg5MRvqAJDYPqhaRozLE60EGIziy9SRj9HWynyNDncCdL1/IBK2z9T0JwDebD6TDNcPCtL+AeKIpaHed52PkjnFf+Q+8/0Z0iXt6GyFYlx8OkxdsiMgMxiXx43yIRaWZjx54kVtc9pB6CL50UKPQ2LjuFPIZSfaCab5KDgPRtzue82DE6Mxxg4PS+FTW32/bq1WiOxCg9ABrZ0n1CGaZWFepJkSw47wodMnvlBkcKY3Rn/SsLZVOUsJysd+b08LQgl1Fr3hjVrEQMLbyU0UxvoerYfk= root@ocp4-helper","static_network_config":"dns-resolver:\n config:\n server:\n - 172.21.1.1\ninterfaces:\n- ipv4:\n address:\n - ip: 172.21.6.13\n prefix-length: 24\n dhcp: false\n enabled: true\n name: enp1s0\n state: up\n type: ethernet\nroutes:\n config:\n - destination: 0.0.0.0/0\n next-hop-address: 172.21.6.254\n next-hop-interface: enp1s0\n table-id: 254HHHHH00:60:2F:8B:42:88=enp1s0","type":"full-iso","updated_at":"2022-02-11T04:01:14.008388Z","user_name":"admin"}

rm -f ${request_body}

# on helper

cd /data/sno/

wget -O discovery_image_ocp4s.iso "http://172.21.6.103:8888/images/${INFRA_ENV_ID}?arch=x86_64&type=full-iso&version=4.9"

# coreos-installer iso kargs modify -a \

# " ip=${SNO_IP}::${SNO_GW}:${SNO_NETMAST}:${SNO_HOSTNAME}:${SNO_IF}:none nameserver=${SNO_DNS}" \

# /data/sno/discovery_image_ocp4s.iso

/bin/mv -f /data/sno/discovery_image_ocp4s.iso /data/sno/sno.iso

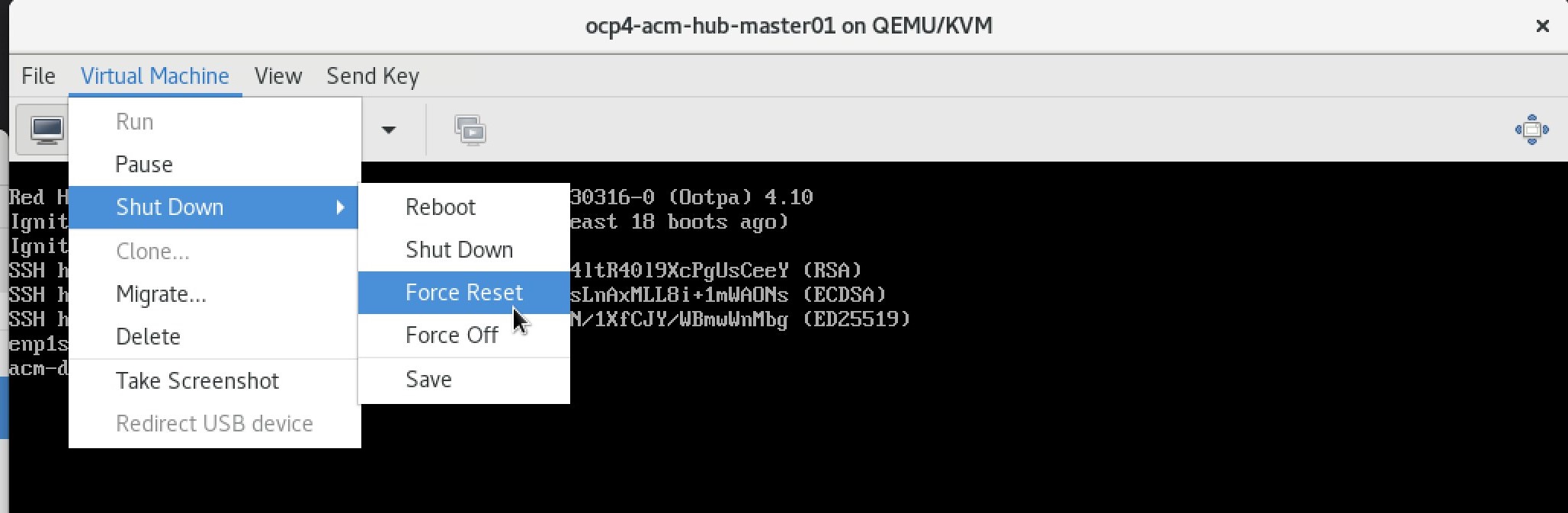

Start the KVM

Back on the KVM host, start the VM to begin installing single node OpenShift.

# back to kvm host

create_lv() {

var_vg=$1

var_lv=$2

var_size=$3

lvremove -f $var_vg/$var_lv

lvcreate -y -L $var_size -n $var_lv $var_vg

wipefs --all --force /dev/$var_vg/$var_lv

}
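create_lv runs lvremove -f before recreating the volume, so a typo in the VG or LV name destroys data immediately. A dry-run wrapper lets you review the commands before anything is executed. The following is a minimal sketch: run, DRY_RUN and create_lv_safe are hypothetical names. The LVM commands themselves are the same as in create_lv.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: print the command instead of running it when DRY_RUN is set.
run() {
  if [[ -n ${DRY_RUN:-} ]]; then
    echo "DRY-RUN: $*"
  else
    "$@"
  fi
}

# same steps as create_lv above, routed through run()
create_lv_safe() {
  local var_vg=$1 var_lv=$2 var_size=$3
  run lvremove -f "$var_vg/$var_lv"
  run lvcreate -y -L "$var_size" -n "$var_lv" "$var_vg"
  run wipefs --all --force "/dev/$var_vg/$var_lv"
}

# usage: review first, then run for real
# DRY_RUN=1 create_lv_safe vgdata lvsno 120G
# create_lv_safe vgdata lvsno 120G
```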

create_lv vgdata lvsno 120G

export KVM_DIRECTORY=/data/kvm

mkdir -p ${KVM_DIRECTORY}

cd ${KVM_DIRECTORY}

scp root@192.168.7.11:/data/sno/sno.* ${KVM_DIRECTORY}/

# on kvm host

# export KVM_DIRECTORY=/data/kvm

virt-install --name=ocp4-sno --vcpus=16 --ram=65536 \

--cpu=host-model \

--disk path=/dev/vgdata/lvsno,device=disk,bus=virtio,format=raw \

--os-variant rhel8.3 --network bridge=baremetal,model=virtio,mac=$(<sno.mac) \

--graphics vnc,port=59012 \

--boot menu=on --cdrom ${KVM_DIRECTORY}/sno.iso

在 assisted install service里面配置sno参数

回到 assisted install service webUI,能看到node已经被发现

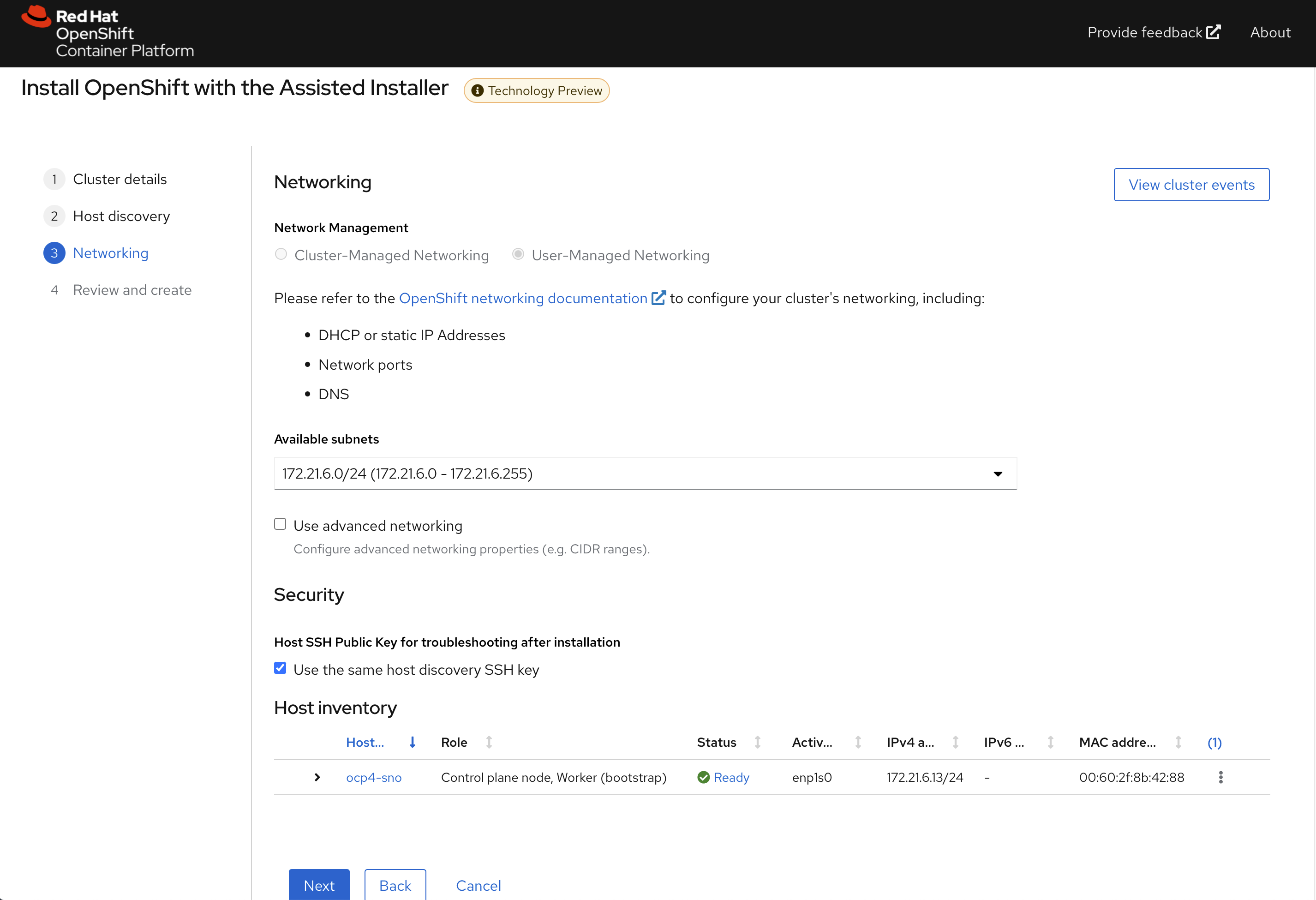

点击下一步,配置物理机的安装子网

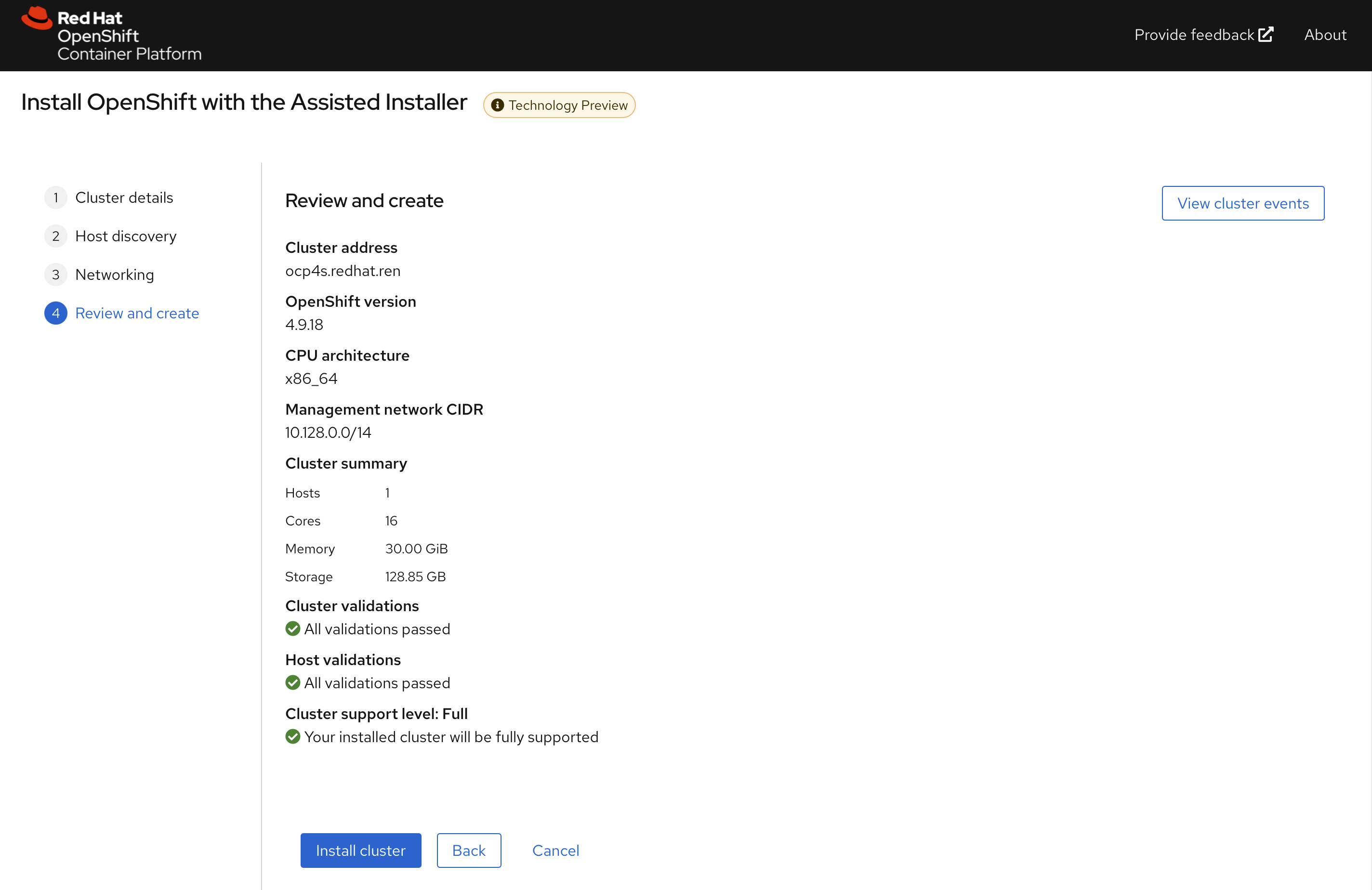

点击下一步,回顾集群配置信息

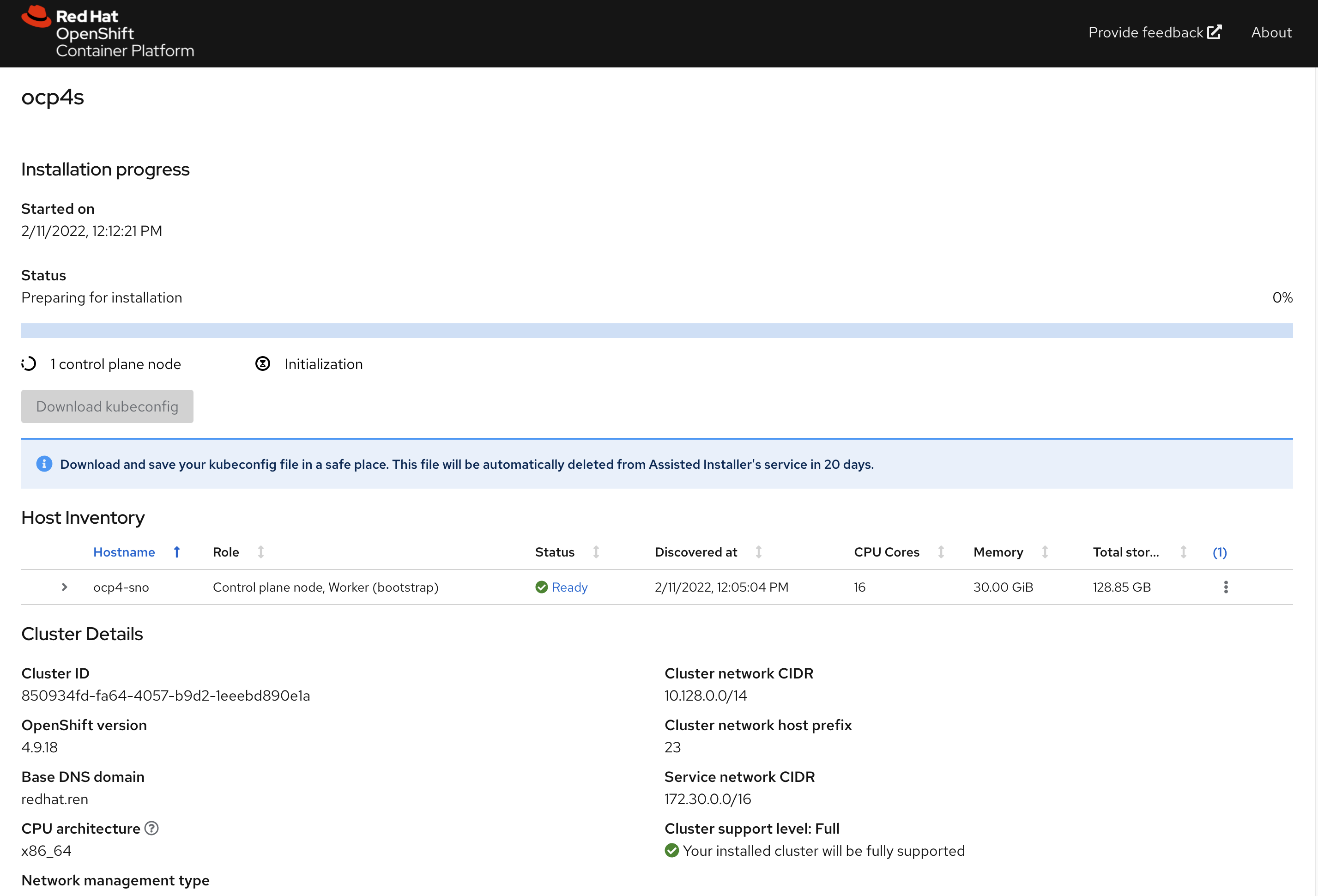

开始安装,到这里,我们等待就可以

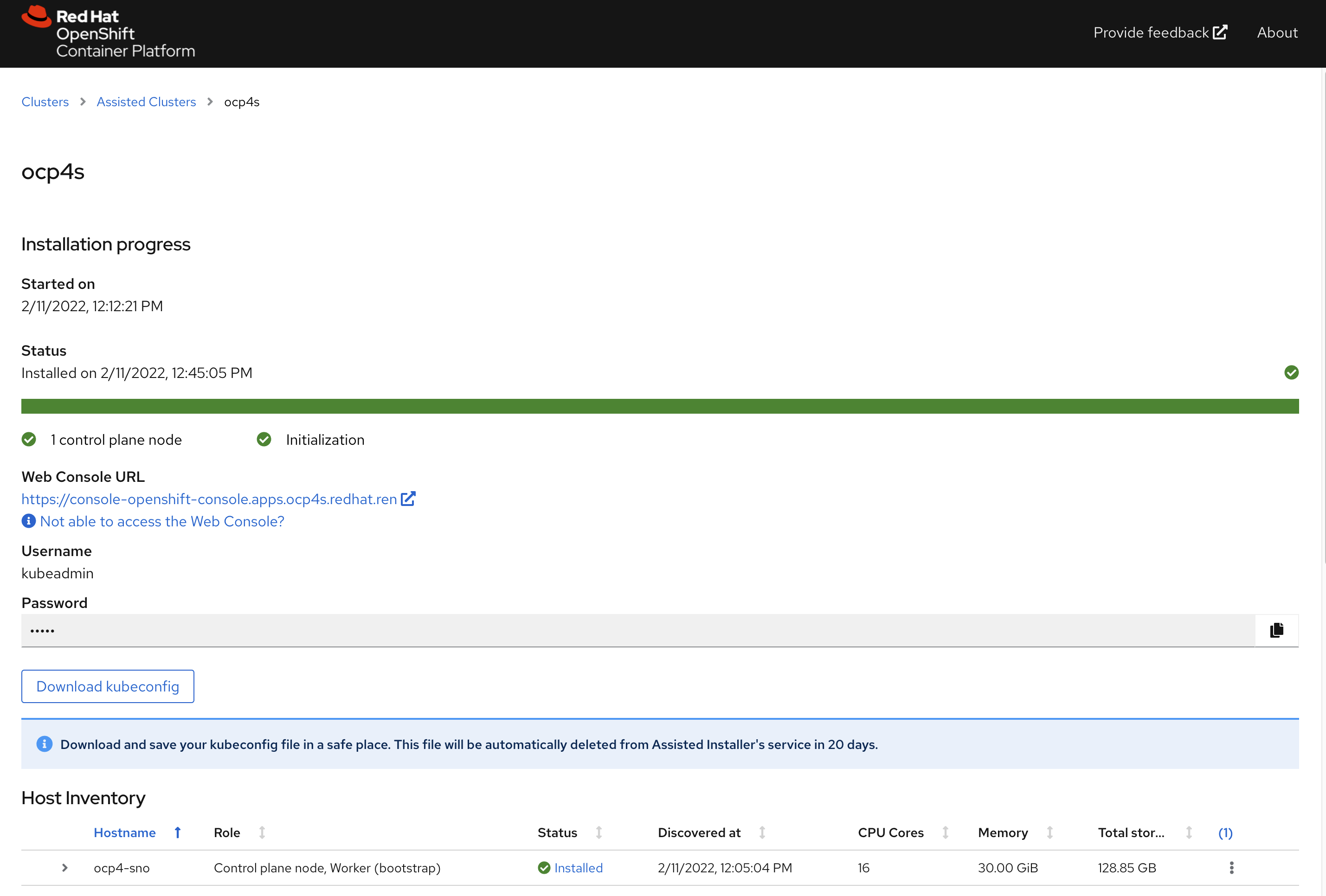

一段时间以后,通常20-30分钟,就安装完成了,当然这要网络情况比较好的条件下。

⚠️不要忘记下载集群证书,还有webUI的用户名,密码。

Access the SNO cluster

# back to helper

# copy kubeconfig from web browser to /data/sno

export KUBECONFIG=/data/sno/auth/kubeconfig

oc get node

# NAME STATUS ROLES AGE VERSION

# ocp4-sno Ready master,worker 9h v1.22.3+e790d7f

oc get co

# NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

# authentication 4.9.12 True False False 6h6m

# baremetal 4.9.12 True False False 9h

# cloud-controller-manager 4.9.12 True False False 9h

# cloud-credential 4.9.12 True False False 9h

# cluster-autoscaler 4.9.12 True False False 9h

# config-operator 4.9.12 True False False 9h

# console 4.9.12 True False False 9h

# csi-snapshot-controller 4.9.12 True False False 9h

# dns 4.9.12 True False False 6h6m

# etcd 4.9.12 True False False 9h

# image-registry 4.9.12 True False False 9h

# ingress 4.9.12 True False False 9h

# insights 4.9.12 True False False 9h

# kube-apiserver 4.9.12 True False False 9h

# kube-controller-manager 4.9.12 True False False 9h

# kube-scheduler 4.9.12 True False False 9h

# kube-storage-version-migrator 4.9.12 True False False 9h

# machine-api 4.9.12 True False False 9h

# machine-approver 4.9.12 True False False 9h

# machine-config 4.9.12 True False False 9h

# marketplace 4.9.12 True False False 9h

# monitoring 4.9.12 True False False 9h

# network 4.9.12 True False False 9h

# node-tuning 4.9.12 True False False 9h

# openshift-apiserver 4.9.12 True False False 6h4m

# openshift-controller-manager 4.9.12 True False False 9h

# openshift-samples 4.9.12 True False False 6h4m

# operator-lifecycle-manager 4.9.12 True False False 9h

# operator-lifecycle-manager-catalog 4.9.12 True False False 9h

# operator-lifecycle-manager-packageserver 4.9.12 True False False 9h

# service-ca 4.9.12 True False False 9h

# storage 4.9.12 True False False 9h

Access the cluster web console

https://console-openshift-console.apps.ocp4s-ais.redhat.ren/

Username / password: kubeadmin / Sb7Fp-U466I-SkPB4-6bpEn

reference

https://github.com/openshift/assisted-service/tree/master/docs/user-guide

- https://access.redhat.com/solutions/6135171

- https://github.com/openshift/assisted-service/blob/master/docs/user-guide/assisted-service-on-local.md

- https://github.com/openshift/assisted-service/blob/master/docs/user-guide/restful-api-guide.md

search

- pre-network-manager-config.sh

- /Users/wzh/Desktop/dev/assisted-service/internal/constants/scripts.go

- NetworkManager

https://superuser.com/questions/218340/how-to-generate-a-valid-random-mac-address-with-bash-shell

end

cat << EOF > test

02:00:00:2c:23:a5=enp1s0

EOF

cat test | cut -d= -f1 | tr '[:lower:]' '[:upper:]'
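The scratch lines above pull the MAC out of a "mac=nic" entry such as 02:00:00:2c:23:a5=enp1s0. The same "mac=nic" shape appears at the end of static_network_config in the PATCH response. Bash parameter expansion can do this without cut and tr. parse_mac_map is a hypothetical helper, and ${var^^} requires bash 4 or later.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split a "mac=nic" entry into an upper-cased MAC and the NIC name.
parse_mac_map() {
  local entry=$1
  [[ $entry == *=* ]] || return 1
  local mac=${entry%%=*} nic=${entry#*=}
  printf '%s %s\n' "${mac^^}" "$nic"
}

# usage: read -r mac nic < <(parse_mac_map "$(cat test)")
```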

printf '00-60-2F-%02X-%02X-%02X\n' $((RANDOM%256)) $((RANDOM%256)) $((RANDOM%256))

virsh domifaddr freebsd11.1

cat configmap.yml | python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))' | jq -r .data.OS_IMAGES | jq '.[] | select( .openshift_version == "4.9" and .cpu_architecture == "x86_64" ) ' | jq .

# {

# "openshift_version": "4.9",

# "cpu_architecture": "x86_64",

# "url": "https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.9/4.9.0/rhcos-4.9.0-x86_64-live.x86_64.iso",

# "rootfs_url": "https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.9/4.9.0/rhcos-live-rootfs.x86_64.img",

# "version": "49.84.202110081407-0"

# }

cat configmap.yml | python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))' | jq -r .data.RELEASE_IMAGES | jq -r .

# [

# {

# "openshift_version": "4.6",

# "cpu_architecture": "x86_64",

# "url": "quay.io/openshift-release-dev/ocp-release:4.6.16-x86_64",

# "version": "4.6.16"

# },

# {

# "openshift_version": "4.7",

# "cpu_architecture": "x86_64",

# "url": "quay.io/openshift-release-dev/ocp-release:4.7.42-x86_64",

# "version": "4.7.42"

# },

# {

# "openshift_version": "4.8",

# "cpu_architecture": "x86_64",

# "url": "quay.io/openshift-release-dev/ocp-release:4.8.29-x86_64",

# "version": "4.8.29"

# },

# {

# "openshift_version": "4.9",

# "cpu_architecture": "x86_64",

# "url": "quay.io/openshift-release-dev/ocp-release:4.9.18-x86_64",

# "version": "4.9.18",

# "default": true

# },

# {

# "openshift_version": "4.9",

# "cpu_architecture": "arm64",

# "url": "quay.io/openshift-release-dev/ocp-release:4.9.18-aarch64",

# "version": "4.9.18"

# },

# {

# "openshift_version": "4.10",

# "cpu_architecture": "x86_64",

# "url": "quay.io/openshift-release-dev/ocp-release:4.10.0-rc.0-x86_64",

# "version": "4.10.0-rc.0"

# }

# ]

cat << EOF > /data/sno/static.ip.bu

variant: openshift

version: 4.9.0

metadata:

labels:

machineconfiguration.openshift.io/role: master

name: 99-zzz-master-static-ip

# passwd:

# users:

# name: wzh

# password_hash: "$(openssl passwd -1 wzh)"

# storage:

# files:

# - path: /etc/NetworkManager/system-connections/${SNO_IF}.nmconnection

# overwrite: true

# contents:

# inline: |

# [connection]

# id=${SNO_IF}

# type=ethernet

# autoconnect-retries=1

# interface-name=${SNO_IF}

# multi-connect=1

# permissions=

# wait-device-timeout=60000

# [ethernet]

# mac-address-blacklist=

# [ipv4]

# address1=${SNO_IP}/${SNO_NETMAST_S=24},${SNO_GW}

# dhcp-hostname=${SNO_HOSTNAME}

# dhcp-timeout=90

# dns=${SNO_DNS};

# dns-search=

# may-fail=false

# method=manual

# [ipv6]

# addr-gen-mode=eui64

# dhcp-hostname=${SNO_HOSTNAME}

# dhcp-timeout=90

# dns-search=

# method=disabled

# [proxy]

EOF

# https://access.redhat.com/solutions/221403

# VAR_PWD_HASH="$(openssl passwd -1 -salt 'openshift' 'redhat')"

VAR_PWD_HASH="$(python3 -c 'import crypt,getpass; print(crypt.crypt("redhat"))')"

tmppath=$(mktemp)

butane /data/sno/static.ip.bu \

| python3 -c 'import json, yaml, sys; print(json.dumps(yaml.safe_load(sys.stdin)))' \

| jq '.spec.config | .ignition.version = "3.1.0" ' \

| jq --arg VAR "$VAR_PWD_HASH" --arg VAR_SSH "$NODE_SSH_KEY" '.passwd.users += [{ "name": "wzh", "system": true, "passwordHash": $VAR , "sshAuthorizedKeys": [ $VAR_SSH ], "groups": [ "adm", "wheel", "sudo", "systemd-journal" ] }]' \

| jq --argjson VAR "$VAR_99_master_chrony" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_worker_chrony" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_container_registries" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_worker_container_registries" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_install_images" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_install_crts" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_chrony_2" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_container_registries_2" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_install_images_2" '.storage.files += [$VAR] ' \

| jq --argjson VAR "$VAR_99_master_install_crts_2" '.storage.files += [$VAR] ' \

| jq -c . \

> ${tmppath}

VAR_IGNITION=$(cat ${tmppath})

rm -f ${tmppath}

bottom

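OpenShift 4.6 offline baremetal installation with static IPs, including Operator Hub

Installation walkthrough video

This article describes how to install OCP 4.6 on baremetal (simulated with KVM) using static IPs, including the Operator Hub steps.

Offline installation package download

The OCP 4 offline installation package is prepared differently from 3.11. Follow the steps below. A default baremetal installation also requires DHCP and PXE, so a helper machine is needed that runs DHCP, TFTP, HAProxy and similar tools. To make on-site project work easier, a tool for modifying ignition files is included as well, so the offline package bundles a few third-party tools.

https://github.com/wangzheng422/ocp4-upi-helpernode is the tool used to build the helper machine.

https://github.com/wangzheng422/filetranspiler is the tool used to modify ignition files.

The packaged installation bundle, version 4.6.5, can be downloaded from Baidu Pan:

Link: https://pan.baidu.com/s/1-5QWpayV2leinq4DOtiFEg Password: gjoe

It contains the following types of files:

- ocp4.tgz: installation media such as ISOs, various installation scripts, and the list of all downloaded images. Copy it to both the host machine and the helper machine.

- registry.tgz: the packaged docker image registry storage. If you need to add more images first, follow 4.6.add.image.md.

- install.image.tgz: supplementary images needed while installing the cluster.

- rhel-data.7.9.tgz: the yum repository for RHEL 7 hosts. It is large because it also contains GPU, EPEL and other packages. It is mainly used to install the host machine, the helper machine, and RHEL compute nodes.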

Merge the split parts with a command like the following:

cat registry.?? > registry.tgz
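The large archives are shipped as split parts (registry.aa, registry.ab, ...), and cat with the ?? glob rejoins them in alphabetical order. The sketch below shows one way to produce such parts and to verify the rejoined file with a checksum. split_bundle and merge_bundle are hypothetical helpers. The published download does not necessarily ship a .sha256 file.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: split a large archive into fixed-size parts and rejoin them.
# split's default suffixes (aa, ab, ...) sort correctly, so "cat base.??" restores the order.
split_bundle() {
  local file=$1 size=$2
  sha256sum "$file" > "$file.sha256"   # record a checksum before splitting
  split -b "$size" "$file" "${file%.tgz}."
}

merge_bundle() {
  local base=$1
  cat "$base".?? > "$base.tgz"
  sha256sum -c "$base.tgz.sha256"      # non-zero exit if the rejoined file differs
}

# usage on the build host:   split_bundle registry.tgz 4G
# usage on the offline host: merge_bundle registry
```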

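Prepare the offline installation source on an internet-connected cloud host

The documentation for preparing the offline installation media has moved to: 4.6.build.dist.md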

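Prepare the host machine

This lab runs on a single host with 32 cores and 256 GB of RAM, with many VMs for the installation test, so we prepare this host first.

If you use more than one host, make sure their clocks are synchronized. Otherwise certificate validation will fail.

The main preparation tasks are:

- Configure the yum repository

- Configure DNS

- Install the image registry

- Set up the VNC environment

- Configure the networks needed by KVM

- Create the helper VM

- Configure an HAProxy to forward external traffic to the KVM VMs

Of these, the DNS part needs to be adjusted to the actual project environment.

The host in this lab runs RHEL 7.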

cat << EOF >> /etc/hosts

127.0.0.1 registry.ocp4.redhat.ren

EOF

# prepare the yum repository

mkdir /etc/yum.repos.d.bak

mv /etc/yum.repos.d/* /etc/yum.repos.d.bak

cat << EOF > /etc/yum.repos.d/remote.repo

[remote]

name=RHEL FTP

baseurl=ftp://127.0.0.1/data

enabled=1

gpgcheck=0

EOF

yum clean all

yum repolist

yum -y install byobu htop

systemctl disable --now firewalld

# configure the registry

mkdir -p /etc/crts/ && cd /etc/crts

openssl req \

-newkey rsa:2048 -nodes -keyout redhat.ren.key \

-x509 -days 3650 -out redhat.ren.crt -subj \

"/C=CN/ST=GD/L=SZ/O=Global Security/OU=IT Department/CN=*.ocp4.redhat.ren" \

-config <(cat /etc/pki/tls/openssl.cnf \

<(printf "[SAN]\nsubjectAltName=DNS:registry.ocp4.redhat.ren,DNS:*.ocp4.redhat.ren,DNS:*.redhat.ren"))

/bin/cp -f /etc/crts/redhat.ren.crt /etc/pki/ca-trust/source/anchors/

update-ca-trust extract

cd /data

mkdir -p /data/registry

# tar zxf registry.tgz

yum -y install podman docker-distribution pigz skopeo

# pigz -dc registry.tgz | tar xf -

cat << EOF > /etc/docker-distribution/registry/config.yml
version: 0.1
log:
  fields:
    service: registry
storage:
  cache:
    layerinfo: inmemory
  filesystem:
    rootdirectory: /data/4.6.5/registry
  delete:
    enabled: true
http:
  addr: :5443
  tls:
    certificate: /etc/crts/redhat.ren.crt
    key: /etc/crts/redhat.ren.key
compatibility:
  schema1:
    enabled: true
EOF

# systemctl restart docker

# systemctl stop docker-distribution

systemctl enable --now docker-distribution

# systemctl restart docker-distribution

# podman login registry.redhat.ren:5443 -u a -p a

# firewall-cmd --permanent --add-port=5443/tcp

# firewall-cmd --reload

# load additional images

# (extract ocp4.tgz first)

bash add.image.load.sh /data/4.6.5/install.image 'registry.ocp4.redhat.ren:5443'

# https://github.com/christianh814/ocp4-upi-helpernode/blob/master/docs/quickstart.md

# prepare the vnc environment

yum -y install tigervnc-server tigervnc gnome-terminal gnome-session \

gnome-classic-session gnome-terminal nautilus-open-terminal \

control-center liberation-mono-fonts google-noto-sans-cjk-fonts \

google-noto-sans-fonts fonts-tweak-tool

yum install -y qgnomeplatform xdg-desktop-portal-gtk \

NetworkManager-libreswan-gnome PackageKit-command-not-found \

PackageKit-gtk3-module abrt-desktop at-spi2-atk at-spi2-core \

avahi baobab caribou caribou-gtk2-module caribou-gtk3-module \

cheese compat-cheese314 control-center dconf empathy eog \

evince evince-nautilus file-roller file-roller-nautilus \

firewall-config firstboot fprintd-pam gdm gedit glib-networking \

gnome-bluetooth gnome-boxes gnome-calculator gnome-classic-session \

gnome-clocks gnome-color-manager gnome-contacts gnome-dictionary \

gnome-disk-utility gnome-font-viewer gnome-getting-started-docs \

gnome-icon-theme gnome-icon-theme-extras gnome-icon-theme-symbolic \

gnome-initial-setup gnome-packagekit gnome-packagekit-updater \

gnome-screenshot gnome-session gnome-session-xsession \

gnome-settings-daemon gnome-shell gnome-software gnome-system-log \

gnome-system-monitor gnome-terminal gnome-terminal-nautilus \

gnome-themes-standard gnome-tweak-tool nm-connection-editor orca \

redhat-access-gui sane-backends-drivers-scanners seahorse \

setroubleshoot sushi totem totem-nautilus vinagre vino \

xdg-user-dirs-gtk yelp

yum install -y cjkuni-uming-fonts dejavu-sans-fonts \

dejavu-sans-mono-fonts dejavu-serif-fonts gnu-free-mono-fonts \

gnu-free-sans-fonts gnu-free-serif-fonts \

google-crosextra-caladea-fonts google-crosextra-carlito-fonts \

google-noto-emoji-fonts jomolhari-fonts khmeros-base-fonts \

liberation-mono-fonts liberation-sans-fonts liberation-serif-fonts \

lklug-fonts lohit-assamese-fonts lohit-bengali-fonts \

lohit-devanagari-fonts lohit-gujarati-fonts lohit-kannada-fonts \

lohit-malayalam-fonts lohit-marathi-fonts lohit-nepali-fonts \

lohit-oriya-fonts lohit-punjabi-fonts lohit-tamil-fonts \

lohit-telugu-fonts madan-fonts nhn-nanum-gothic-fonts \

open-sans-fonts overpass-fonts paktype-naskh-basic-fonts \

paratype-pt-sans-fonts sil-abyssinica-fonts sil-nuosu-fonts \

sil-padauk-fonts smc-meera-fonts stix-fonts \

thai-scalable-waree-fonts ucs-miscfixed-fonts vlgothic-fonts \

wqy-microhei-fonts wqy-zenhei-fonts

vncpasswd

cat << EOF > ~/.vnc/xstartup

#!/bin/sh

unset SESSION_MANAGER

unset DBUS_SESSION_BUS_ADDRESS

vncconfig &

gnome-session &

EOF

chmod +x ~/.vnc/xstartup

vncserver :1 -geometry 1280x800

# to stop the vnc server later, run:

# vncserver -kill :1

# firewall-cmd --permanent --add-port=6001/tcp

# firewall-cmd --permanent --add-port=5901/tcp

# firewall-cmd --reload

# connect vnc at port 5901

# export DISPLAY=:1

# https://www.cyberciti.biz/faq/how-to-install-kvm-on-centos-7-rhel-7-headless-server/

# set up the kvm environment

yum -y install qemu-kvm libvirt libvirt-python libguestfs-tools virt-install virt-viewer virt-manager

systemctl enable libvirtd

systemctl start libvirtd

lsmod | grep -i kvm

brctl show

virsh net-list

virsh net-dumpxml default

# create the virtual network for the lab

cat << EOF > /data/virt-net.xml

<network>

<name>openshift4</name>

<forward mode='nat'>

<nat>

<port start='1024' end='65535'/>

</nat>

</forward>

<bridge name='openshift4' stp='on' delay='0'/>

<domain name='openshift4'/>

<ip address='192.168.7.1' netmask='255.255.255.0'>

</ip>

</network>

EOF

virsh net-define --file virt-net.xml

virsh net-autostart openshift4

virsh net-start openshift4

# to roll back (optional), remove the network again:

# virsh net-destroy openshift4

# virsh net-undefine openshift4

# create the helper vm

mkdir -p /data/kvm

cd /data/kvm

lvremove -f datavg/helperlv

lvcreate -y -L 430G -n helperlv datavg

virt-install --name="ocp4-aHelper" --vcpus=2 --ram=4096 \

--disk path=/dev/datavg/helperlv,device=disk,bus=virtio,format=raw \

--os-variant centos7.0 --network network=openshift4,model=virtio \

--boot menu=on --location /data/kvm/rhel-server-7.8-x86_64-dvd.iso \

--initrd-inject helper-ks.cfg --extra-args "inst.ks=file:/helper-ks.cfg"

# virt-viewer --domain-name ocp4-aHelper

# virsh start ocp4-aHelper

# virsh list --all

# start chrony/ntp server on host

cat << EOF > /etc/chrony.conf

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

allow 192.0.0.0/8

local stratum 10

logdir /var/log/chrony

EOF

systemctl enable --now chronyd

# systemctl restart chronyd

chronyc tracking

chronyc sources -v

chronyc sourcestats -v

chronyc makestep

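Prepare the helper machine

The following installation steps are performed inside the helper machine.

The main steps are:

- Configure the yum repository

- Run the ansible playbook to configure the helper machine automatically

- Upload the customized installation configuration files

- Generate the ignition files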

sed -i 's/#UseDNS yes/UseDNS no/g' /etc/ssh/sshd_config

systemctl restart sshd

cat << EOF > /root/.ssh/config

StrictHostKeyChecking no

UserKnownHostsFile=/dev/null

EOF

# in helper node

mkdir /etc/yum.repos.d.bak

mv /etc/yum.repos.d/* /etc/yum.repos.d.bak/

cat << EOF > /etc/yum.repos.d/remote.repo

[remote]

name=RHEL FTP

baseurl=ftp://192.168.7.1/data

enabled=1

gpgcheck=0

EOF

yum clean all

yum repolist

yum -y install ansible git unzip podman python36

mkdir -p /data/ocp4/

# scp ocp4.tgz to /data

cd /data

tar zvxf ocp4.tgz

cd /data/ocp4

# an ansible project is used here to deploy the services on the helper node.

# https://github.com/wangzheng422/ocp4-upi-helpernode

unzip ocp4-upi-helpernode.zip

# an ignition-file merging project is used here to help customize ignition files.

# https://github.com/wangzheng422/filetranspiler

podman load -i filetranspiler.tgz

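# next, use ansible to configure the helper node and install the services an openshift cluster needs

# adjust ocp4-upi-helpernode-master/vars-static.yaml for your environment

# mainly the NIC and disk parameters of each node, and the IP addresses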

cd /data/ocp4/ocp4-upi-helpernode-master

ansible-playbook -e @vars-static.yaml -e '{staticips: true}' tasks/main.yml

# try this:

/usr/local/bin/helpernodecheck

mkdir -p /data/install

# GOTO image registry host

# copy crt files to helper node

scp /etc/crts/redhat.ren.ca.crt root@192.168.7.11:/data/install/

scp /etc/crts/redhat.ren.crt root@192.168.7.11:/data/install/

scp /etc/crts/redhat.ren.key root@192.168.7.11:/data/install/

# GO back to help node

/bin/cp -f /data/install/redhat.ren.crt /etc/pki/ca-trust/source/anchors/

update-ca-trust extract

# customize ignition

cd /data/install

# adjust install-config.yaml for your environment

# at minimum change the ssh key and additionalTrustBundle (the CA certificate of the image registry)

# vi install-config.yaml

cat << EOF > /data/install/install-config.yaml
apiVersion: v1
baseDomain: redhat.ren
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 3
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ocp4
networking:
  clusterNetworks:
  - cidr: 10.254.0.0/16
    hostPrefix: 24
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: '{"auths":{"registry.ocp4.redhat.ren:5443": {"auth": "ZHVtbXk6ZHVtbXk=","email": "noemail@localhost"},"registry.ppa.redhat.ren:5443": {"auth": "ZHVtbXk6ZHVtbXk=","email": "noemail@localhost"}}}'
sshKey: |
$( cat /root/.ssh/helper_rsa.pub | sed 's/^/ /g' )
additionalTrustBundle: |
$( cat /data/install/redhat.ren.ca.crt | sed 's/^/ /g' )
imageContentSources:
- mirrors:
  - registry.ocp4.redhat.ren:5443/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-release
- mirrors:
  - registry.ocp4.redhat.ren:5443/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-v4.0-art-dev
EOF
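The $( cat ... | sed 's/^/ /g' ) lines embed a multi-line file under a YAML literal block ("key: |"). Every embedded line must be indented further than the key that owns the block. For top-level keys such as sshKey and additionalTrustBundle, a single space is enough. For a deeply nested key, such as inline: | in a Butane file, the padding must exceed that key's own indentation. indent_file is a hypothetical helper that makes the padding width explicit.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a file with every line prefixed by N spaces,
# so it can be embedded under a YAML literal block ("key: |") inside a heredoc.
indent_file() {
  local spaces=$1 file=$2 pad
  printf -v pad '%*s' "$spaces" ''
  sed "s/^/${pad}/" "$file"
}

# usage inside a heredoc (the $( ... ) line itself starts at column 0):
#   sshKey: |
#   $(indent_file 3 /root/.ssh/helper_rsa.pub)
```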

cd /data/install/

/bin/rm -rf *.ign .openshift_install_state.json auth bootstrap manifests master*[0-9] worker*[0-9]

openshift-install create ignition-configs --dir=/data/install

cd /data/ocp4/ocp4-upi-helpernode-master

# copy a host-specific ign for each host into the web server directory

ansible-playbook -e @vars-static.yaml -e '{staticips: true}' tasks/ign.yml

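# if a host needs its own ign customization, modify its ign at this step.

# the commands below were originally meant to set the NIC address, but in practice that turned out to be unnecessary.

# they are kept because this technique can inject files at install time, which is very useful.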

# mkdir -p bootstrap/etc/sysconfig/network-scripts/

# cat <<EOF > bootstrap/etc/sysconfig/network-scripts/ifcfg-ens3

# DEVICE=ens3

# BOOTPROTO=none

# ONBOOT=yes

# IPADDR=192.168.7.12

# NETMASK=255.255.255.0

# GATEWAY=192.168.7.1

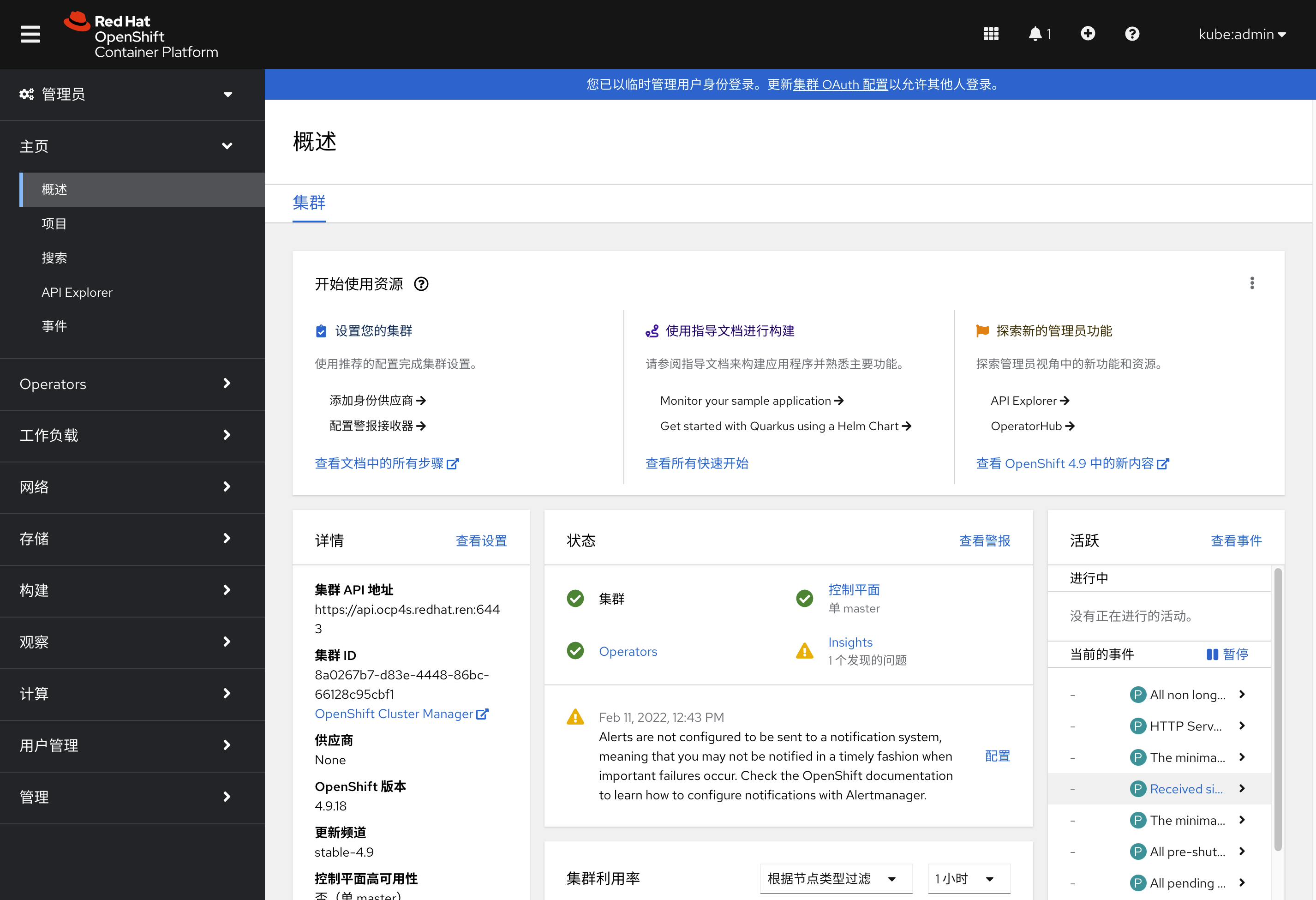

# DNS=192.168.7.11

# DNS1=192.168.7.11

# DNS2=192.168.7.1

# DOMAIN=redhat.ren

# PREFIX=24

# DEFROUTE=yes

# IPV6INIT=no

# EOF

# filetranspiler -i bootstrap.ign -f bootstrap -o bootstrap-static.ign

# /bin/cp -f bootstrap-static.ign /var/www/html/ignition/

# build a separate ISO for each node

cd /data/ocp4/ocp4-upi-helpernode-master

ansible-playbook -e @vars-static.yaml -e '{staticips: true}' tasks/iso.yml

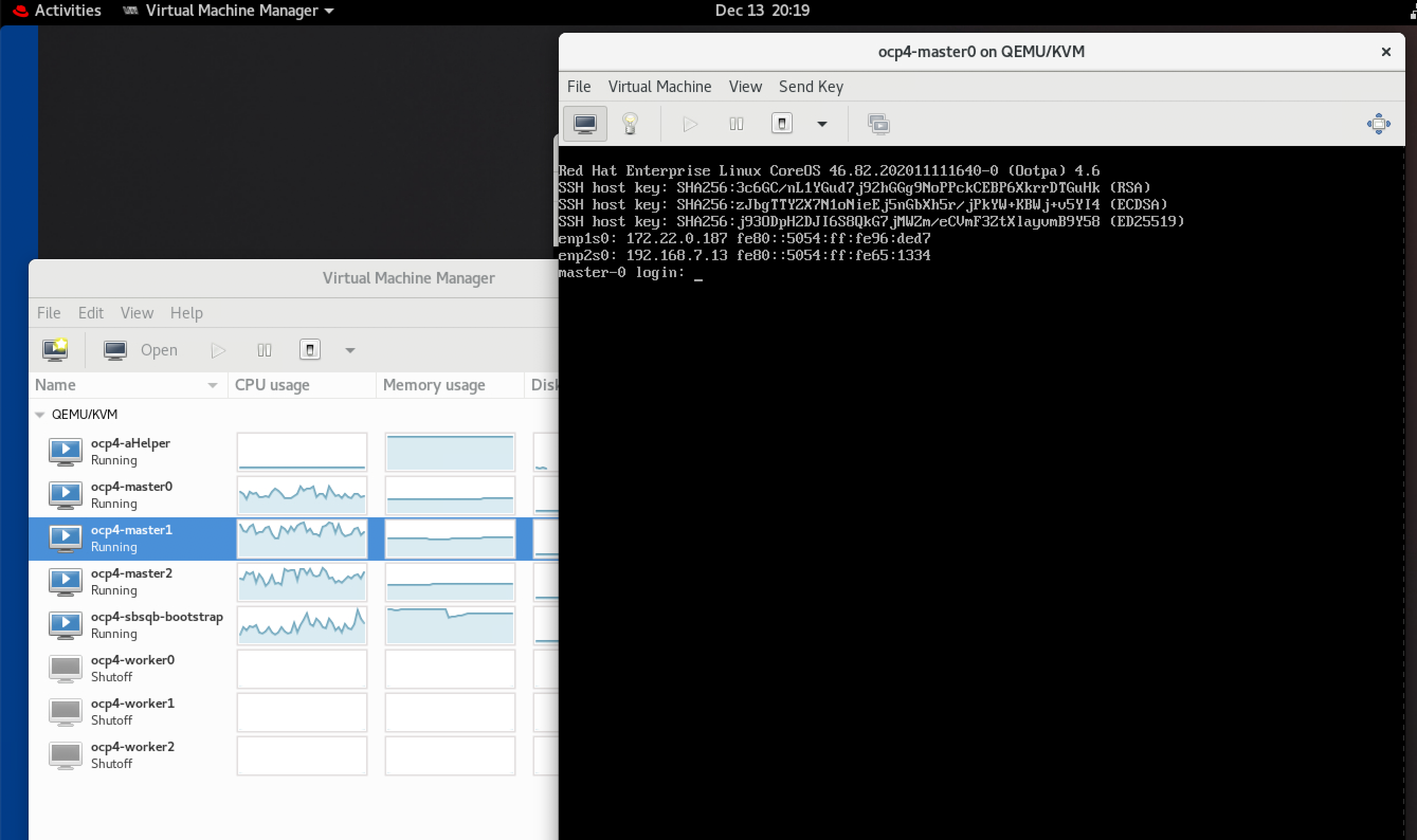

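Back on the KVM host

At this point we could in principle start the installation. However, installing CoreOS requires typing a very long kernel command line by hand, and in practice it is almost impossible to get it right: a single wrong character makes the installation fail, and you have to reboot and type it all over again...

To avoid this tedium, following approaches found online, we build a customized ISO for each host. Fortunately, the earlier ansible step has already created the required ISOs, so we only need to copy them to the KVM host and continue.

One catch: we do not know the NIC name in advance. The only way to find it is to boot once from the CoreOS ISO, drop into single-user mode, and run ip a. It is usually ens3.

Likewise, when installing on physical machines, use the same method to find out which disk to install to. It is also a good idea to install RHEL 8 on the physical machine first, to check whether the hardware supports CoreOS. If a physical machine does not write to disk during installation, try adding the boot parameter ignition.firstboot=1.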
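To show why typing this by hand is error-prone, here is a sketch that assembles the kind of kernel command line a per-host ISO bakes in. It assumes the RHCOS 4.6 live-ISO arguments `coreos.inst.install_dev` and `coreos.inst.ignition_url` together with the dracut `ip=` syntax; the function name `build_rhcos_kargs`, its argument order, and the ignition URL used in the test are hypothetical and are not taken from the ansible playbook:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build an RHCOS installer kernel command line for one node.
# ip= follows dracut syntax: ip=<ip>::<gateway>:<netmask>:<hostname>:<nic>:none
build_rhcos_kargs() {
  local ip=$1 gw=$2 mask=$3 host=$4 nic=$5 dns=$6 disk=$7 ign_url=$8
  printf 'ip=%s::%s:%s:%s:%s:none nameserver=%s coreos.inst.install_dev=%s coreos.inst.ignition_url=%s\n' \
    "$ip" "$gw" "$mask" "$host" "$nic" "$dns" "$disk" "$ign_url"
}
```

With master-0's values from this setup (192.168.7.13, gateway 192.168.7.1, DNS 192.168.7.11, NIC ens3), the result is already well over 150 characters, which is exactly what the custom ISOs spare us from typing.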
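Once you are in a shell on the live ISO, the NIC and disk names can be picked out of standard tool output instead of read by eye. A sketch, assuming `ip -o link show` and `lsblk -dn -o NAME,TYPE` are available in the live environment; the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Print non-loopback interface names from `ip -o link show` output (read on stdin).
# Names such as "eth0@if5" (veth peers) are trimmed at the "@".
list_nics() {
  awk -F': ' '$2 != "lo" { split($2, name, "@"); print name[1] }'
}

# Print whole-disk device names from `lsblk -dn -o NAME,TYPE` output (read on stdin).
list_disks() {
  awk '$2 == "disk" { print $1 }'
}
```

Usage: `ip -o link show | list_nics` and `lsblk -dn -o NAME,TYPE | list_disks`.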

# on kvm host

export KVM_DIRECTORY=/data/kvm

cd ${KVM_DIRECTORY}

scp root@192.168.7.11:/data/install/*.iso ${KVM_DIRECTORY}/

create_lv() {
  var_name=$1
  lvremove -f datavg/$var_name
  lvcreate -y -L 120G -n $var_name datavg
  # wipefs --all --force /dev/datavg/$var_name
}

create_lv bootstraplv

create_lv master0lv

create_lv master1lv

create_lv master2lv

create_lv worker0lv

create_lv worker1lv

create_lv worker2lv

# finally, we can start install :)

# you can create all the VMs in one go, then sit back with a coffee.

# from this step until the installation finishes takes about 30 minutes.

virt-install --name=ocp4-bootstrap --vcpus=4 --ram=8192 \
  --disk path=/dev/datavg/bootstraplv,device=disk,bus=virtio,format=raw \
  --os-variant rhel8.0 --network network=openshift4,model=virtio \
  --boot menu=on --cdrom ${KVM_DIRECTORY}/rhcos_install-bootstrap.iso

# want to log in to CoreOS and take a look? do this:

# ssh core@bootstrap

# journalctl -b -f -u bootkube.service

virt-install --name=ocp4-master0 --vcpus=4 --ram=16384 \
  --disk path=/dev/datavg/master0lv,device=disk,bus=virtio,format=raw \
  --os-variant rhel8.0 --network network=openshift4,model=virtio \
  --boot menu=on --cdrom ${KVM_DIRECTORY}/rhcos_install-master-0.iso

# ssh core@192.168.7.13

virt-install --name=ocp4-master1 --vcpus=4 --ram=16384 \
  --disk path=/dev/datavg/master1lv,device=disk,bus=virtio,format=raw \
  --os-variant rhel8.0 --network network=openshift4,model=virtio \
  --boot menu=on --cdrom ${KVM_DIRECTORY}/rhcos_install-master-1.iso

virt-install --name=ocp4-master2 --vcpus=4 --ram=16384 \
  --disk path=/dev/datavg/master2lv,device=disk,bus=virtio,format=raw \
  --os-variant rhel8.0 --network network=openshift4,model=virtio \
  --boot menu=on --cdrom ${KVM_DIRECTORY}/rhcos_install-master-2.iso

virt-install --name=ocp4-worker0 --vcpus=4 --ram=32768 \
  --disk path=/dev/datavg/worker0lv,device=disk,bus=virtio,format=raw \
  --os-variant rhel8.0 --network network=openshift4,model=virtio \
  --boot menu=on --cdrom ${KVM_DIRECTORY}/rhcos_install-worker-0.iso

virt-install --name=ocp4-worker1 --vcpus=4 --ram=16384 \
  --disk path=/dev/datavg/worker1lv,device=disk,bus=virtio,format=raw \
  --os-variant rhel8.0 --network network=openshift4,model=virtio \
  --boot menu=on --cdrom ${KVM_DIRECTORY}/rhcos_install-worker-1.iso

virt-install --name=ocp4-worker2 --vcpus=4 --ram=16384 \
  --disk path=/dev/datavg/worker2lv,device=disk,bus=virtio,format=raw \
  --os-variant rhel8.0 --network network=openshift4,model=virtio \
  --boot menu=on --cdrom ${KVM_DIRECTORY}/rhcos_install-worker-2.iso
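The seven `virt-install` calls above differ only in the VM name, memory size, logical volume and ISO. A sketch that drives them from one table; it assumes the LVs were already created with `create_lv`, the `VIRT_INSTALL` override (set it to `echo` for a dry run) is a hypothetical convenience, and it adds `--noautoconsole` plus `< /dev/null` so the loop does not block on a console, which differs from the commands above:

```shell
#!/usr/bin/env bash
# Sketch: create all cluster VMs from one table instead of seven hand-written calls.
KVM_DIRECTORY=${KVM_DIRECTORY:-/data/kvm}

# name:memory(MiB):iso-suffix
NODES="bootstrap:8192:bootstrap
master0:16384:master-0
master1:16384:master-1
master2:16384:master-2
worker0:32768:worker-0
worker1:16384:worker-1
worker2:16384:worker-2"

create_vms() {
  local name mem iso
  while IFS=: read -r name mem iso; do
    [ -z "$name" ] && continue
    # stdin from /dev/null so virt-install cannot swallow the rest of the table
    ${VIRT_INSTALL:-virt-install} --name="ocp4-${name}" --vcpus=4 --ram="${mem}" \
      --disk path="/dev/datavg/${name}lv",device=disk,bus=virtio,format=raw \
      --os-variant rhel8.0 --network network=openshift4,model=virtio \
      --boot menu=on --noautoconsole \
      --cdrom "${KVM_DIRECTORY}/rhcos_install-${iso}.iso" < /dev/null
  done <<< "$NODES"
}
```

Run `VIRT_INSTALL=echo create_vms` first to review the generated commands, then `create_vms` to actually create the VMs.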

# on workstation

# open http://192.168.7.11:9000/ to check

# if you want to stop or delete vm, try this

virsh list --all

virsh destroy ocp4-bootstrap

virsh destroy ocp4-master0

virsh destroy ocp4-master1

virsh destroy ocp4-master2

virsh destroy ocp4-worker0

virsh destroy ocp4-worker1

virsh destroy ocp4-worker2

virsh undefine ocp4-bootstrap

virsh undefine ocp4-master0

virsh undefine ocp4-master1

virsh undefine ocp4-master2

virsh undefine ocp4-worker0

virsh undefine ocp4-worker1

virsh undefine ocp4-worker2
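The same cleanup can be written as a loop. A sketch; the function name and the `VIRSH` override (set it to `echo` to print the commands instead of running them) are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: stop and remove all cluster VMs in one go.
cleanup_vms() {
  local vm
  for vm in ocp4-bootstrap ocp4-master{0..2} ocp4-worker{0..2}; do
    ${VIRSH:-virsh} destroy "$vm"    # fails harmlessly if the VM is already off
    ${VIRSH:-virsh} undefine "$vm"
  done
}
```

This does not touch the logical volumes; `create_lv` already runs `lvremove` before re-creating each one.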

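On the helper node

By now the installation has started automatically; all we need to do is go back to the helper node and watch.

During the bootstrap and master installation phase, use these commands to follow the progress.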

cd /data/install

export KUBECONFIG=/data/install/auth/kubeconfig

echo "export KUBECONFIG=/data/install/auth/kubeconfig" >> ~/.bashrc

oc completion bash | sudo tee /etc/bash_completion.d/openshift > /dev/null

cd /data/install

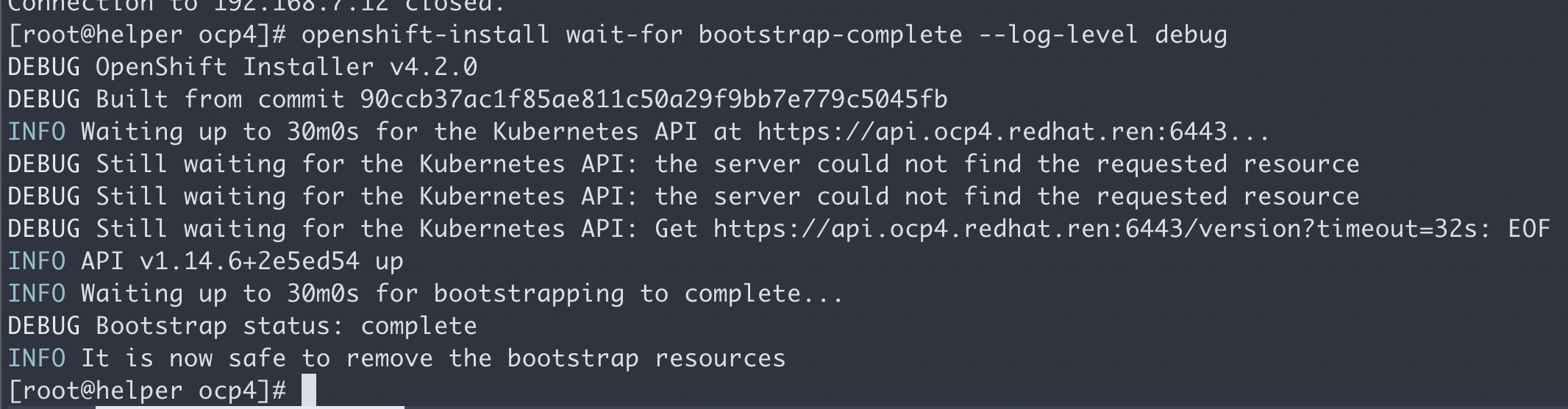

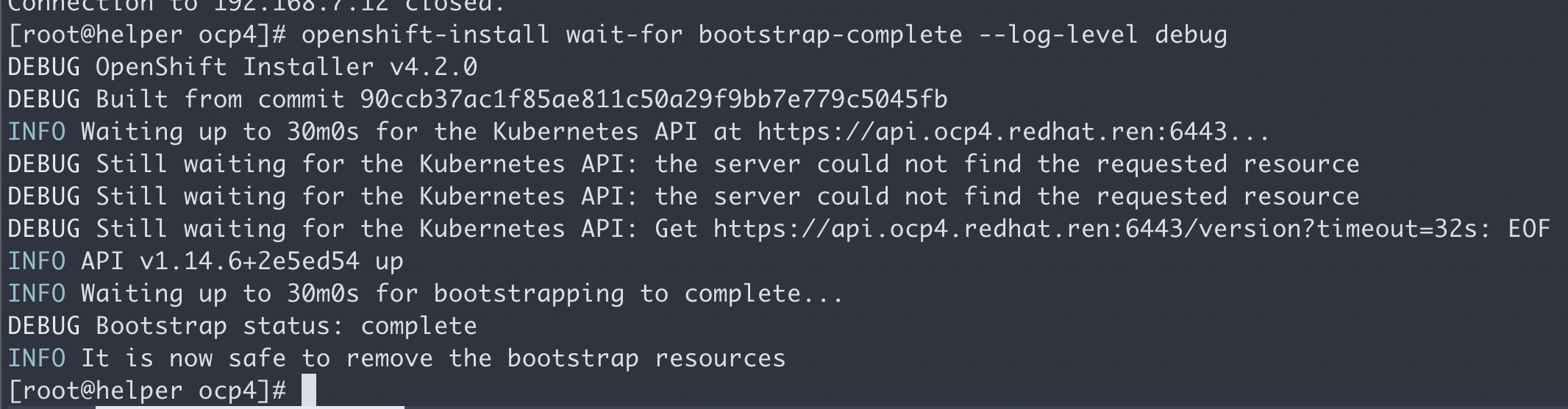

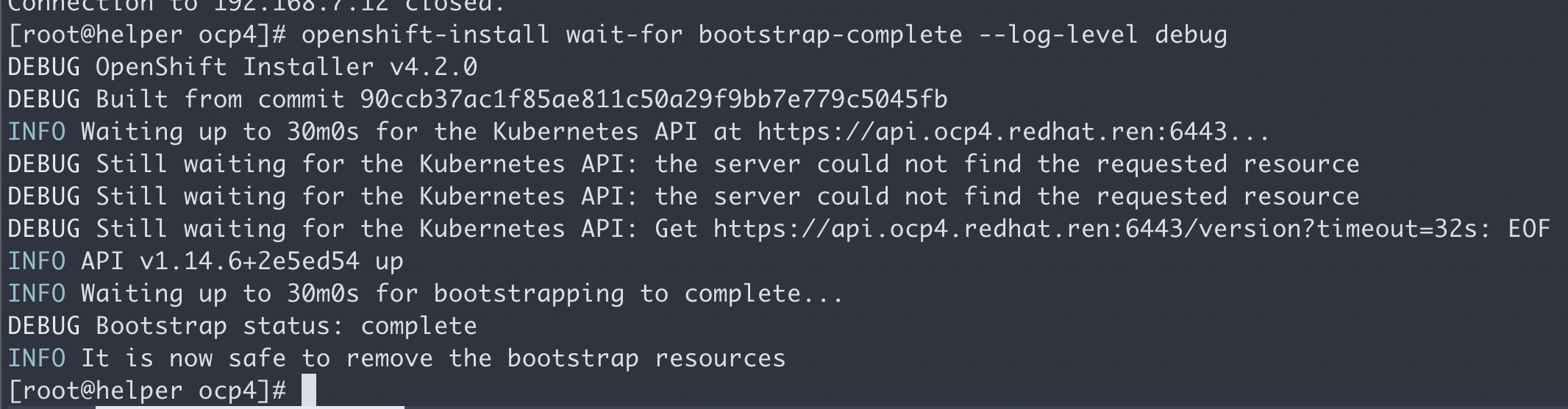

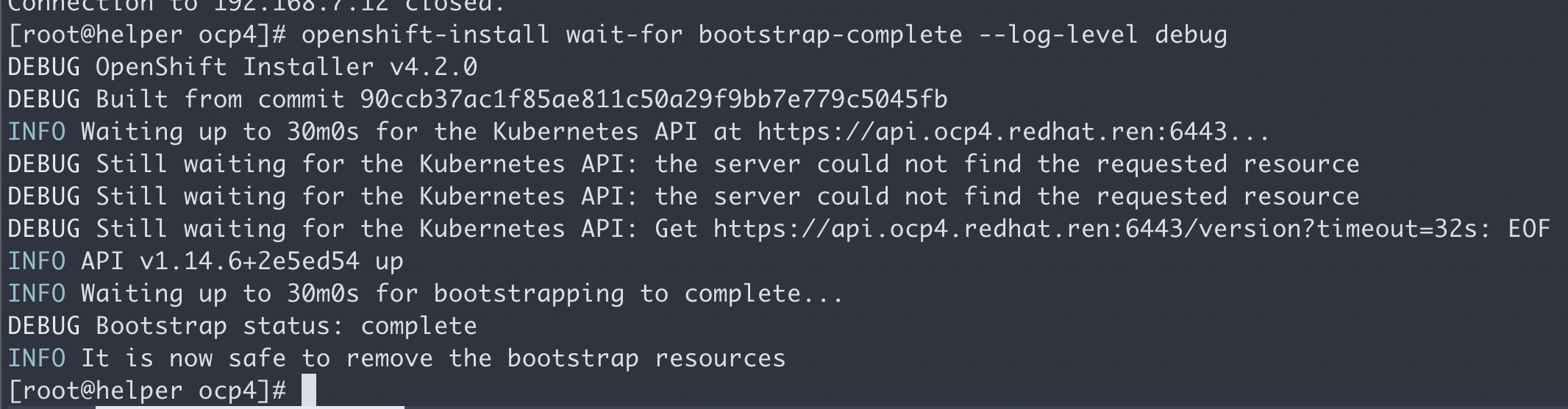

openshift-install wait-for bootstrap-complete --log-level debug

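If everything is fine, you will see this.

Sometimes the certificates have expired. To check, log in to the bootstrap node and look at the expiry dates. If they have indeed expired, delete all of the cached configuration files generated by openshift-install and start over.

echo | openssl s_client -connect localhost:6443 | openssl x509 -noout -text | grep Not

Generally, if you deleted the cached files as described before running openshift-install, the certificates will not expire.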
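Instead of reading the `Not Before`/`Not After` lines by eye, `openssl x509 -checkend` returns a pass/fail exit status. A sketch; the function name `cert_still_valid` is hypothetical, and it reads a PEM certificate on stdin:

```shell
#!/usr/bin/env bash
# Sketch: succeed if the PEM certificate on stdin is still valid for at least
# the given number of seconds (default 0, i.e. "not yet expired").
cert_still_valid() {
  local seconds=${1:-0}
  openssl x509 -noout -checkend "$seconds" > /dev/null
}
```

On the bootstrap node, for example: `echo | openssl s_client -connect localhost:6443 2>/dev/null | cert_still_valid 3600 && echo ok || echo "expired or expiring within an hour"`.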

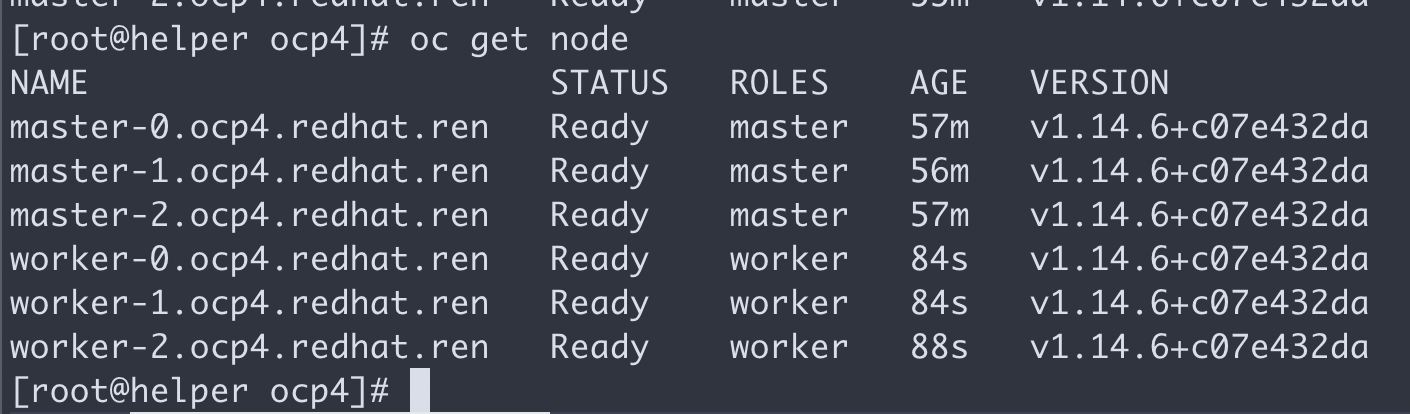

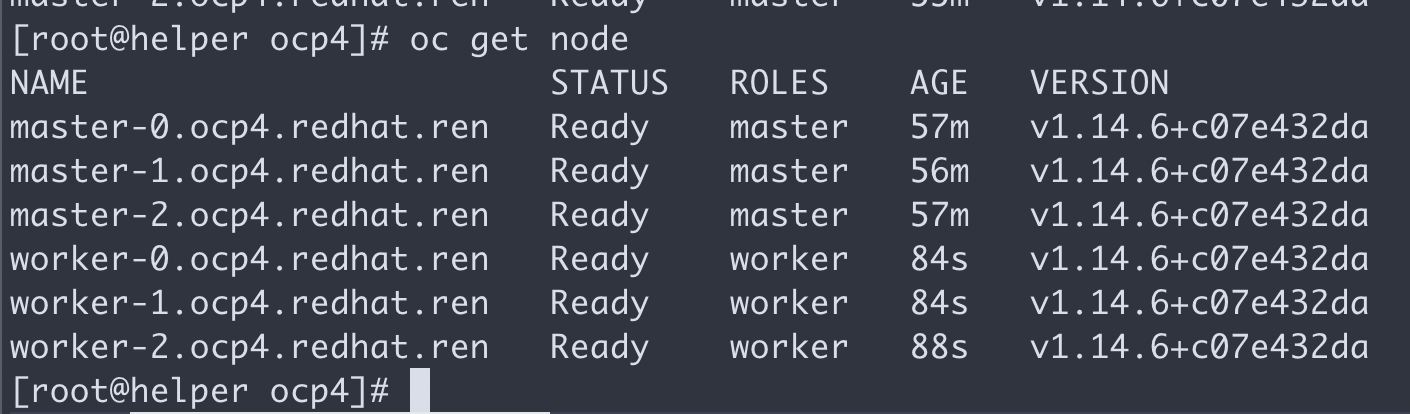

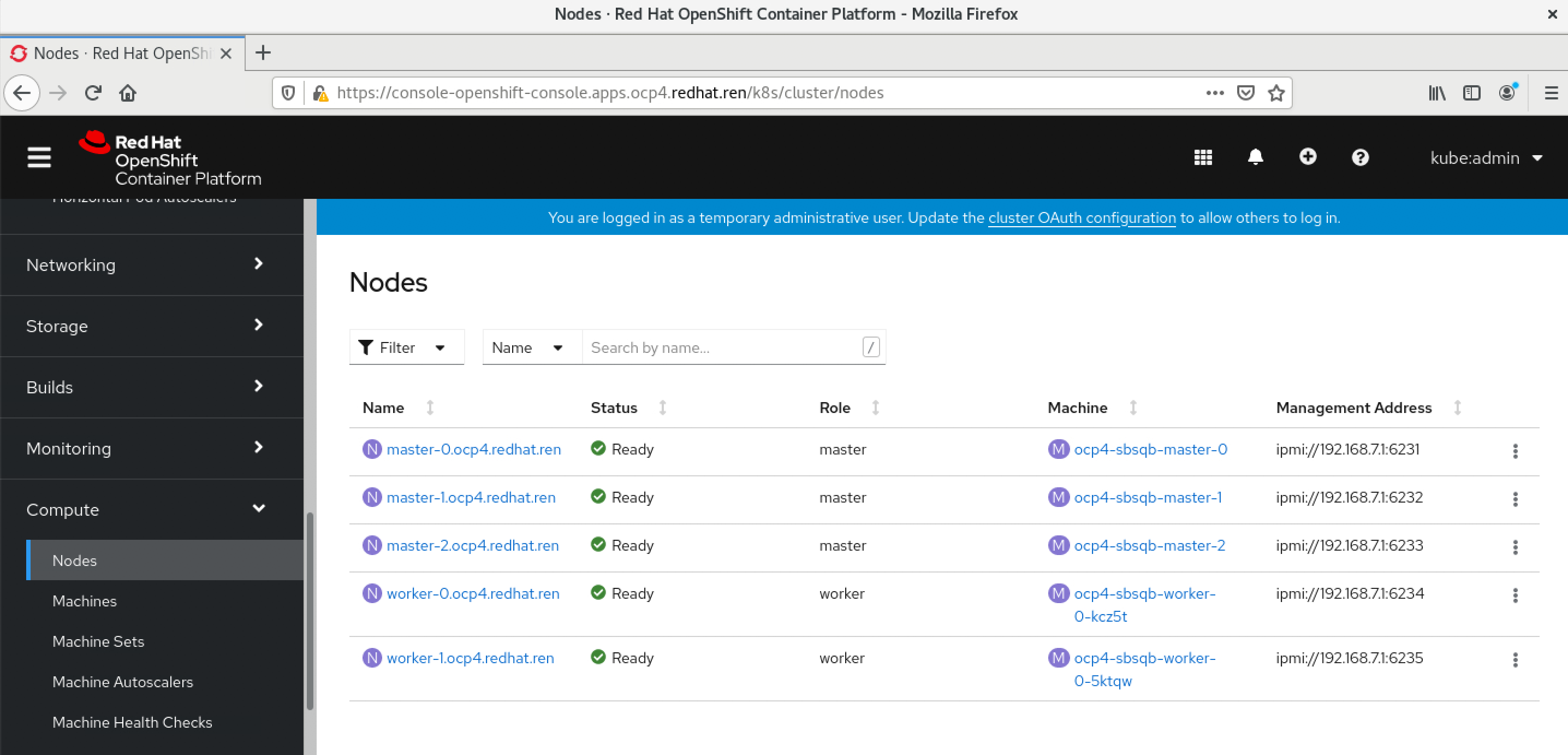

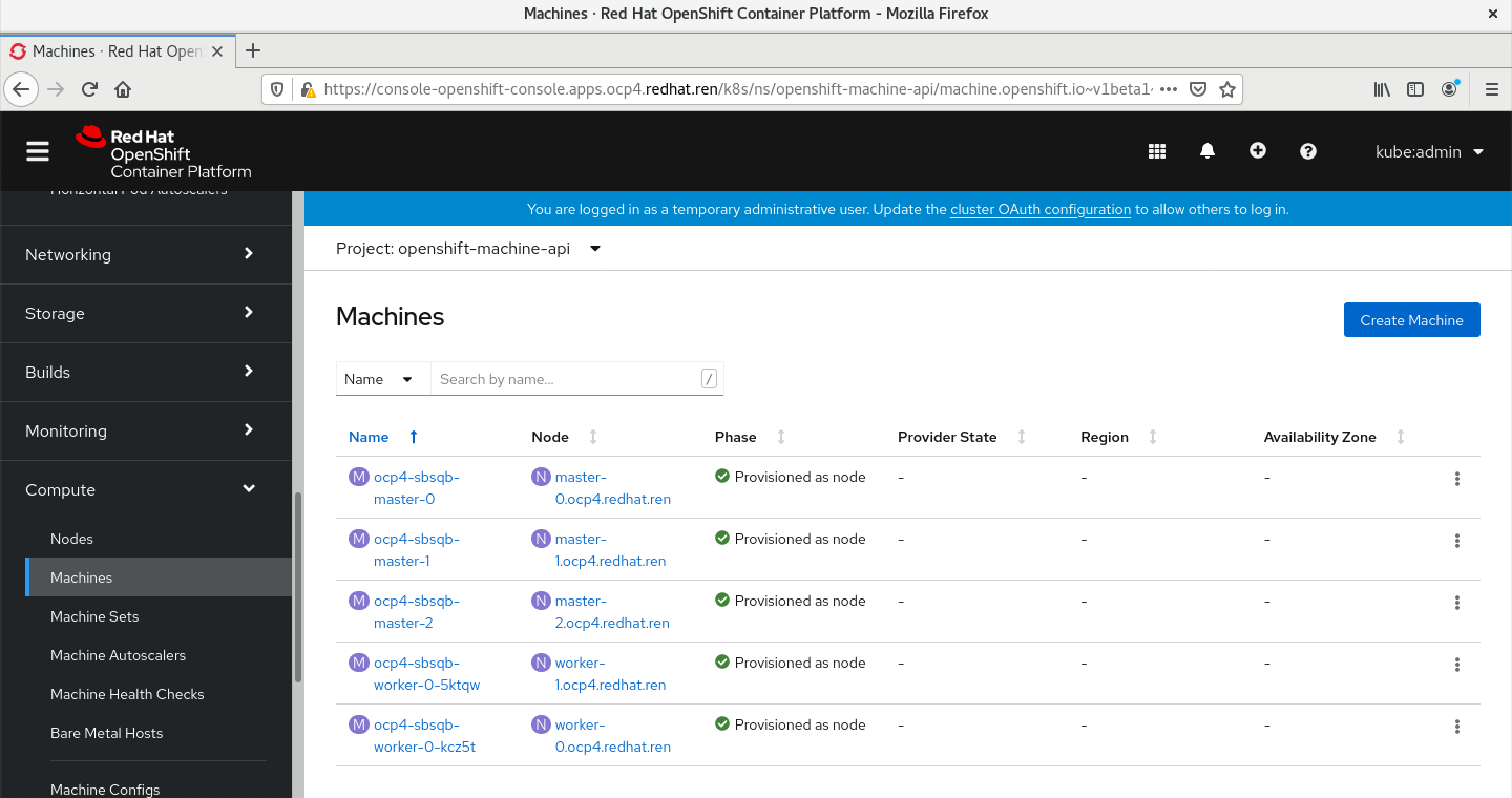

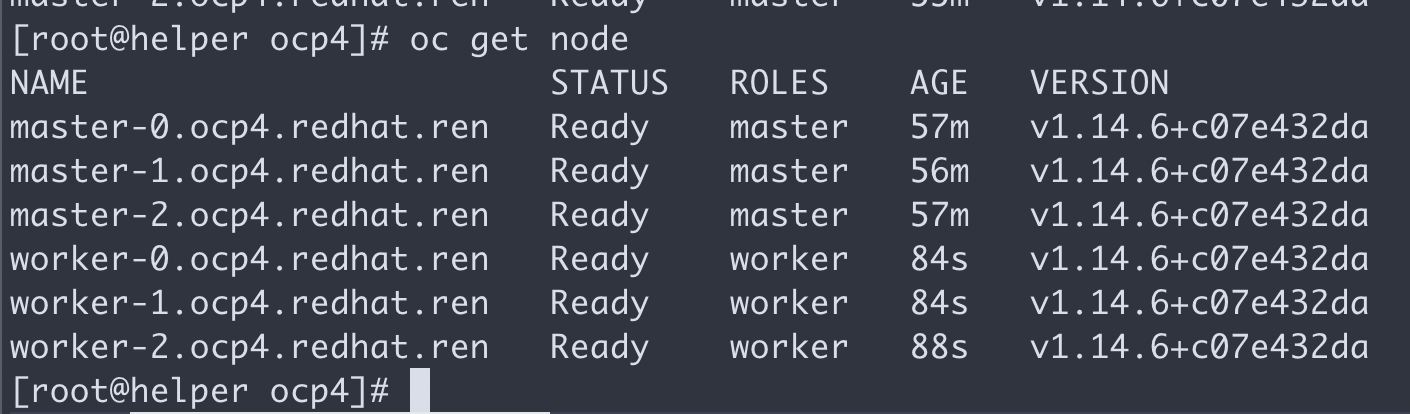

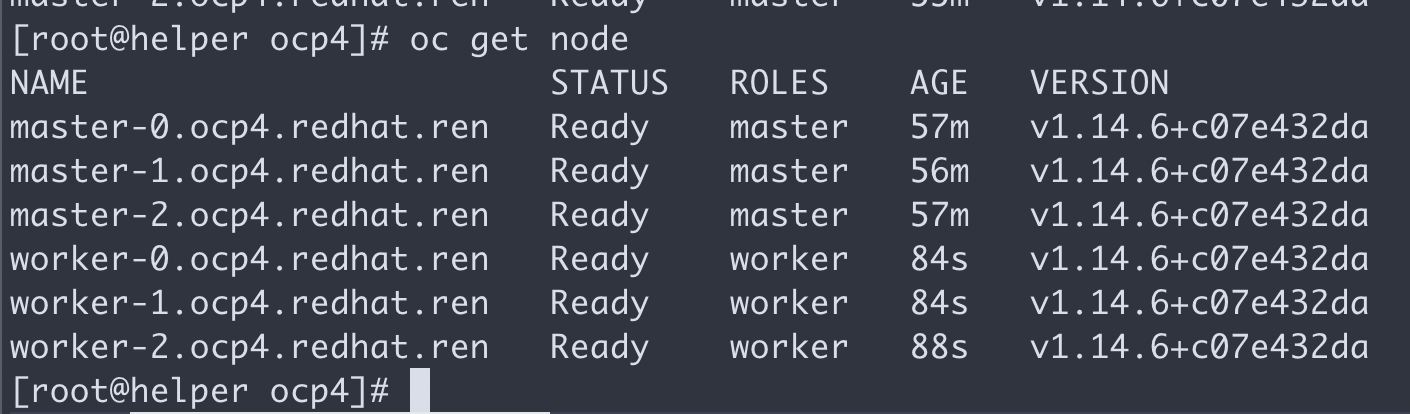

oc get nodes

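At this point only the masters are listed, because the workers' CSRs have not been approved yet. If you created all the VMs in one go, you will most likely not run into the problem below.

oc get csr

You will find many CSRs that have not been approved.

Approve them: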

yum -y install jq

oc get csr | grep -v Approved

oc get csr -ojson | jq -r '.items[] | select(.status == {} ) | .metadata.name' | xargs oc adm certificate approve

# oc get csr -o name | xargs oc adm certificate approve
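Worker CSRs usually arrive in more than one round (the kubelet's client CSR first, then its serving CSR), so the approval may have to be repeated. A sketch; it assumes the CONDITION column is the last column of `oc get csr --no-headers` (the `jq` filter above is more robust against output changes), and the function names, round count and 30-second interval are arbitrary, hypothetical choices:

```shell
#!/usr/bin/env bash
# Print the names of CSRs whose CONDITION (last column) is exactly "Pending".
pending_csr_names() {
  awk '$NF == "Pending" { print $1 }'
}

# Approve pending CSRs several times in a row, to catch later rounds.
approve_csrs_loop() {
  local rounds=${1:-10} names i
  for i in $(seq 1 "$rounds"); do
    names=$(oc get csr --no-headers | pending_csr_names)
    [ -n "$names" ] && echo "$names" | xargs oc adm certificate approve
    sleep 30
  done
}
```

A single pass is `oc get csr --no-headers | pending_csr_names | xargs -r oc adm certificate approve`.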

然后worker 节点cpu飙高,之后就能看到worker了。

等一会,会看到这个,就对了。

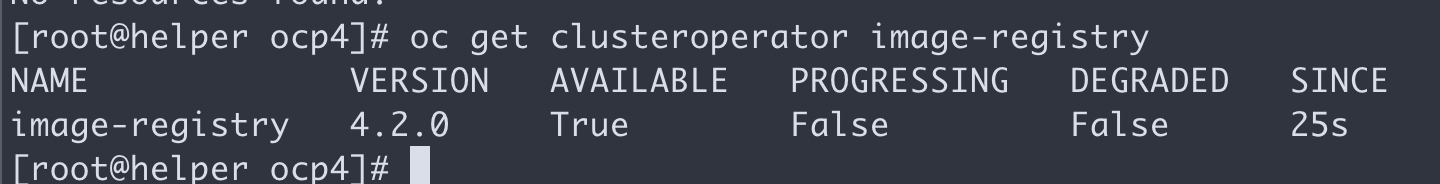

上面的操作完成以后,就可以完成最后的安装了

openshift-install wait-for install-complete --log-level debug

# here is the output

# INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/data/install/auth/kubeconfig'

# INFO Access the OpenShift web-console here: https://console-openshift-console.apps.ocp4.redhat.ren

# INFO Login to the console with user: "kubeadmin", and password: "7MXaT-vqouq-UukdG-uzNEi"

Our helper node provides NFS, so configure proper NFS-backed storage for the image registry instead of emptyDir.

bash /data/ocp4/ocp4-upi-helpernode-master/files/nfs-provisioner-setup.sh

# oc edit configs.imageregistry.operator.openshift.io

# edit the storage section:

# storage:
#   pvc:
#     claim:

oc patch configs.imageregistry.operator.openshift.io cluster -p '{"spec":{"managementState": "Managed","storage":{"pvc":{"claim":""}}}}' --type=merge

# or, to disable the internal image registry entirely:

oc patch configs.imageregistry.operator.openshift.io cluster -p '{"spec":{"managementState": "Removed"}}' --type=merge

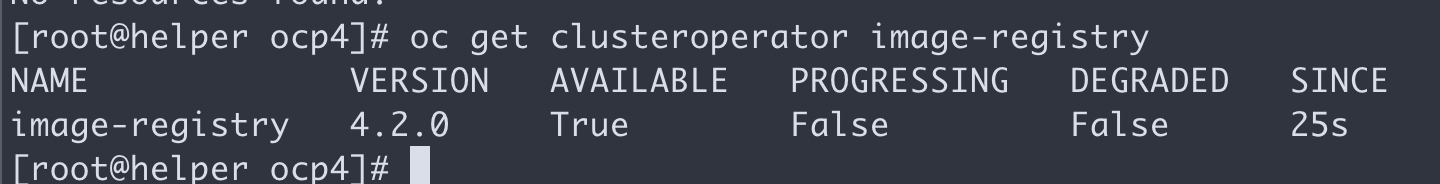

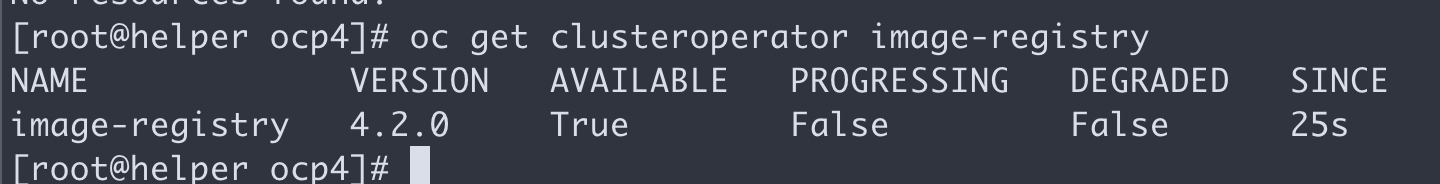

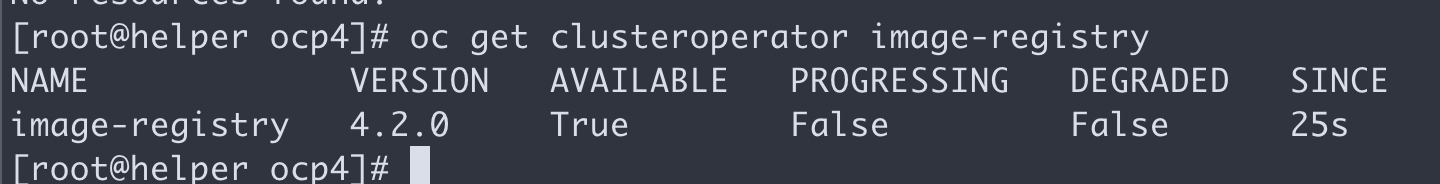

oc get clusteroperator image-registry

oc get configs.imageregistry.operator.openshift.io cluster -o yaml

# suspend the image pruner

# https://bugzilla.redhat.com/show_bug.cgi?id=1852501#c24

# oc patch imagepruner.imageregistry/cluster --patch '{"spec":{"suspend":true}}' --type=merge

# oc -n openshift-image-registry delete jobs --all

oc get configs.samples.operator.openshift.io/cluster -o yaml

# set managementState to one of Managed / Unmanaged / Removed, as needed:

oc patch configs.samples.operator.openshift.io/cluster -p '{"spec":{"managementState": "Managed"}}' --type=merge

oc patch configs.samples.operator.openshift.io/cluster -p '{"spec":{"managementState": "Unmanaged"}}' --type=merge

oc patch configs.samples.operator.openshift.io/cluster -p '{"spec":{"managementState": "Removed"}}' --type=merge

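Configure DNS on your local workstation (point *.apps.ocp4.redhat.ren to 192.168.7.11, the haproxy on the helper node); you can then open the web console in a browser.

chrony/NTP configuration

In OCP 4.6, NTP synchronization has to be configured. The ansible scripts we ran earlier have already created the NTP machine configs, so we just apply them to the cluster.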
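`/etc/hosts` cannot express a wildcard such as `*.apps...`, so without a DNS server each route name has to be listed explicitly. A sketch; the function name is hypothetical, and the host list assumes the default route names the console login flow uses (console, OAuth, downloads). Running `oc` from the workstation would additionally need `api.ocp4.redhat.ren`, which this sketch does not cover:

```shell
#!/usr/bin/env bash
# Sketch: print /etc/hosts lines for the routes the web console needs.
console_hosts_entries() {
  local ip=${1:-192.168.7.11} domain=${2:-apps.ocp4.redhat.ren} h
  for h in console-openshift-console oauth-openshift downloads-openshift-console; do
    printf '%s %s.%s\n' "$ip" "$h" "$domain"
  done
}
```

Usage: `console_hosts_entries | sudo tee -a /etc/hosts`.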

oc apply -f /data/ocp4/ocp4-upi-helpernode-master/machineconfig/
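We have not reproduced exactly what the ansible playbook writes into `machineconfig/`; the following is only a sketch of the general MachineConfig approach for chrony. It assumes the helper node (192.168.7.11) serves NTP, uses illustrative chrony options, and uses Ignition spec 3.1.0 as in OCP 4.6; the function name and the object name pattern are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: emit a MachineConfig that overwrites /etc/chrony.conf for one role.
chrony_machineconfig() {
  local role=$1 ntp_server=${2:-192.168.7.11} b64
  # file contents are embedded as a base64 data URL
  b64=$(printf 'server %s iburst\ndriftfile /var/lib/chrony/drift\nmakestep 1.0 3\nrtcsync\nlogdir /var/log/chrony\n' "$ntp_server" | base64 -w0)
  cat <<EOF
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  name: 99-${role}-chrony-configuration
  labels:
    machineconfiguration.openshift.io/role: ${role}
spec:
  config:
    ignition:
      version: 3.1.0
    storage:
      files:
      - path: /etc/chrony.conf
        mode: 420
        overwrite: true
        contents:
          source: data:text/plain;charset=utf-8;base64,${b64}
EOF
}
```

For example, `chrony_machineconfig worker | oc apply -f -`, and the same for `master`. Applying a MachineConfig makes the affected nodes reboot one by one.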

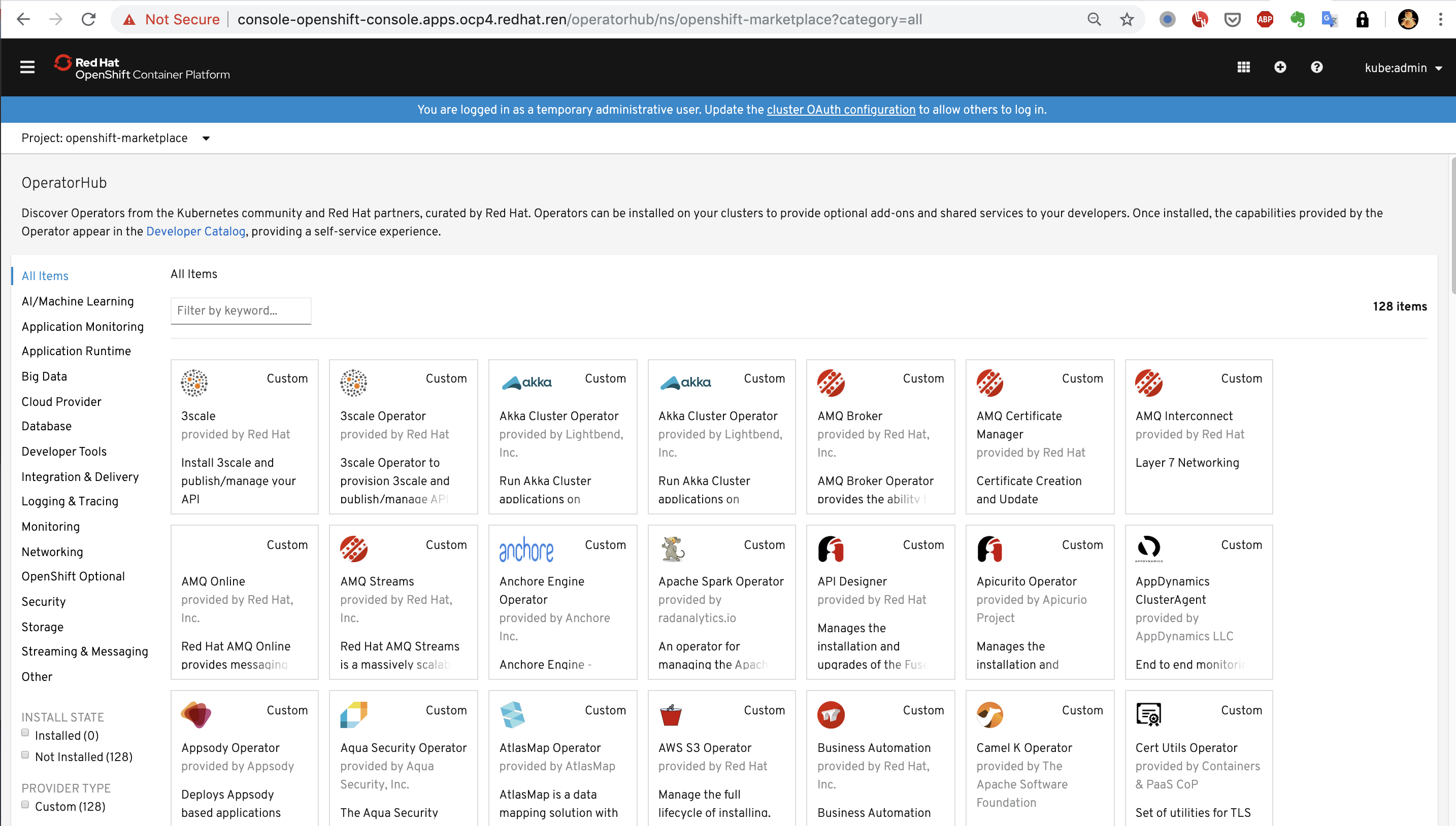

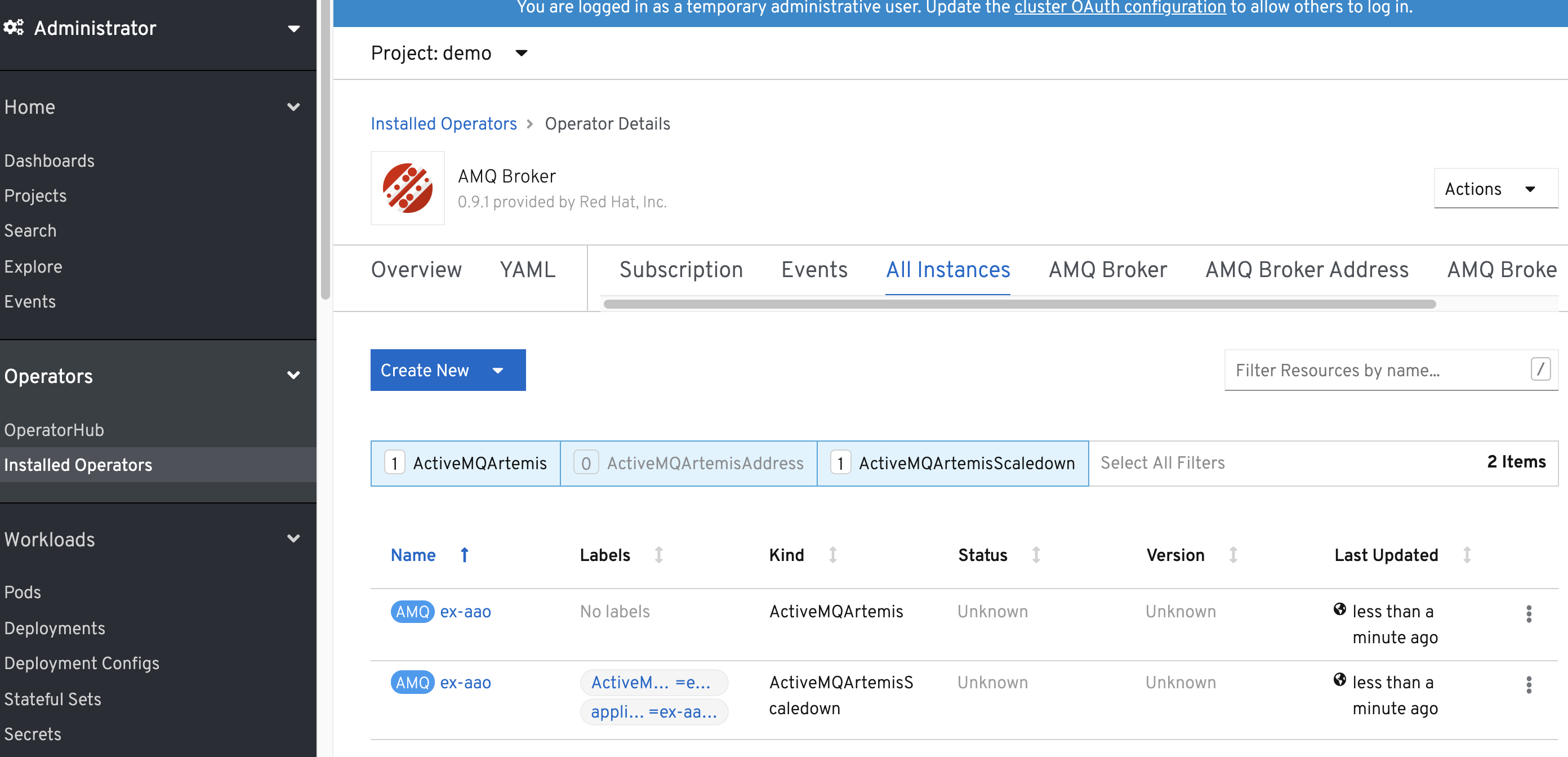

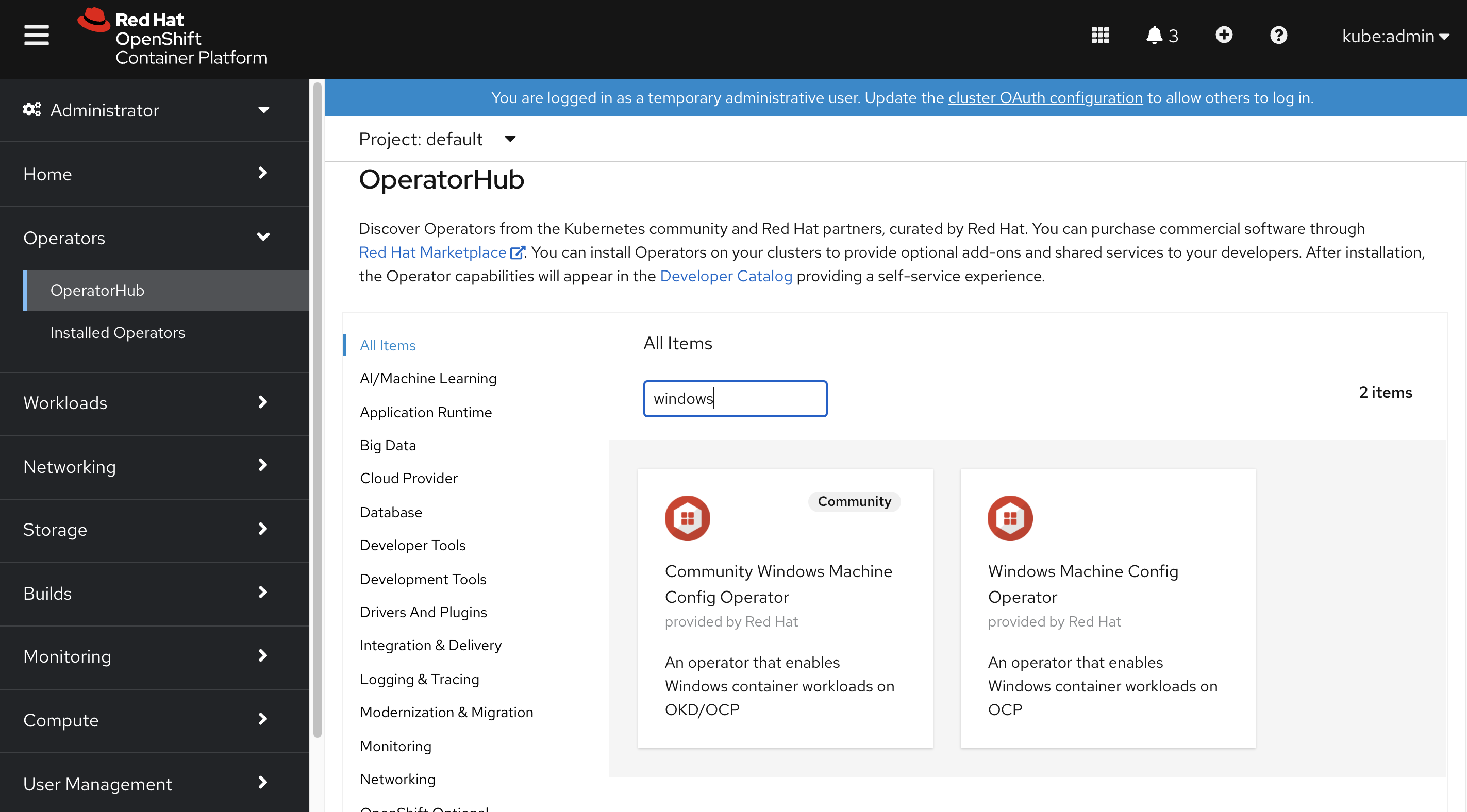

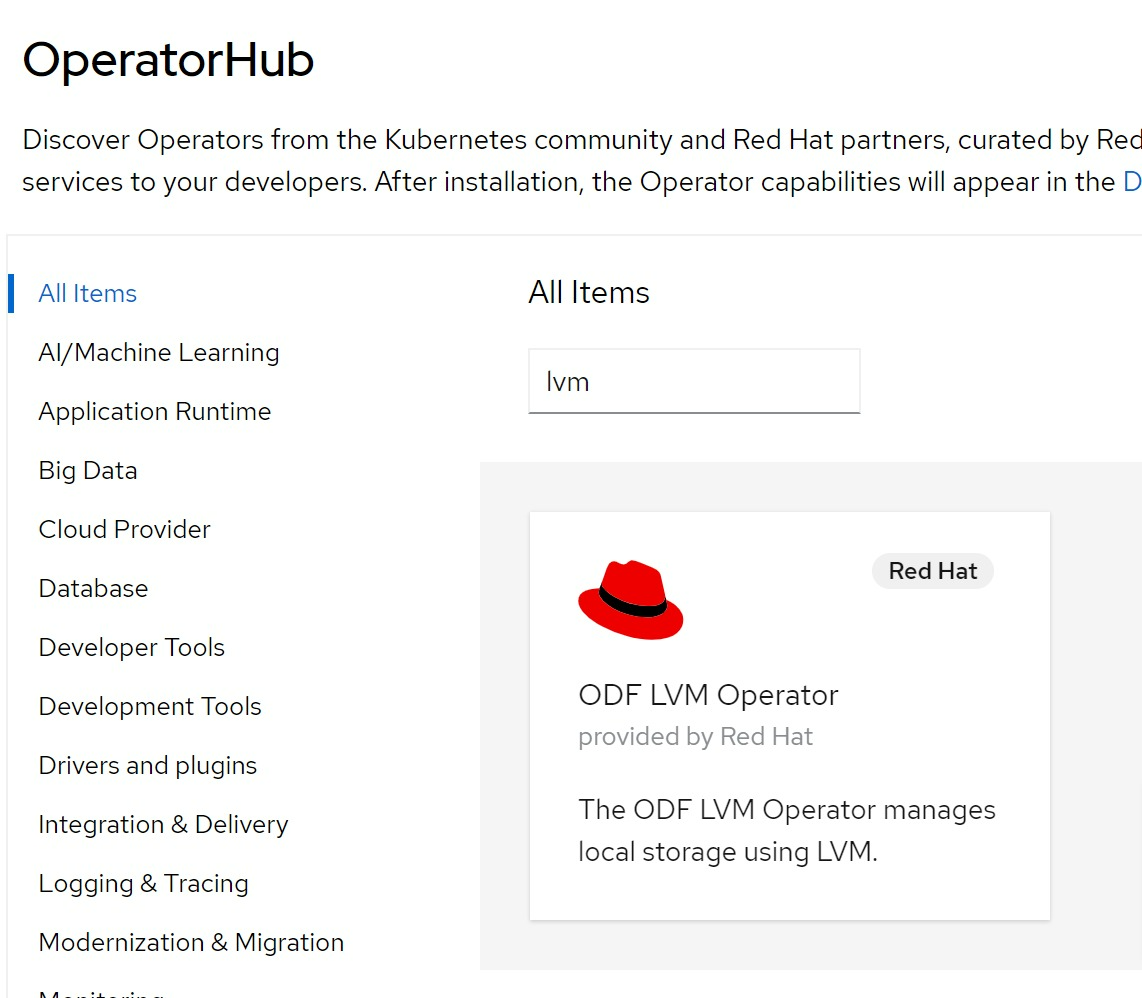

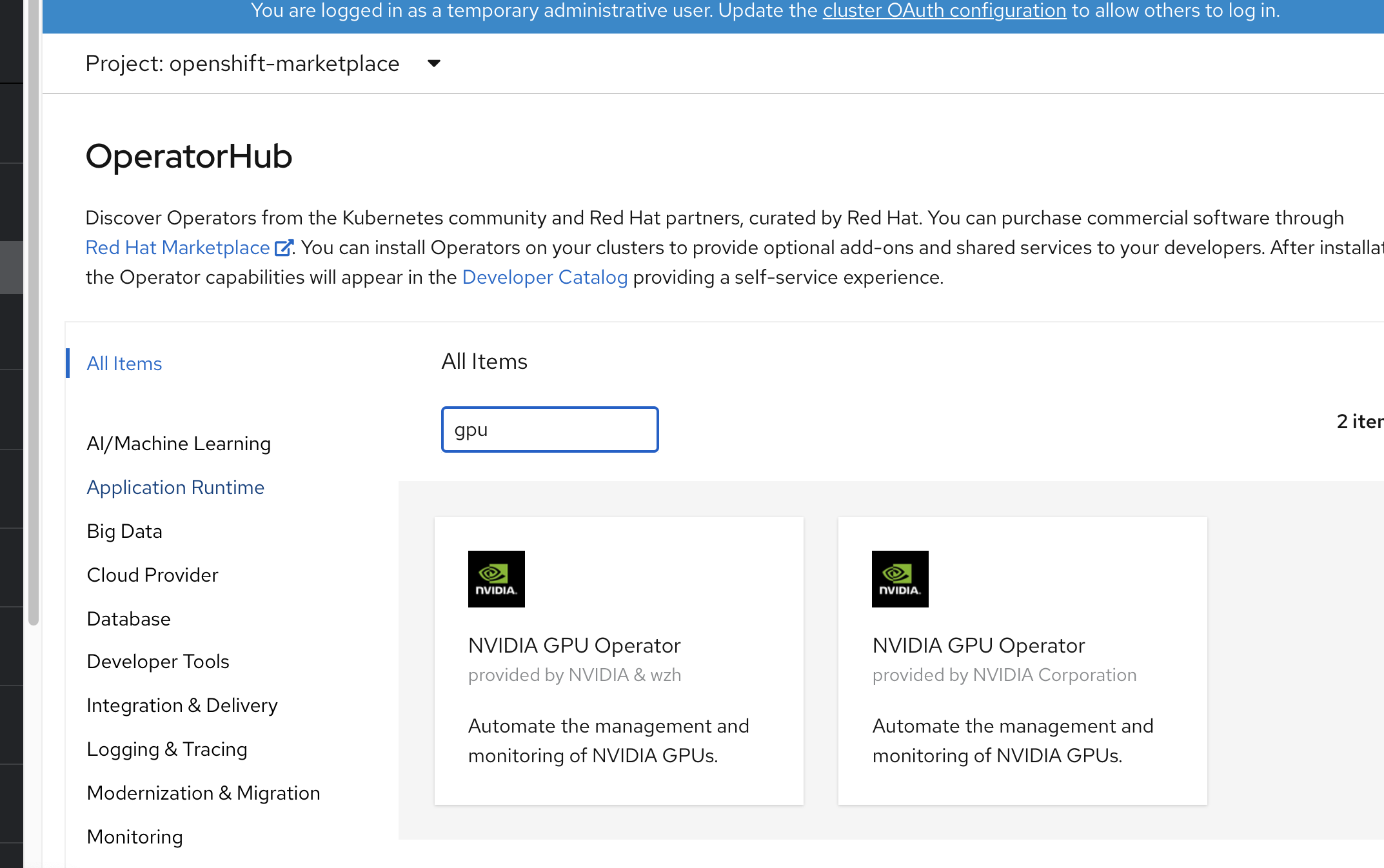

Operator Hub offline installation

https://docs.openshift.com/container-platform/4.2/operators/olm-restricted-networks.html

https://github.com/operator-framework/operator-registry

https://www.cnblogs.com/ericnie/p/11777384.html?from=timeline&isappinstalled=0

https://access.redhat.com/documentation/en-us/openshift_container_platform/4.2/html-single/images/index

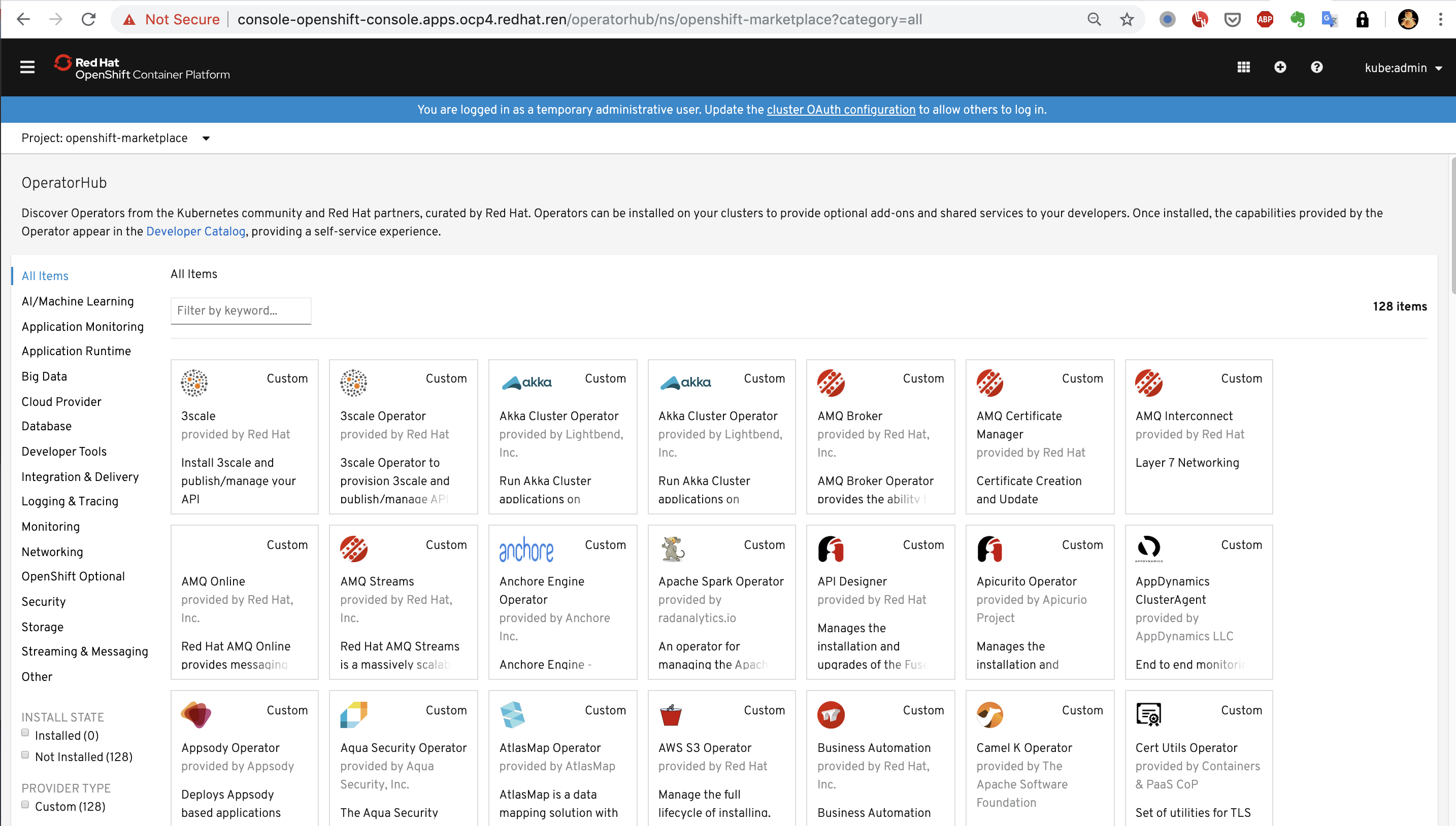

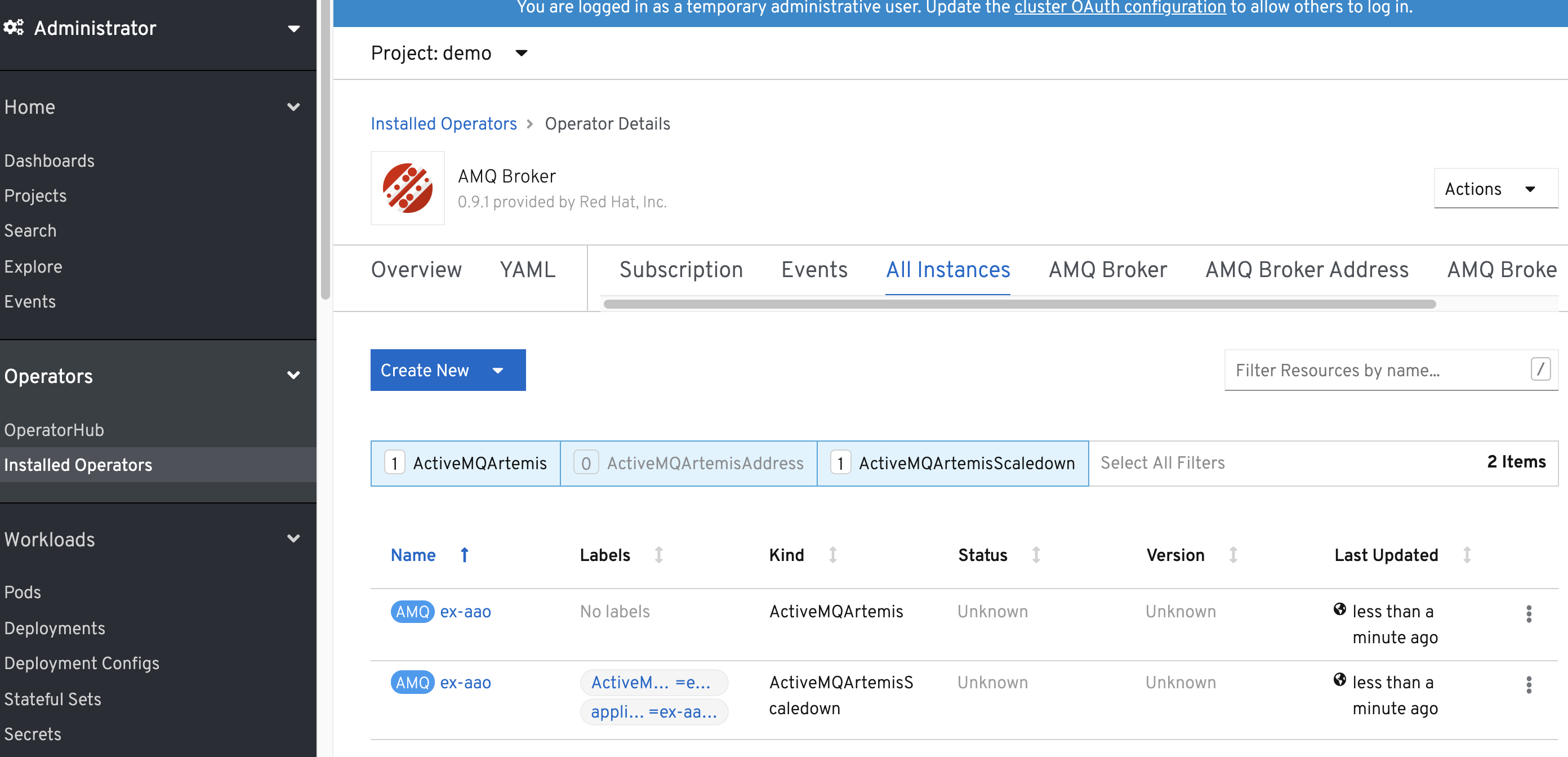

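Preparing the operator hub involves two layers. The first, described in this article, is building the offline operator hub resources and mirroring the operator images. With that done, an offline OCP 4.2 deployment shows the operator hub and can deploy operators. However, when an operator is then used to deploy its components, it pulls further images. Those images also have to be mirrored for offline use, but every operator needs different images and no central place lists them, so each project site has to mirror them separately as needed. This project tries to download as many of the required images as possible, but omissions cannot be ruled out for now.

# on helper node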

cd /data/ocp4

# scp /etc/crts/redhat.ren.crt 192.168.7.11:/root/ocp4/